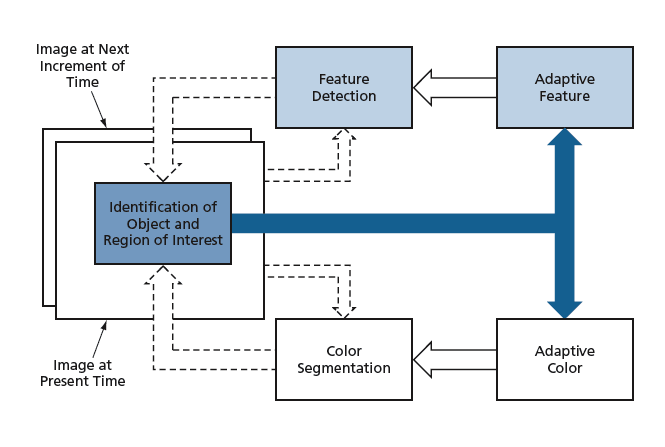

An improved adaptive method of processing image data in an artificial neural network has been developed to enable automated, real-time recognition of possibly moving objects under changing (including suddenly changing) conditions of illumination and perspective. The method involves a combination of two prior object-recognition methods — one based on adaptive detection of shape features and one based on adaptive color segmentation — to enable recognition in situations in which either prior method by itself may be inadequate.

One result of the interaction between the two component methods is to increase, beyond what would otherwise be possible, the accuracy with which a region of interest (containing an object that one seeks to recognize) is located within an image. Another result is to provide a minimized adaptive step that can be used to update the results obtained by the two component methods when changes of color and apparent shape occur. The net effect is to enable the neural network to update its recognition output and improve its recognition capability via an adaptive learning sequence.
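The idea of updating a color model in small adaptive steps can be illustrated with a toy example. The sketch below is not ACOSE or the authors' method: it is a plain color-distance threshold with a running-mean update, and every name in it (`make_frame`, `segment_by_color`, `bounding_box`, `track`), the synthetic 10-by-10 frame, the threshold, and the step size are assumptions made for illustration. It only shows why a model that adapts a little on each frame can keep locating a region of interest as illumination dims, while a fixed model loses it.

```python
import numpy as np

def make_frame(brightness):
    """Synthetic 10x10 RGB frame: a reddish 4x4 object on a black background."""
    frame = np.zeros((10, 10, 3), dtype=float)
    frame[2:6, 3:7] = brightness * np.array([200.0, 50.0, 50.0])
    return frame

def segment_by_color(frame, color_mean, threshold):
    """Boolean mask of pixels whose RGB distance to color_mean is below threshold."""
    dist = np.linalg.norm(frame - color_mean, axis=-1)
    return dist < threshold

def bounding_box(mask):
    """Smallest (row0, row1, col0, col1) box around the True pixels, or None."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    return (int(rows.min()), int(rows.max()) + 1,
            int(cols.min()), int(cols.max()) + 1)

def track(brightness_levels, step, threshold=45.0):
    """Segment each frame, then move the color model a fraction `step`
    toward the mean color observed inside the segmented region."""
    color_mean = np.array([200.0, 50.0, 50.0])  # assumed initial object color
    boxes = []
    for brightness in brightness_levels:
        frame = make_frame(brightness)
        mask = segment_by_color(frame, color_mean, threshold)
        boxes.append(bounding_box(mask))
        if mask.any():
            observed = frame[mask].mean(axis=0)
            color_mean = color_mean + step * (observed - color_mean)
    return boxes

levels = [1.0, 0.9, 0.8, 0.7, 0.6]          # illumination dims frame by frame
adaptive_boxes = track(levels, step=0.5)    # model follows the color drift
fixed_boxes = track(levels, step=0.0)       # model never updated

print("adaptive:", adaptive_boxes)
print("fixed:   ", fixed_boxes)
```

In the method described here, the region of interest would also be informed by the shape-feature detector; that half of the loop is deliberately left out of this sketch.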

In principle, the improved method could readily be implemented in integrated circuitry to make a compact, low-power, real-time object-recognition system. It has been proposed to demonstrate the feasibility of such a system by integrating a 256-by-256 active-pixel sensor with adaptive principal-component-analysis (APCA), adaptive color-segmentation (ACOSE), and neural processing circuitry on a single chip. It has been estimated that such a system on a chip would have a volume no larger than a few cubic centimeters, could operate at a rate as high as 1,000 frames per second, and would consume on the order of milliwatts of power.
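To put the quoted figures in perspective, the following back-of-the-envelope calculation derives the pixel throughput implied by a 256-by-256 sensor at 1,000 frames per second. The sensor size and frame rate come from the text; the 3 bytes per pixel (8-bit RGB) is an assumption, since the bit depth is not stated.

```python
def pixel_rate(width, height, frames_per_second):
    """Pixels that must be processed each second at the given frame rate."""
    return width * height * frames_per_second

WIDTH, HEIGHT, FPS = 256, 256, 1000   # figures quoted in the text
BYTES_PER_PIXEL = 3                   # assumption: 8-bit R, G, and B channels

pixels_per_second = pixel_rate(WIDTH, HEIGHT, FPS)
bytes_per_second = pixels_per_second * BYTES_PER_PIXEL
frame_budget_ms = 1000.0 / FPS        # time available to process one frame

print(f"{pixels_per_second:,} pixels/s")
print(f"{bytes_per_second:,} bytes/s (under the assumed bit depth)")
print(f"{frame_budget_ms} ms per frame")
```

Under these assumptions, each frame would have to be sensed and processed within about one millisecond, which helps explain the interest in placing the sensor and the processing circuitry on the same chip.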

This work was done by Tuan Duong, Vu Duong, and Allen Stubberud of Caltech for NASA's Jet Propulsion Laboratory.

In accordance with Public Law 96-517, the contractor has elected to retain title to this invention. Inquiries concerning rights for its commercial use should be addressed to:

Innovative Technology Assets Management

JPL

Mail Stop 202-233

4800 Oak Grove Drive

Pasadena, CA 91109-8099

(818) 354-2240

E-mail:

Refer to NPO-41370, volume and number of this NASA Tech Briefs issue, and the page number.

This Brief includes a Technical Support Package (TSP).

Object Recognition Using Feature- and Color-Based Methods (reference NPO-41370) is currently available for download from the TSP library.

Overview

The document discusses an approach to dynamic object recognition that combines feature-based and color-based methods for real-time use in challenging environments, such as NASA's planetary landing missions. The primary focus is on two technologies, Adaptive Principal Component Analysis (APCA) and Adaptive Color Segmentation (ACOSE), which work together to improve recognition.
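As a rough illustration of the feature-based half, the sketch below uses ordinary batch principal component analysis (computed with NumPy's SVD) to score how well an image patch matches a learned appearance subspace. This is standard PCA, not APCA, whose adaptive update rule is not described here; the function names `fit_pca` and `reconstruction_error`, the 16-element patches, and the synthetic training data are all assumptions.

```python
import numpy as np

def fit_pca(samples, n_components):
    """Return (mean, components) for row-vector samples using SVD-based PCA."""
    mean = samples.mean(axis=0)
    _, _, vt = np.linalg.svd(samples - mean, full_matrices=False)
    return mean, vt[:n_components]

def reconstruction_error(patch, mean, components):
    """Distance between a patch and its projection onto the PCA subspace.
    A small value means the patch resembles the training data."""
    centered = patch - mean
    projected = components.T @ (components @ centered)
    return float(np.linalg.norm(centered - projected))

rng = np.random.default_rng(0)
basis = rng.normal(size=(2, 16))              # hypothetical object appearance subspace
samples = rng.normal(size=(50, 2)) @ basis    # synthetic training patches
mean, components = fit_pca(samples, n_components=2)

object_patch = np.array([0.5, -1.0]) @ basis  # lies in the learned subspace
clutter_patch = rng.normal(size=16)           # unrelated background patch

err_object = reconstruction_error(object_patch, mean, components)
err_clutter = reconstruction_error(clutter_patch, mean, components)
print("object-like patch error:", err_object)
print("clutter patch error:    ", err_clutter)
```

A patch with a low reconstruction error is a candidate for the region of interest; an adaptive variant would update the components incrementally as the object's appearance changes rather than refitting from scratch.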

The problem addressed is landing-site identification during the landing phase of a mission, when sudden changes in light intensity and in the apparent shapes of objects complicate recognition. Because traditional methods often struggle under these conditions, the document proposes a closed-loop technique that integrates adaptive feature-based and color-based methods. This dual approach is intended for dynamic environments in which a single technique may be insufficient.

The document highlights the advantages of implementing these technologies as a system on a chip that integrates a 256-by-256 RGB active-pixel sensor (APS) with APCA and ACOSE circuitry in a compact, lightweight package. This integration is expected to enable real-time adaptive processing at rates of up to 1,000 frames per second with low power consumption and small size. Such a system would be particularly useful for NASA and Department of Defense (DoD) applications, where performance and efficiency are critical.

The novelty of this approach lies in its ability to create a closed-loop interaction between the adaptive methods, allowing for optimal identification of regions of interest even when objects undergo changes in position, rotation, or texture. The document emphasizes that this innovative technique can significantly enhance the exploration capabilities of NASA missions by reducing costs and improving the reliability of landing site identification.

In summary, the document describes an object-recognition system that uses adaptive techniques to handle real-time image processing in dynamic environments. By combining feature-based and color-based methods, the proposed solution aims to improve landing-site identification for planetary missions, and the same approach may apply in other fields.