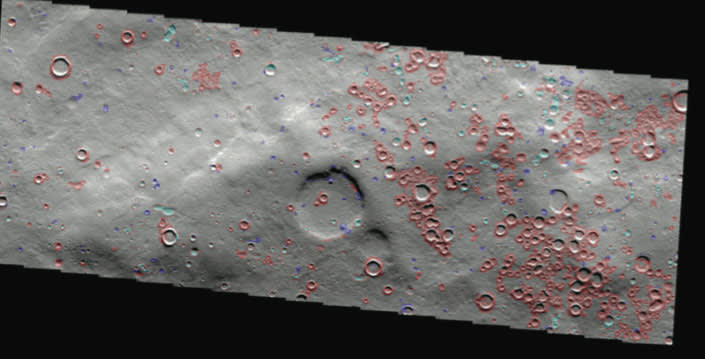

NASA’s planetary missions have collected, and continue to collect, massive volumes of orbital imagery. The volume is such that it is difficult to manually review all of the data and determine its significance. As a result, images are indexed and searchable by location and date but generally not by their content. A new automated method analyzes images and identifies “landmarks,” or visually salient features such as gullies, craters, dust devil tracks, and the like. This technique uses a statistical measure of salience derived from information theory, so it is not associated with any specific landmark type. It identifies regions that are unusual or that stand out from their surroundings, so the resulting landmarks are context-sensitive areas that can be used to recognize the same area when it is encountered again.
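The brief does not give the salience formula. As a hedged illustration only, one information-theoretic way to measure how much a region stands out is the Kullback-Leibler divergence between the intensity histogram $p_{x,y}$ of a window centered on pixel $(x, y)$ and the histogram $q$ of its context (for example, the whole image), both taken over $B$ intensity bins:

$$
S(x, y) = D_{\mathrm{KL}}\left(p_{x,y} \,\|\, q\right) = \sum_{b=1}^{B} p_{x,y}(b) \log \frac{p_{x,y}(b)}{q(b)}
$$

Bins with $p_{x,y}(b) = 0$ contribute nothing, and in practice a small constant is added to $q$ to avoid division by zero. $S$ is zero when the window's histogram matches its context and grows as the window departs from it, whether the cause is a crater rim, a dark streak, or anything else. That is why a measure of this kind is not tied to one landmark type.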

This method enables fast, automated identification of landmarks to augment or replace manual analysis; fast, automated classification of landmarks to provide semantic annotations; and content-based searches over image archives.

Automated landmark detection in images permits the creation of a summary catalog of all such features in an image database, such as the Planetary Data System (PDS). It could enable entirely new searches for PDS images, based on the desired content (landmark types). In the near future, landmark identification methods using Gabor filters (texture) or covariance descriptors will also be investigated for this application.
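To make the catalog idea concrete, here is a minimal Python sketch of an inverted index from landmark type to image, assuming detection and classification have already produced typed landmarks. The `Landmark` and `LandmarkCatalog` names and the image IDs are hypothetical; this is not a PDS interface.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Landmark:
    image_id: str        # hypothetical source-image identifier
    landmark_type: str   # e.g. "crater", "dark slope streak", "dust devil track"
    x: int               # landmark centroid, in image pixels
    y: int


class LandmarkCatalog:
    """Hypothetical inverted index: landmark type -> IDs of images containing it."""

    def __init__(self) -> None:
        self._by_type: dict = defaultdict(set)

    def add(self, landmark: Landmark) -> None:
        self._by_type[landmark.landmark_type].add(landmark.image_id)

    def images_with(self, *types: str) -> set:
        """IDs of images that contain at least one landmark of every requested type."""
        if not types:
            return set()
        result = set(self._by_type.get(types[0], set()))
        for t in types[1:]:
            result &= self._by_type.get(t, set())
        return result
```

For example, after adding a crater and a dust devil track from one image and a crater from another, `images_with("crater")` returns both image IDs, while `images_with("crater", "dust devil track")` returns only the first. A content-based search over an archive reduces to set intersections over such an index.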

This work was done by Kiri L. Wagstaff and Julian Panetta of Caltech; Norbert Schorghofer of the University of Hawaii; and Ronald Greeley, Mary Pendleton Hoffer, and Melissa Bunte of Arizona State University for NASA’s Jet Propulsion Laboratory. For more information, download the Technical Support Package (free white paper) at www.techbriefs.com/tsp under the Information Sciences category. NPO-46674

This Brief includes a Technical Support Package (TSP). "Landmark Detection in Orbital Images Using Salience Histograms" (reference NPO-46674) is currently available for download from the TSP library.


Overview

The document titled "Landmark Detection in Orbital Images Using Salience Histograms" presents research conducted by a team from NASA's Jet Propulsion Laboratory (JPL) and partner institutions, focusing on the automated detection and classification of landmarks in Martian orbital imagery. The study aims to enhance the understanding of Martian surface features by employing advanced image processing techniques.

The research outlines a systematic approach to landmark detection that computes a statistical salience value at each pixel of an image. The computation uses intensity histograms from sliding windows to identify salient features such as craters, dark slope streaks, and dust devil tracks. The document emphasizes context-sensitive landmark identification, in which landmarks are evaluated relative to their surroundings rather than against predefined criteria.
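The per-pixel computation can be sketched in Python as below. This is a hedged illustration, not the authors' code: the brief says salience comes from intensity histograms of sliding windows but does not give the exact statistic, so the sketch assumes salience is the Kullback-Leibler divergence between a pixel's window histogram and the whole-image histogram, and that candidate landmark pixels are those above a fixed threshold. The window size, bin count, threshold, and function names are illustrative choices.

```python
import math


def histogram(values, bins=8, vmax=255):
    """Normalized intensity histogram with `bins` equal-width bins over [0, vmax]."""
    counts = [0] * bins
    for v in values:
        counts[min(int(v * bins / (vmax + 1)), bins - 1)] += 1
    return [c / len(values) for c in counts]


def kl_divergence(p, q, eps=1e-6):
    """D_KL(p || q) in nats; eps keeps sparse context bins from dividing by zero."""
    return sum(pi * math.log(pi / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def salience_map(image, half=1, bins=8):
    """Salience of each pixel: KL divergence between the histogram of its
    (2*half+1) x (2*half+1) window (clipped at the border) and the
    whole-image histogram, which serves as the assumed context model."""
    h, w = len(image), len(image[0])
    context = histogram([v for row in image for v in row], bins)
    sal = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [image[j][i]
                      for j in range(max(0, y - half), min(h, y + half + 1))
                      for i in range(max(0, x - half), min(w, x + half + 1))]
            sal[y][x] = kl_divergence(histogram(window, bins), context)
    return sal


def landmark_pixels(sal, threshold):
    """Candidate landmark pixels (x, y) whose salience exceeds `threshold`."""
    return {(x, y) for y, row in enumerate(sal)
            for x, s in enumerate(row) if s > threshold}
```

On a uniform bright 7x7 test image containing one dark pixel, only the nine pixels whose 3x3 windows include the dark pixel exceed a threshold of 0.05. The measure flags whatever departs from its context rather than any predefined feature shape; in a real pipeline the flagged pixels would still need to be grouped into connected regions.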

The study evaluates several classification methods, including naive Bayes, support vector machines, neural networks, and decision trees, and reports high accuracy when classifying manually annotated landmarks. The classifiers were trained on a dataset of 788 landmarks: 41 craters, 91 dark slope streaks, and 656 dust devil tracks. The results indicate that classification of manually outlined landmarks is highly reliable, whereas automated landmark detection remains more challenging.
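Naive Bayes is the simplest of the classifier families named above, so it makes a compact illustration. The sketch below is a Gaussian naive Bayes written from scratch, assuming each landmark is described by a numeric attribute vector. The feature choice (mean albedo, eccentricity), the Gaussian likelihood, and the toy values are hypothetical, not the study's configuration or data.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y, var_floor=1e-9):
    """Estimate per-class prior, feature means, and feature variances."""
    groups = defaultdict(list)
    for features, label in zip(X, y):
        groups[label].append(features)
    model = {}
    for label, rows in groups.items():
        d = len(rows[0])
        means = [sum(r[k] for r in rows) / len(rows) for k in range(d)]
        variances = [max(sum((r[k] - means[k]) ** 2 for r in rows) / len(rows), var_floor)
                     for k in range(d)]
        model[label] = (len(rows) / len(X), means, variances)
    return model


def predict(model, features):
    """Return the label maximizing log P(class) + sum_k log N(x_k; mean_k, var_k)."""
    def log_posterior(params):
        prior, means, variances = params
        lp = math.log(prior)
        for x, m, v in zip(features, means, variances):
            lp += -0.5 * math.log(2 * math.pi * v) - (x - m) ** 2 / (2 * v)
        return lp
    return max(model, key=lambda label: log_posterior(model[label]))


# Hypothetical (mean albedo, eccentricity) attribute vectors.
X = [(0.45, 0.10), (0.50, 0.15), (0.48, 0.05),
     (0.20, 0.95), (0.25, 0.90), (0.22, 0.97)]
y = ["crater"] * 3 + ["dark slope streak"] * 3
model = fit_gaussian_nb(X, y)
```

Class priors matter when reading accuracy figures: with the counts above, dust devil tracks make up 656 of 788 landmarks (about 83%), so a classifier that always predicted that class would already be right roughly 83% of the time.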

Key attributes for landmark classification are computed, including albedo, size, shape, and ruggedness. The document details the methods used to derive these attributes, such as mean albedo, area, perimeter, and eccentricity, which are essential for distinguishing between different types of landmarks.
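The brief names these attributes but not their exact definitions. A minimal sketch of one common way to compute four of them from a binary landmark mask follows. The 4-connected edge-count perimeter and the moment-based eccentricity (that of the ellipse with the same second central moments) are assumptions, and ruggedness is omitted because its definition is not given.

```python
import math


def region_attributes(image, mask):
    """Mean albedo, area, perimeter, and eccentricity of one landmark.

    `image` is a 2-D list of albedo/intensity values; `mask` is a same-sized
    2-D list of booleans marking the landmark's pixels (assumed non-empty)."""
    pixels = [(x, y) for y, row in enumerate(mask) for x, inside in enumerate(row) if inside]
    area = len(pixels)
    mean_albedo = sum(image[y][x] for x, y in pixels) / area

    # Perimeter: count pixel edges that border a non-landmark pixel or the image edge.
    h, w = len(mask), len(mask[0])

    def inside(x, y):
        return 0 <= x < w and 0 <= y < h and mask[y][x]

    perimeter = sum(not inside(x + dx, y + dy)
                    for x, y in pixels
                    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)))

    # Eccentricity from the eigenvalues of the pixel-coordinate covariance matrix.
    cx = sum(x for x, _ in pixels) / area
    cy = sum(y for _, y in pixels) / area
    mxx = sum((x - cx) ** 2 for x, _ in pixels) / area
    myy = sum((y - cy) ** 2 for _, y in pixels) / area
    mxy = sum((x - cx) * (y - cy) for x, y in pixels) / area
    spread = math.sqrt(((mxx - myy) / 2) ** 2 + mxy ** 2)
    lam_major = (mxx + myy) / 2 + spread
    lam_minor = (mxx + myy) / 2 - spread
    ecc = math.sqrt(max(0.0, 1 - lam_minor / lam_major)) if lam_major > 0 else 0.0

    return {"mean_albedo": mean_albedo, "area": area,
            "perimeter": perimeter, "eccentricity": ecc}
```

A compact, roughly round crater yields eccentricity near 0, while a long, thin dark slope streak or dust devil track approaches 1, which is one reason shape attributes help separate the classes.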

The research also highlights future directions, including improving landmark detection techniques by exploring texture-based and covariance-descriptor approaches. Additionally, the team aims to perform change detection in overlapping images to identify new, vanished, or altered landmarks over time.
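Change detection was future work in this study, so the following is only a sketch of one plausible approach. It assumes the two images are already co-registered and that each landmark is reduced to a hypothetical `(x, y, area)` tuple. Landmarks are paired greedily by nearest centroid; unpaired ones count as vanished or new, and paired ones whose area changed by more than a relative tolerance count as altered. The distance and area thresholds are illustrative.

```python
import math


def compare_landmarks(before, after, max_dist, area_tol=0.25):
    """Classify landmarks from two co-registered images as vanished, new, or altered.

    Each landmark is a hypothetical (x, y, area) tuple. Centroids are matched
    greedily, closest pairs first, within `max_dist` pixels; a matched pair is
    'altered' if its area changed by more than `area_tol` (relative)."""
    candidates = sorted(
        (math.dist(b[:2], a[:2]), i, j)
        for i, b in enumerate(before)
        for j, a in enumerate(after)
        if math.dist(b[:2], a[:2]) <= max_dist
    )
    used_before, used_after, altered = set(), set(), []
    for _, i, j in candidates:
        if i in used_before or j in used_after:
            continue  # each landmark may be matched at most once
        used_before.add(i)
        used_after.add(j)
        old_area, new_area = before[i][2], after[j][2]
        if abs(new_area - old_area) > area_tol * old_area:
            altered.append((i, j))
    vanished = [i for i in range(len(before)) if i not in used_before]
    new = [j for j in range(len(after)) if j not in used_after]
    return vanished, new, altered
```

Greedy matching is simple and adequate when landmarks are sparse relative to `max_dist`; with dense fields, an optimal assignment method would avoid mismatches. `math.dist` requires Python 3.8 or later.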

Overall, the document underscores the significance of automated landmark detection in enhancing the analysis of Martian surface features, facilitating content-based searches in existing data archives, and contributing to the broader goals of planetary exploration. The findings are expected to have implications not only for Mars research but also for other planetary bodies, showcasing the potential of advanced image processing techniques in space science.