In September, after several months of evaluating the market, Honda Xcelerator Ventures, the automaker’s startup investment subsidiary, made a major investment in California-based silicon photonics startup SiLC Technologies, Inc., to develop next-generation Frequency-Modulated Continuous Wave (FMCW) LiDAR for “all types of mobility.”

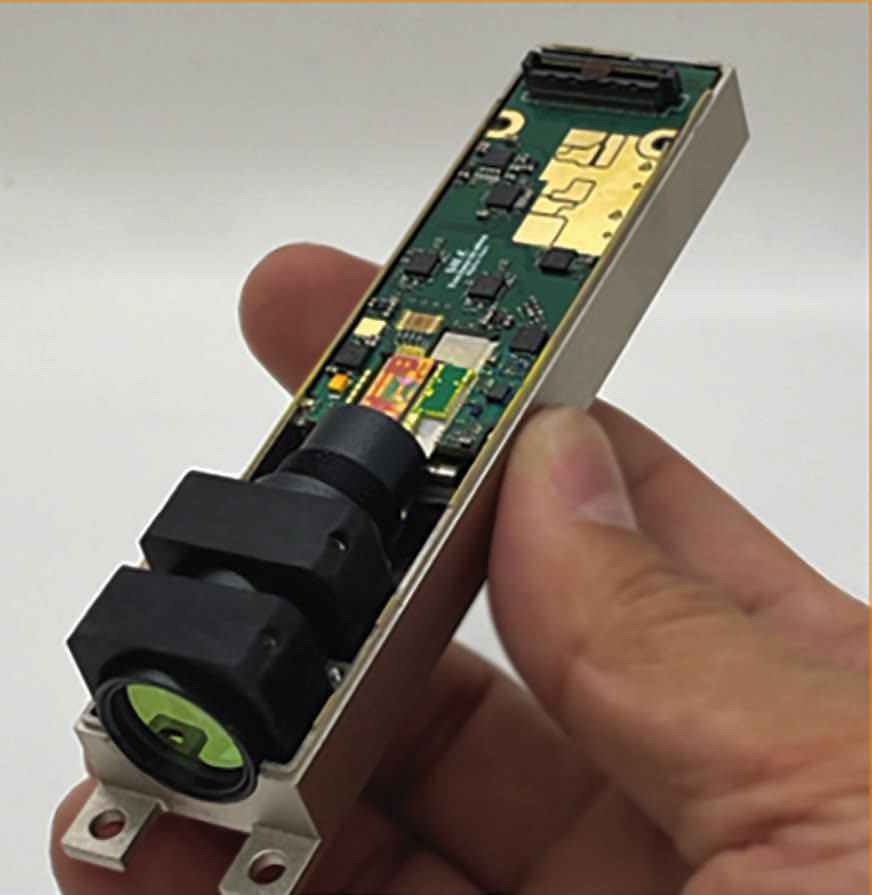

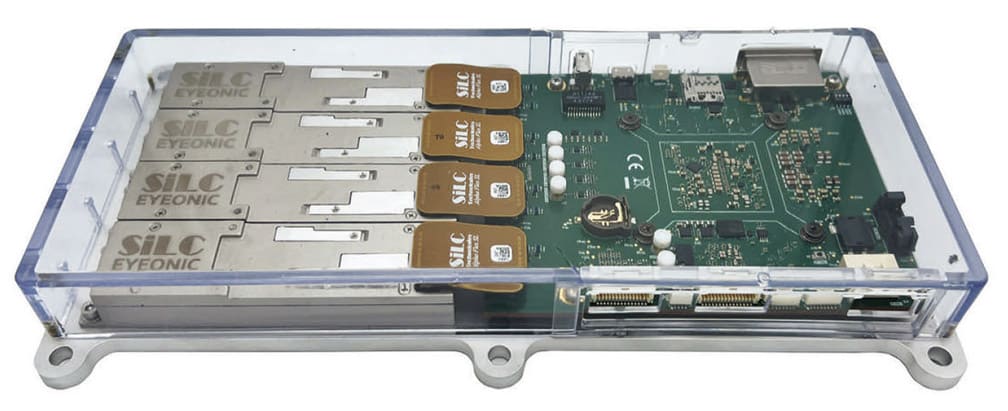

SiLC was founded in 2018, but the silicon photonics design and manufacturing experience of its executive team dates back several decades. The company also owns a proprietary silicon-based semiconductor fabrication process that has already yielded several new LiDAR products over the last two years, including the Eyeonic Vision System Sensor and the Eyeonic Vision System Mini unveiled earlier this year.

SiLC describes FMCW LiDAR as a type of LiDAR that uses frequency-modulated laser light to measure both the distance and the velocity of objects. A case study recently published by Advanced Micro Devices (AMD) describes how SiLC’s Eyeonic vision sensor is built on the AMD Zynq UltraScale+ RFSoC, which provides the analog-to-digital converters (ADCs) needed to support up to four Eyeonic sensors. The RFSoC also provides the front-end circuits the sensor needs to drive its lasers, detect the analog photonic signals and convert them to digital.
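To make the description above concrete, here is a minimal Python sketch of one common way an FMCW system can recover both quantities, assuming a triangular (up-then-down) chirp. The beat frequency measured on each ramp mixes a range term and a Doppler term; averaging the two beats isolates range and differencing them isolates velocity. The chirp bandwidth, chirp duration and 1550 nm wavelength are illustrative assumptions, not SiLC’s parameters, and a real sensor extracts the beat tones from digitized photodetector samples rather than receiving them as inputs.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def beat_frequencies(range_m, velocity_mps, bandwidth_hz, chirp_s, wavelength_m):
    """Forward model: beat tones for a target at range_m approaching at velocity_mps."""
    slope = bandwidth_hz / chirp_s                  # chirp rate in Hz/s
    f_range = 2.0 * range_m * slope / C             # beat caused by round-trip delay
    f_doppler = 2.0 * velocity_mps / wavelength_m   # Doppler shift (positive = approaching)
    # An approaching target raises the echo frequency: the up-chirp beat
    # shrinks by f_doppler and the down-chirp beat grows by f_doppler.
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up, f_down, bandwidth_hz, chirp_s, wavelength_m):
    """Inverse model: recover range and radial velocity from the two beat tones."""
    slope = bandwidth_hz / chirp_s
    f_range = (f_up + f_down) / 2.0     # Doppler terms cancel in the average
    f_doppler = (f_down - f_up) / 2.0   # range terms cancel in the difference
    return C * f_range / (2.0 * slope), wavelength_m * f_doppler / 2.0


# Hypothetical parameters: 1 GHz chirp over 10 us, 1550 nm laser.
f_up, f_down = beat_frequencies(200.0, 10.0, 1e9, 10e-6, 1550e-9)
r, v = range_and_velocity(f_up, f_down, 1e9, 10e-6, 1550e-9)
print(f"range = {r:.1f} m, velocity = {v:.1f} m/s")
```

The sketch assumes the range beat is larger than the Doppler shift so both tones stay positive; a production system also has to resolve that ambiguity and separate multiple targets in the same beam.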

In a Sept. 10, 2024, press release announcing Honda’s new investment in SiLC, Manabu Ozawa, Managing Executive Officer, Honda Motor Co., describes SiLC as an “industry leader” in the research and development of FMCW LiDAR. “Honda is striving for zero traffic collision fatalities involving our motorcycles and automobiles globally by 2050. We believe that SiLC’s advanced LiDAR technology will become an important technology for us,” Ozawa said.

Shortly after the Honda investment announcement, Photonics & Imaging Technology (P&IT) caught up with Dr. Mehdi Asghari, SiLC Technologies CEO, to discuss the company’s approach to designing and manufacturing FMCW LiDAR technology.

P&IT: SiLC was first established in 2018. What has been the company’s approach to manufacturing and fabricating FMCW LiDAR technology that has allowed it to achieve the type of growth, expansion and partnerships you’re seeing six years later?

Mehdi Asghari: Our key competency is silicon photonics: integrating optics within a silicon chip using CMOS manufacturing processes to make optics that are cheap, manufacturable and scalable. The founders of the company have been doing this for about 30 years. I was the vice president of engineering at Bookham, the first company ever to do silicon photonics commercially. When I started SiLC in 2018, we decided to focus on imaging, which is really an analog sensing application, and analog sensing is the heritage of our team’s core competencies. We decided that any IP-sensitive activity should be done in the U.S. or an allied country, so most of our manufacturing is done in our own facility. The only thing we don’t do at SiLC is wafer fabrication.

However, at our previous companies, from Bookham to Kotura to Mellanox, we always had a fab. So through those connections, I hired a fabrication partner; we have our own fab experts, and we define the fab process. Our contract does not allow our fab partner to make silicon photonics for anyone else. The process is dedicated to SiLC: we own the process design kit (PDK) and the process, which is not available at any other fab. There is no fab on the planet that can produce FMCW LiDAR products using our process.

P&IT: Why is the single-chip design of your FMCW LiDAR ideal for the mobility applications that Honda is eyeing with their investment?

Asghari: SiLC has a unique photonic integration platform. In addition to manufacturing volume and scalability, the design of our platform is ideal for the type of complexity that comes with future mobility applications like autonomous vehicles. We’re effectively the only company that is able to place all of the photonic components necessary for an autonomous application on a single chip. If you’re able to do that on a single chip, regardless of how many channels you require, you can scale. In contrast, if you have many chips or a multi-chip system architecture, you have to worry about coupling them together with all the optics and lenses, and then it becomes difficult to scale.

That is one of the main reasons why Honda invested in us. They spent months investigating and even testing the platforms of different companies and they did their due diligence. That led to their realization that we have the only design and integration platform that allows you to place all of the complexity necessary for FMCW LiDAR on one chip and therefore achieve scale.

P&IT: In announcing the new investment from Honda, SiLC’s website notes that current radar and 2D/3D vision systems struggle to detect dark objects, such as tires, at distances of 200 meters or more. How are you achieving the performance Honda is seeking on a single chip?

Asghari: One of the key things the automotive industry looks for is the ability to see a piece of tire at long distances. Honda told us that no LiDAR company in the industry could see a piece of tire at a distance of 200 meters. We were the only company with performance good enough to see a tire on the road, or a pedestrian wearing black clothing 300 meters away, or other similar scenarios.

When providing LiDAR for an autonomous vehicle, you want to be able to detect a child behind a parked car who is about to jump in front of you to retrieve a ball, and other random scenarios like that. You want to see them 300 meters away so you have enough time to stop the car. So we had the ability to deliver the performance, but also the complexity needed at scale, so that they could manufacture the product cost-effectively. Automotive OEMs have highly precise specifications on volume, quality qualification and pricing. We demonstrated to Honda that we can deliver on all the key metrics they were looking for.
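The 300-meter figure is easiest to appreciate as a stopping-distance budget. The minimal sketch below adds the distance a car covers during its detection and reaction delay to the braking distance v^2 / (2a). The speed, delay and deceleration values are hypothetical round numbers chosen for illustration, not Honda or SiLC specifications.

```python
def stopping_distance(speed_mps, reaction_s, decel_mps2):
    """Distance covered during the reaction/processing delay plus braking to a stop."""
    # Constant speed while the system detects and decides, then
    # constant deceleration until the car stops: v^2 / (2a).
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


# Hypothetical scenario: 130 km/h, 1.0 s end-to-end latency, about 0.6 g of braking.
speed = 130 / 3.6
needed = stopping_distance(speed, 1.0, 6.0)
for detection_range in (100.0, 200.0, 300.0):
    margin = detection_range - needed
    print(f"detect at {detection_range:.0f} m -> margin {margin:+.1f} m")
```

With these assumed numbers the car needs roughly 145 m to stop, so a 100 m detection range leaves no margin, while 300 m leaves room for slower reactions, wet roads or heavier vehicles.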

P&IT: How does the design of FMCW LiDAR overcome some of the environmental challenges that time-of-flight LiDAR can be susceptible to?

Asghari: While there are time-of-flight LiDARs that perform exceptionally well, many of them are susceptible to cross-talk and interference from other LiDAR systems, or even from reflections or certain lighting in the environment they operate in. FMCW LiDAR is coherent, which makes it resistant to background radiation and environmental changes. It could be a sunny day or a pitch-black night, and it does not matter. FMCW LiDAR performs equally well under all weather and lighting conditions. You also do not have to go through perception processing for it to detect an object and understand its motion. The perception of motion, the detection of the object and image recognition are instantaneous. If an object or person is moving, FMCW LiDAR captures that motion instantly.

Every pixel within an image has a velocity vector associated with it. So if there is a child behind a parked car, the FMCW LiDAR sees it immediately; there’s no delay waiting for frames to be processed. That can save milliseconds of precious time and provide the type of motion detection and instant object recognition that an autonomous navigation system or application needs.
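The per-pixel velocity point can be illustrated with a small sketch. Because each FMCW return carries a measured radial velocity (the component of motion along the beam), a single frame is enough to separate moving returns from static ones, whereas a time-of-flight sensor has to associate the same object across at least two frames and difference the ranges. The `Point` type, the 0.5 m/s threshold and the sample values are hypothetical, and the sketch assumes a stationary sensor or returns that have already been corrected for the vehicle’s own motion.

```python
from dataclasses import dataclass


@dataclass
class Point:
    """One LiDAR return; radial_velocity_mps is measured directly only by FMCW."""
    range_m: float
    radial_velocity_mps: float  # positive = approaching the sensor


def moving_points(frame, threshold_mps=0.5):
    """Flag moving returns from a single FMCW frame; no previous frame is needed."""
    return [p for p in frame if abs(p.radial_velocity_mps) > threshold_mps]


def tof_velocity(range_prev_m, range_curr_m, frame_period_s):
    """Time-of-flight estimate: needs the same object matched across two frames."""
    return (range_prev_m - range_curr_m) / frame_period_s


# Hypothetical frame: two static returns and one approaching at 2.3 m/s.
frame = [Point(42.0, 0.0), Point(18.5, 2.3), Point(60.2, 0.1)]
print(moving_points(frame))
# Hypothetical 10 Hz time-of-flight sensor: velocity only after a second frame.
print(tof_velocity(18.73, 18.50, 0.1))
```

At a hypothetical 10 Hz frame rate, waiting for that second frame alone costs 100 ms before any velocity estimate exists, before counting the processing needed to match points between frames.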

P&IT: Focusing on autonomous vehicles, what are some of the components, systems or technologies that need to be further developed or improved to enable the use of FMCW LiDAR in future autonomous vehicles? Are you working with any partners on digital processing, scanning or other enablers of autonomous navigation?

Asghari: When we consider the components that enable an FMCW LiDAR, there are three that are essential. The first is the photonics, which we’re the masters of. The second is digital processing. We design and build some of the electronics that interface with the optics in-house; for example, we design and build all of our own analog integrated circuits. However, the digital processing itself is something we allow our partner AMD to manage. AMD has been a great supporter of our operations, especially by providing customization and through their system-on-chip (SoC) architecture. Most developers think of FPGAs as power hungry and expensive. The SoC that AMD provides combines a DSP, a processor and an FPGA in one chip. The hardware is no longer overly expensive and power hungry, and they also provide additional processing cores as necessary. We can write all the programs in C and do all the processing we need in a flexible, easy way.

The third major component of FMCW LiDAR is scanning, that is, steering the beam in different directions. That is an area where we have a lot of development work happening at SiLC, and we are also developing an ecosystem of developers around us. We’re currently working with several other scanning suppliers, creating ecosystems where we can provide reference designs and collaborate.

In the future, we anticipate scanning will also be integrated into the SoC design, with all of the scanning occurring on chip.
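Whatever the scanning hardware, its job is to point the beam in a sequence of directions; pairing each direction with the measured range yields a 3D point cloud. The minimal sketch below shows that bookkeeping for a simple raster pattern. The axis convention (x forward, y left, z up), the 5 x 3 grid and the fixed 100 m range are hypothetical choices for illustration, not a description of SiLC’s scanners or reference designs.

```python
import math


def to_cartesian(range_m, azimuth_deg, elevation_deg):
    """Convert one beam direction plus measured range to x (forward), y (left), z (up)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the horizontal plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def scan_grid(azimuths_deg, elevations_deg):
    """Raster order in which a hypothetical scanner visits beam directions, row by row."""
    return [(az, el) for el in elevations_deg for az in azimuths_deg]


# Hypothetical 5 x 3 scan pattern with every return measured at 100 m.
directions = scan_grid([-10, -5, 0, 5, 10], [-2, 0, 2])
cloud = [to_cartesian(100.0, az, el) for az, el in directions]
print(len(cloud), cloud[7])
```

Keeping the direction sequence separate from the range measurement mirrors the split described in the interview: the scanner decides where the beam points, and the photonic chip measures what it finds there.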

This article was written by Woodrow Bellamy III, Senior Editor, SAE Media Group (New York, NY).