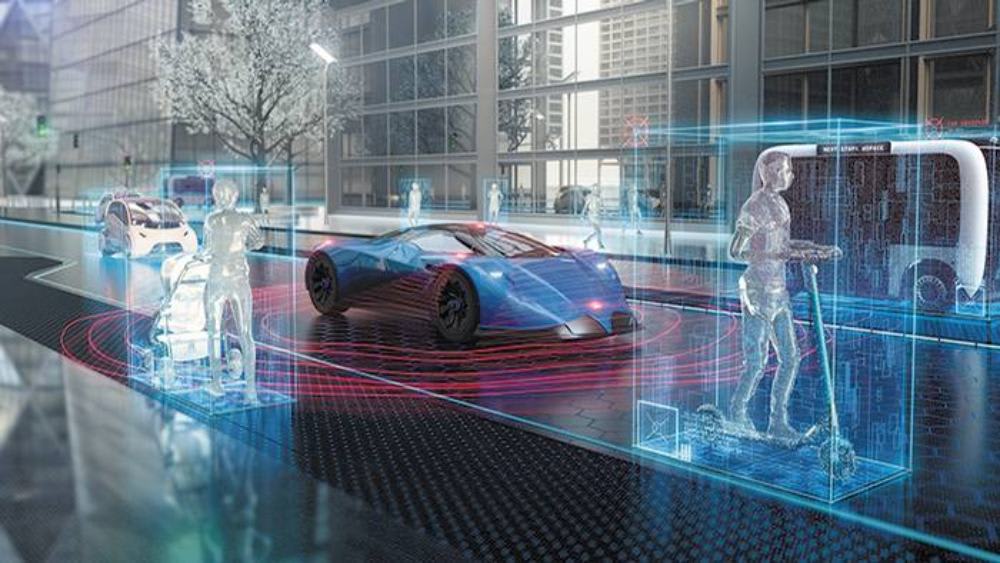

An autonomous vehicle needs to be trained to recognize its surroundings.

That calls for Data. And lots of it, as a self-driving car "learns" about its environment.

The need to recognize nearby cyclists and pedestrians, however, means that automotive engineers potentially have access to petabytes of faces and license plates.

How is personal information protected on an autonomous car's data-collection course?

In a live Tech Briefs presentation this week titled Data-Driven Development and Breakthroughs for New Mobility Technologies, a reader had a question for the co-founder of a company that supplies training and validation data for autonomous vehicles:

"What data privacy safeguards need to be integrated as data increases within automated vehicles?

Recently, in 2018, the E.U. discussed modern vehicles as a mobile device, and how that data belongs to the vehicle owners. If data-privacy regulations, like the E.U.'s General Data Protection Regulations , requires client consent for data use, how can this fit with modern A.I. vehicles?"

Read the edited response from Philip Kessler, CTO of understand.ai, based in San Jose, CA.

Philip Kessler: First, we probably need to differentiate between two types of personal data. The first type is the data which is recorded and collected when you’re using the car by yourself — for example, data like the kind of music you’re listening to, so you can receive smart recommendations like what you get on Spotify and Pandora.

The second type of data is if you're an external party, like a pedestrian walking outside or a cyclist riding your bicycle outside of a car. This data collection is from an autonomous car, driving around you and collecting data, recording your face and where you’re going.

Users normally deal with the first type of data, just like the terms and conditions you have when you're using an app or a website. This is the easier case.

The more challenging case is for all the people who are outside of the vehicle who are getting recorded. This was a big challenge last year and in 2018 for most of our customers. Some companies only recorded data at night, which is very interesting or even almost funny, to prevent recording recognizable faces of pedestrians. Driving at night is obviously not scalable.

By now, there are kind of two approaches, which we see in industry. First, the better option: Companies are anonymizing the data. They are using deep-learning algorithms, and they have software available to anonymize faces and license plates, to make the data GDPR compliant. The faces and license plates are automatically removed, so the personal data cannot be identified anymore.

Obviously, you don’t have to throw away the face and license-plate information for your deep-learning training. You still need that raw, un-anonymized data for training the algorithm, but you just have to make sure that a human engineer or A.I. engineer sees the anonymized data where the faces and licenses are blurred or pixelated.

The second option: Other companies are basically printing an email address or phone number on the data-collection course. You can call them and ask for your deletion, which is a very challenging process, because it's very hard to find pedestrians in the petabytes of data. Normally, they'll just delete the whole data set for the entire day, which is not very scalable. We think the better approach to comply with regulations and privacy is to anonymize the data and remove personal information from the recordings.

What do you think about Data Security Concerns with Self-Driving Cars?

Share your questions and comments below.