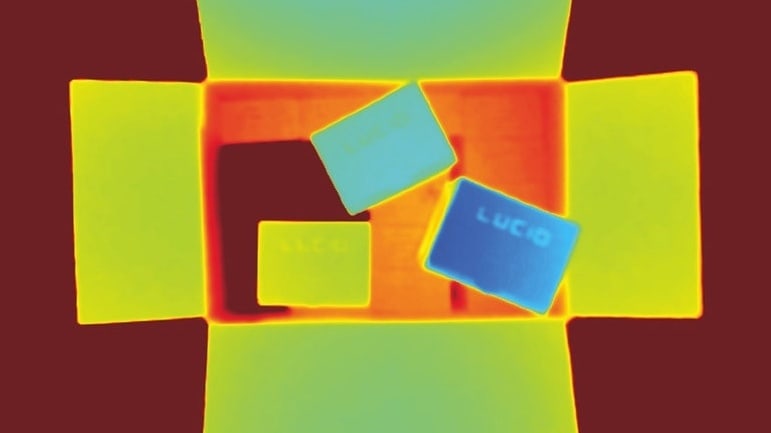

A $500 nano-camera that can operate at the speed of light has been developed by researchers in the MIT Media Lab. The three-dimensional camera could be used in medical imaging and in collision-avoidance detectors for cars, and to improve the accuracy of motion-tracking and gesture-recognition devices used in interactive gaming.

The camera is based on “time of flight” technology like that used in Microsoft’s recently launched second-generation Kinect device, in which the location of an object is calculated from how long it takes a light signal to reflect off its surface and return to the sensor. However, unlike existing devices based on this technology, the new camera is not fooled by rain, fog, or even translucent objects.

In a conventional time-of-flight camera, a light signal is fired at a scene, where it bounces off an object and returns to strike a pixel on the sensor. Because the speed of light is known, the camera can then easily calculate how far the signal has traveled; since the light makes a round trip, the depth of the object it reflected from is half that distance. Unfortunately, changing environmental conditions, semitransparent surfaces, edges, and motion all create multiple reflections that mix with the original signal on its way back to the camera, making it difficult to determine which measurement is correct.
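To make the arithmetic concrete, here is a minimal Python sketch of the depth calculation, plus a deliberately simplified, hypothetical model of what happens when echoes mix. The function names are illustrative only, and the assumption that a pixel blends overlapping echoes into one amplitude-weighted mean arrival time is a simplification, not how any particular sensor works.

```python
# Minimal sketch: depth from one time-of-flight measurement, plus a
# deliberately simplified (hypothetical) model of multipath mixing.

SPEED_OF_LIGHT = 299_792_458.0  # metres per second, exact SI value


def depth_from_round_trip(t_seconds: float) -> float:
    """Depth in metres of a surface whose echo arrives t_seconds after
    emission. The light travels out and back, so depth is half of c * t."""
    if t_seconds < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * t_seconds / 2.0


def mixed_depth_estimate(returns: list[tuple[float, float]]) -> float:
    """Hypothetical pixel model: overlapping echoes are blended into one
    amplitude-weighted mean arrival time, which is then converted to depth.
    `returns` holds (round_trip_time_s, amplitude) pairs."""
    total = sum(amplitude for _, amplitude in returns)
    mean_t = sum(t * amplitude for t, amplitude in returns) / total
    return depth_from_round_trip(mean_t)


if __name__ == "__main__":
    # A single echo after 10 ns corresponds to a surface about 1.5 m away.
    print(depth_from_round_trip(10e-9))
    # Equal-strength echoes from a translucent sheet at 1 m and a wall at 2 m
    # blend into a reading of about 1.5 m, where there is no surface at all.
    echoes = [(2 * 1.0 / SPEED_OF_LIGHT, 0.5), (2 * 2.0 / SPEED_OF_LIGHT, 0.5)]
    print(mixed_depth_estimate(echoes))
```

The second example shows why mixed reflections are a problem: the blended reading lands between the two real surfaces rather than on either of them.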

Instead, the new device uses an encoding technique commonly used in the telecommunications industry to calculate the distance a signal has traveled. The idea is similar to existing techniques for removing blur from photographs. The new model, which the team has dubbed nanophotography, unsmears the individual optical paths, separating reflections that would otherwise arrive mixed together.
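The article does not spell out the team's reconstruction algorithm. As a rough illustration of the general telecom idea, the sketch below tags the outgoing light with a known pseudorandom code, lets two echoes overlap at the sensor, and then recovers each echo's delay and strength. It assumes a 31-chip maximum-length binary sequence, echoes that arrive at whole-chip delays, and no noise, and it swaps in plain circular cross-correlation rather than the team's actual method. All function names are hypothetical.

```python
# Minimal sketch (assumptions: a 31-chip maximum-length binary code, echoes
# at whole-chip delays, no noise). Plain circular cross-correlation stands in
# for the team's actual reconstruction, which this does not reproduce.


def m_sequence_31() -> list[int]:
    """Hypothetical helper: a 31-chip maximum-length sequence from the
    primitive polynomial x^5 + x^2 + 1, mapped from {0, 1} to {-1, +1}."""
    bits = [1, 0, 0, 0, 0]  # any non-zero seed works
    while len(bits) < 31:
        bits.append(bits[-3] ^ bits[-5])  # b[n] = b[n-3] XOR b[n-5]
    return [1 if b else -1 for b in bits]


def simulate_received(code: list[int], echoes: dict[int, float]) -> list[float]:
    """Sum of delayed, scaled copies of the periodic code, one per echo.
    `echoes` maps delay (in chips) to amplitude."""
    n = len(code)
    return [sum(a * code[(i - d) % n] for d, a in echoes.items()) for i in range(n)]


def cross_correlate(received: list[float], code: list[int]) -> list[float]:
    """Circular cross-correlation of the received signal with the code."""
    n = len(code)
    return [sum(received[i] * code[(i - lag) % n] for i in range(n)) for lag in range(n)]


def unsmear(received: list[float], code: list[int]) -> list[float]:
    """Recover one amplitude per delay. An m-sequence's periodic
    autocorrelation is n at zero lag and -1 elsewhere, so
    corr[lag] = (n + 1) * h[lag] - sum(h), and sum(corr) = sum(h)."""
    n = len(code)
    corr = cross_correlate(received, code)
    total = sum(corr)
    return [(c + total) / (n + 1) for c in corr]


if __name__ == "__main__":
    code = m_sequence_31()
    # Two overlapping echoes: a translucent surface (delay 3) and a wall behind it (delay 7).
    mixed = simulate_received(code, {3: 1.0, 7: 0.6})
    h = unsmear(mixed, code)
    print([(lag, round(a, 3)) for lag, a in enumerate(h) if abs(a) > 1e-6])
```

In this idealized setting the recovered amplitudes come back as 1.0 at delay 3 and 0.6 at delay 7, even though the two echoes reached the sensor as a single mixed signal. A maximum-length sequence is used here only because its two-valued autocorrelation makes the inversion exact in a few lines; real scenes with noise and non-integer delays need more robust methods.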

The camera probes the scene with a continuous-wave signal that oscillates with a period on the order of nanoseconds. This lets the team use inexpensive hardware (off-the-shelf light-emitting diodes, or LEDs, can strobe at nanosecond periods, for example), so the camera can reach a time resolution within one order of magnitude of femtophotography, an earlier MIT Media Lab technique capable of roughly a trillion frames per second, while costing just $500.
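The article gives neither the modulation frequency nor the demodulation scheme. The sketch below shows how a conventional continuous-wave time-of-flight pixel turns the phase delay of a nanosecond-period signal into depth. It assumes a 100 MHz sinusoidal modulation (a 10 ns period) and the common four-sample phase estimate; these are assumptions for illustration, not the team's coded method, and the function names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest depth measurable before the phase wraps: one full modulation
    period of round-trip delay, i.e. c / (2 f)."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


def phase_from_four_samples(a0: float, a1: float, a2: float, a3: float) -> float:
    """Assumed convention: a_k = offset + amp * cos(phi + k * pi / 2).
    Returns phi in [0, 2*pi)."""
    return math.atan2(a3 - a1, a0 - a2) % (2.0 * math.pi)


def depth_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Round-trip delay is phase / (2 pi f); depth is half of c times that."""
    phase = phase_rad % (2.0 * math.pi)
    return SPEED_OF_LIGHT * phase / (4.0 * math.pi * mod_freq_hz)


if __name__ == "__main__":
    f = 100e6  # assumed 100 MHz modulation, i.e. a 10 ns period
    true_depth = 0.6
    phi = 4.0 * math.pi * f * true_depth / SPEED_OF_LIGHT
    # Simulated correlation samples with an arbitrary offset of 2.0 and amplitude 1.0.
    samples = [2.0 + math.cos(phi + k * math.pi / 2) for k in range(4)]
    print(unambiguous_range(f))  # about 1.5 m
    print(depth_from_phase(phase_from_four_samples(*samples), f))  # about 0.6 m
```

The design trade-off is visible in `unambiguous_range`: raising the modulation frequency makes each radian of phase correspond to a smaller depth step, but surfaces beyond c / (2f) wrap around and alias back into that range.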