When you're testing the stability of a rocket, you can't just fire up a design.

Factors like temperature, pressure, and velocity impact a rocket's journey, and the parameters must be examined and modeled.

Even computational simulations can take weeks or months on a supercomputer, given the stakes of such a complex and explosive application.

To speed up the design process, researchers from the University of Texas at Austin are finding more efficient ways to predict, or "learn," a rocket's behavior.

The system, developed at the Oden Institute for Computational Engineering and Sciences and the Massachusetts Institute of Technology, uses "snapshots" of physics data to create reduced-order models: simulations that run very quickly and are therefore valuable for rocket builders looking to try out a variety of designs.

“In some important cases, these reduced-order models are the only means by which one can simulate a large propulsion system,” said Ramakanth Munipalli, senior aerospace research engineer in the Combustion Devices Branch at Air Force Rocket Research Lab. “This is highly desirable in today’s environment where designers are heavily constrained by cost and schedule.”

{youtube} https://www.youtube.com/watch?v=fnT__yycb34 {/youtube}

The "scientific machine learning" approach, led by Oden Institute director Karen Willcox, provides makers of rockets with a fast way to assess engine performance in a variety of operating conditions.

The snapshots, generated by an Air Force combustion simulation code known as the General Equation and Mesh Solver (GEMS), serve as the training data for the system and the reduced-order models it produces. Each snapshot represents a variety of launch parameters, including pressure, velocity, temperature, and chemical content.

While the GEMS "training" takes about 200 hours of computer processing time, the reduced-order models can now run the same simulation in seconds, and can be run in place of GEMS to issue rapid predictions.

Beyond repeating the training simulation, the models also can predict how the combustor will respond to operating conditions that were not part of the data set.

Although not perfect, says Willcox, the models do an excellent job of predicting overall dynamics, and are particularly effective at capturing the phase and amplitude of the pressure signals — key elements for making accurate engine stability predictions.

“These reduced-order models are surrogates of the expensive high-fidelity model we rely upon now,” Willcox said. “They provide answers good enough to guide engineers’ design decisions, but in a fraction of the time.”

In an edited interview via email below, Director Willcox tells Tech Briefs about her team's efforts to quantify uncertainty for a system as complex as a rocket engine.

Tech Briefs: What is your simulation method doing better than alternative rocket design software?

Karen Willcox: We are addressing computational cost. To make good design decisions, engineers need good predictions of how the engine will respond. For example, we need to be able to understand how a change in injector geometry will alter the complex flowfield inside the injector.

Tech Briefs: What models are currently available? What are the drawbacks of traditional rocket design/simulation methods?

Karen Willcox: The community has high-fidelity computational models that aid in making these predictions, but those models are so complicated that they take a very long time to simulate. A single analysis can take days or weeks on a supercomputer. For the engineer that needs to study thousands of different possible design configurations (i.e., thousands of different analysis runs), this is clearly not practical. Our reduced-order models aim to provide answers much faster — in times fast enough to be useful in the design setting — while still retaining high levels of fidelity.

Tech Briefs: What is the training data, and where is that training data coming from?

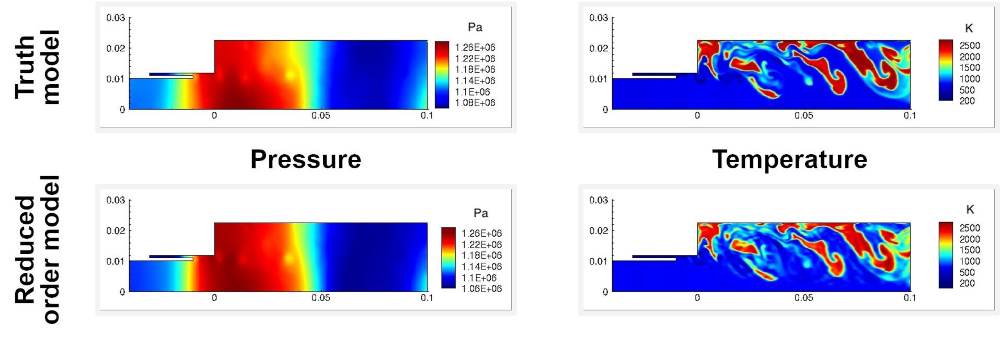

Karen Willcox: The training data are solutions coming from a high-fidelity computational model; we call them "snapshots." This high-fidelity model is the model that we trust (sometimes called the "truth model"), and our goal is to get predictions that are close to as good as this model, but solved in a small fraction of the time. In our case, the high-fidelity model is a code called GEMS that was developed by Purdue University and is used by the U.S. Air Force.

Tech Briefs: Can you take me through what a designer can learn from an example “snapshot”?

Karen Willcox: A snapshot is a glimpse of what is happening inside the engine at any point in time. A designer might look at the temperature profile to, for example, see pockets of heat release leading to regions exceeding a desired maximum temperature. Or they might look at the velocity field to see the character of the recirculation zone. Our reduced-order model can't recover all the fine details of a snapshot, but it captures enough of the general behavior to give the designer a good sense of what is going on.
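To make the temperature check described above concrete, here is a minimal sketch of how a designer might scan one snapshot for regions over a limit. The 2D grid layout, the function name `hot_spot_report`, the temperature values, and the limit are all hypothetical; the field below is synthetic and is not GEMS output.

```python
import numpy as np

def hot_spot_report(temperature, t_max):
    """Summarize where a temperature field exceeds a limit.

    temperature: 2D array of temperatures on a grid (hypothetical layout).
    t_max: the designer's maximum allowed temperature, in the same units.
    """
    mask = temperature > t_max          # True where the limit is exceeded
    fraction = float(mask.mean())       # share of grid cells over the limit
    peak = float(temperature.max())     # hottest value anywhere in the field
    flat_index = np.argmax(temperature)
    peak_index = tuple(int(i) for i in np.unravel_index(flat_index, temperature.shape))
    return {"fraction_over": fraction, "peak": peak, "peak_index": peak_index}

# Synthetic field (assumed values): uniform background with one hot pocket.
field = np.full((4, 5), 1500.0)
field[1, 2] = 2600.0
field[1, 3] = 2450.0
report = hot_spot_report(field, t_max=2400.0)
print(report)
```

The same kind of summary could be computed on a reduced-order model's reconstruction of the field, with the caveat from the interview that fine details may not be recovered.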

Tech Briefs: What is the system “learning” exactly?

Karen Willcox: The system is learning a reduced-order model. The way we formulate the problem, this amounts to learning several matrices that define a dynamical system. This dynamical system is what we solve to get our reduced-order model predictions.
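As a rough illustration of what "learning several matrices that define a dynamical system" can look like, the sketch below fits a linear model dq/dt = A q + c to time-series data by least squares and then steps the fitted model forward. The linear form, the forward-difference derivative, the Euler integrator, and every name here are assumptions chosen for brevity; the interview does not specify the form of the team's model, and the data are synthetic.

```python
import numpy as np

def fit_linear_operator(Q, dt):
    """Fit dq/dt approximately equal to A q + c from equally spaced states.

    Q: array of shape (r, k), one reduced state per column.
    dt: time step between consecutive columns.
    Returns (A, c) with shapes (r, r) and (r,).
    """
    dQ = (Q[:, 1:] - Q[:, :-1]) / dt            # forward-difference derivative
    Q0 = Q[:, :-1]                              # states paired with each derivative
    ones = np.ones((1, Q0.shape[1]))
    D = np.vstack([Q0, ones]).T                 # data matrix, shape (k-1, r+1)
    # Least-squares solve of D @ X = dQ.T; X stacks A.T on top of c.
    X, *_ = np.linalg.lstsq(D, dQ.T, rcond=None)
    return X[:-1, :].T, X[-1, :]

def simulate(A, c, q0, dt, steps):
    """Advance dq/dt = A q + c with forward Euler; returns shape (r, steps + 1)."""
    states = [np.asarray(q0, dtype=float)]
    for _ in range(steps):
        q = states[-1]
        states.append(q + dt * (A @ q + c))
    return np.array(states).T

# Synthetic "training data" from a known damped oscillator (assumed values).
A_true = np.array([[-0.1, 2.0], [-2.0, -0.1]])
c_true = np.array([0.5, -0.3])
dt = 0.01
Q_train = simulate(A_true, c_true, [1.0, 0.0], dt, 200)

A_fit, c_fit = fit_linear_operator(Q_train, dt)
# Predict from an initial condition that was not in the training data.
Q_pred = simulate(A_fit, c_fit, [0.0, 1.0], dt, 200)
Q_ref = simulate(A_true, c_true, [0.0, 1.0], dt, 200)
```

Because the training data here come from exactly the model form being fitted, the matrices are recovered almost perfectly; real combustion data would not be this forgiving.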

Tech Briefs: Is the system truly able to “learn” such complex physics?

Karen Willcox: If we were using standard machine learning (e.g., a neural network model), then my answer would be "most probably no." Standard machine learning is extremely reliant on having a large amount of training data. We don't have that; remember, we have to run an expensive model to generate training data.

What's more, even with a lot of training data, we would have no idea how a standard machine learning model would generalize — i.e., we would have no idea how good it would be at predicting conditions that weren't in the training data.

Tech Briefs: So what are you doing differently?

Karen Willcox: Two main things: We are embedding the physical governing equations, and we are exploiting the mathematical properties of projection onto a low-dimensional subspace. Without getting into details, this amounts to using the governing equations (conservation of mass, momentum, energy, and chemical balance) to identify structure, and then we reflect this structure in the form of the model we learn. The result is a method that enjoys the flexibility and ease-of-use of a non-intrusive data-driven machine learning approach together with the prediction power of a physics-based model.
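The idea of projecting onto a low-dimensional subspace can be sketched with a singular value decomposition of a snapshot matrix, a common way to build such a subspace (often called proper orthogonal decomposition). Whether the team uses exactly this construction is an assumption here; the data, the dimensions, and the function name `pod_basis` are invented for illustration.

```python
import numpy as np

def pod_basis(snapshots, r):
    """Return the r leading left singular vectors and all singular values.

    snapshots: array of shape (n, k), one flattened snapshot per column.
    """
    U, s, _ = np.linalg.svd(snapshots, full_matrices=False)
    return U[:, :r], s

# Synthetic data, not GEMS output: 500-dimensional states built from 3 spatial modes.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 500)
modes = np.stack(
    [np.sin(np.pi * x), np.sin(2 * np.pi * x), np.sin(3 * np.pi * x)], axis=1
)
coeffs = rng.standard_normal((3, 40))
S = modes @ coeffs                      # snapshot matrix, shape (500, 40)

V, singular_values = pod_basis(S, r=3)
reduced = V.T @ S                       # project: 500 numbers per snapshot become 3
reconstructed = V @ reduced             # lift back to the full space
rel_error = np.linalg.norm(S - reconstructed) / np.linalg.norm(S)
print(reduced.shape, rel_error)
```

In this toy case three basis vectors reproduce the data to rounding error because the snapshots were built from three modes. For a real engine simulation the singular values typically decay more gradually, and the number of retained modes becomes a trade-off between speed and fidelity.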

Tech Briefs: What is most exciting to you about this system?

Karen Willcox: Most exciting to me is the way that this work blends machine learning and physics-based modeling. Machine learning is so powerful and has had many high-profile successes, especially in the retail and Internet worlds. But when it comes to complex problems in engineering, we can't afford to use models for which we have no idea of the quality of predictions; the consequences of being wrong are just too high. I like to say, "Big decisions need more than just big data, they need big models too."

Of course, none of our models are perfect, but as engineers we think carefully about the uncertainties in our models and our associated confidence in the model predictions. We still have a long way to go to being able to rigorously quantify uncertainty for a system as complex as a rocket engine, but our approach that blends learning and physics is an important step to getting us there.


Transcript

00:00:00 it's such a different regime to making decisions about what you might buy next on Amazon and what movie you might want to watch next when the decision maybe relates to personalized medicine or to designing a rocket or designing an airplane let's go back now to the SIAM TV classroom I'm delighted to be joined by Professor Karen Willcox who's talking about physics based machine

00:00:24 learning Karen thanks so much for joining us it's good to be here perhaps you could tell us to start with what do we mean by predictive data science so predictive data science it's really the notion that we have a tremendous opportunity to harness the explosion of data that's happening across the world but particularly in applications across science engineering and medicine but in

00:00:44 these in these areas where the decisions are really high consequence we were making decisions where literally people's lives are at stake that a purely data-driven approach pure data science hunting the data is not enough we really also need to build in domain knowledge to harness the incredible predictive power of physics based models of governing equations of the laws of

00:01:04 nature and this is really the domain of computational science and engineering and mathematical modeling so predictive data science is the notion that we need a convergence we need to bring together these two fields the data science the data-driven perspective together with a very rich set of mathematical models and methods and computational science and engineering and that's what you're

00:01:22 demonstrating with this this part of the slide right so this is part of the sliders really showing there this is not a new idea in fact this is an idea that's been at the heart of applied mathematics and computational science and engineering for many years what's changed is that we're now in a world where there is so much more data than ever before data that we can generate by

00:01:43 simulations but also data that we can collect from our physical systems and so really one of the very exciting questions and opportunities is how do we take all these these areas from applied mathematics areas that are represented very well at the SIAM meeting here this week how do we take them bring their theory to bear on very important questions in machine learning and in

00:02:04 predictive data science but also rethink some of what we do in the face of these volumes of data and how do we do that so there are many ways and again there are many talks this week that are really showing this that's in two plane interfaces my own area of research is in projection based model reduction which is what I'll talk about tomorrow which is really the

00:02:23 idea of taking data from very large scale expensive simulations like this this example here of a rocket engine we're generating simulations of the rocket engine takes hours hundreds and hundreds of hours to generate and so using perspectives again building in the mathematical models the laws of physics but putting that together with a machine learning perspective on learning from

00:02:46 data we can build what we call reduced order models so these are much simpler models that capture their approximate but they capture just enough of the physics to be able to run those simulations much much faster and then that's of course very critical when it comes to for example making design decisions where you don't want to analyze just one rocket but you'd really

00:03:05 like to be able to study thousands of different configurations before making a decision and where do you see this field progressing in the future what's next as we see data science and machine learning come to bear on very important applications in science engineering and medicine where again those decisions are high consequence it's such a different regime to making decisions about what

00:03:28 you might buy next on Amazon and what movie you might want to watch next when the decision maybe relates to personalized medicine or to designing a rocket or designing an airplane we have to think about how to how to make those those predictions certified and then I would add a second really important piece for the future is to think about education and so so much of our

00:03:48 education is really structured around our traditional lines of applied math computer science and engineering and what we see is that something like predictive data science really sits at the interfaces it's something that needs to weave together the perspectives of physical modeling and engineering models with the theory of applied math and then the power of computer science and

00:04:07 machine learning and so thinking about how we educate students for their future and interdisciplinary programs again I think it's a really important issue well it's great to discuss all of that with you Karen thank you so much for joining us thank you