After hurricanes and other disasters, it is becoming more common for people to fly drones to record the damage and post videos on social media. Those videos are a resource for rapid damage assessment. By using artificial intelligence, researchers are developing a system that can automatically identify buildings and make an initial determination of whether they are damaged and how serious that damage might be.

Current damage assessments mostly rely on individuals detecting and documenting damage to each building, which can be slow, expensive, and labor-intensive work. Satellite imagery doesn’t provide enough detail and shows damage from only a single, vertical viewpoint. Drones, however, can gather close-up information from a number of angles and viewpoints. It’s possible, of course, for first responders to fly drones for damage assessment, but drones are now widely available among residents and are routinely flown after natural disasters.

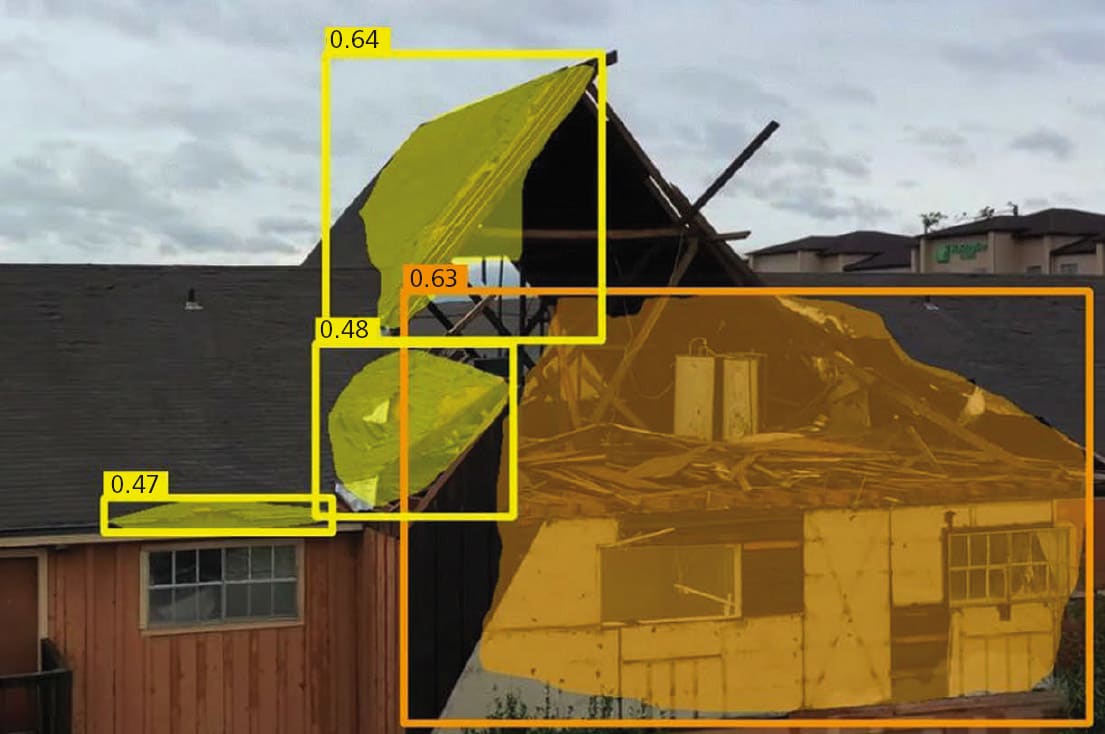

The initial system can overlay masks on parts of the buildings in the video that appear damaged and determine if the damage is slight or serious, or if the building has been destroyed. The researchers downloaded drone videos of hurricane and tornado damage in Florida, Missouri, Illinois, Texas, Alabama, and North Carolina. They then annotated the videos to identify building damage and assess the severity of the damage.
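To make the mask overlay concrete, here is a minimal sketch in plain Python of how a damage mask might be blended onto a video frame, with the color chosen by severity. It is an illustration only, not MSNet's actual code: the function name `overlay_mask`, the `SEVERITY_COLORS` table, the color choices, and the `alpha` blending weight are all assumptions made for this example. Only the three damage levels (slight, serious, destroyed) come from the description above.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255

# Hypothetical overlay colors. The three damage levels are named in the
# article; how they are encoded or displayed is an assumption made here.
SEVERITY_COLORS: Dict[str, Pixel] = {
    "slight": (255, 255, 0),
    "serious": (255, 128, 0),
    "destroyed": (255, 0, 0),
}


def overlay_mask(
    frame: List[List[Pixel]],
    mask: List[List[bool]],
    severity: str,
    alpha: float = 0.5,
) -> List[List[Pixel]]:
    """Return a copy of `frame` with the severity color blended in
    wherever `mask` is True. The input frame is not modified."""
    color = SEVERITY_COLORS[severity]
    result: List[List[Pixel]] = []
    for row_pixels, row_mask in zip(frame, mask):
        new_row: List[Pixel] = []
        for pixel, flagged in zip(row_pixels, row_mask):
            if flagged:
                # Weighted average of the original pixel and the overlay color.
                pixel = tuple(
                    round((1 - alpha) * p + alpha * c)
                    for p, c in zip(pixel, color)
                )
            new_row.append(pixel)
        result.append(new_row)
    return result


# Usage: a 1x2 gray frame whose left pixel is marked as destroyed.
frame = [[(100, 100, 100), (100, 100, 100)]]
mask = [[True, False]]
highlighted = overlay_mask(frame, mask, "destroyed", alpha=1.0)
```

With `alpha=1.0` the flagged pixel takes the overlay color outright, while smaller values leave the underlying building visible beneath the tint. A real system would produce the mask and the severity label from a trained model rather than take them as inputs.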

The resulting dataset, the first to use drone videos to assess building damage from natural disasters, was used to train the AI system, called MSNet, to recognize building damage. The dataset is available to other research groups via GitHub.

The videos don’t yet include GPS coordinates, but the researchers are working on a geolocation scheme that would enable users to quickly identify where the damaged buildings are. This would require training the system using images from Google Street View. MSNet could then match the location cues learned from Street View to features in the video.

For more information, contact Byron Spice at