DARPA’s Mind’s Eye Program aims to develop a smart camera surveillance system that can autonomously monitor a scene and report back human-readable text descriptions of activities that occur in the video. An important aspect is whether objects are brought into the scene, exchanged between persons, left behind, picked up, etc. While some objects can be detected with an object-specific recognizer, many others are not well suited for this type of approach. For example, a carried object may be too small relative to the resolution of the camera to be easily identifiable, or an unusual object, such as an improvised explosive device, may be too rare or unique in its appearance to have a dedicated recognizer. Hence, a generic object detection capability, which can locate objects without a specific model of what to look for, is used. This approach can detect objects even when partially occluded or overlapping with humans in the scene.

This work was done by Michael C. Burl, Russell L. Knight, and Kimberly K. Furuya of Caltech for NASA’s Jet Propulsion Laboratory.

The software used in this innovation is available for commercial licensing. Please contact Dan Broderick at

This Brief includes a Technical Support Package (TSP).

Detection of Carried and Dropped Objects in Surveillance Video

(reference NPO48851) is currently available for download from the TSP library.

Don't have an account?

Overview

The document outlines a technical support package from NASA's Jet Propulsion Laboratory (JPL) detailing a software prototype developed for detecting carried and dropped objects in surveillance video. This research was part of the DARPA Mind’s Eye Program, which aims to create systems that autonomously monitor video inputs and generate human-readable descriptions of activities.

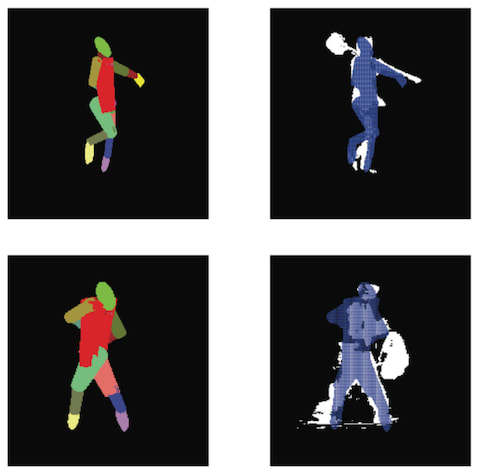

The primary challenge addressed by this prototype is the detection of objects carried by humans, which can be difficult due to their often partial occlusion, variability in appearance, and the fact that they may be too small relative to the sensor's resolution. Unlike well-defined object classes such as faces or vehicles, carried objects can vary widely, making traditional recognition methods inadequate. To tackle this, the researchers developed a generic object detection capability that focuses on segmenting foreground pixels from the background in video footage.

The methodology involves using human detection and pose estimation to create predicted silhouettes of individuals in the scene. By comparing these silhouettes with the identified foreground regions, the system can identify potential carried or dropped objects. The document describes the use of a connected components algorithm to analyze the foreground pixels after masking out the projected human silhouettes. This process helps in identifying bounding boxes around potential objects, although it notes that some filtering could improve accuracy by removing small or spurious detections.

The document also highlights the importance of refining the detection algorithm. Suggestions for improvement include incorporating a silhouette term into the optimization process for adjusting joint angles, which could enhance the accuracy of the 2D skeleton used in the detection process. Additionally, leveraging temporal information through tracking could help reduce false positives.

In conclusion, this memo presents a significant advancement in the field of object detection within surveillance video, emphasizing the need for robust methods to identify a broad range of objects that are not easily recognized by conventional means. The research conducted at JPL underlines the potential for this technology to be applied in various contexts, enhancing surveillance capabilities and contributing to safety and security measures.