Many technologies are available to quantify the motion of humans and animals. Recent innovations in optical motion capture systems have added unprecedented capabilities, allowing the same program to be used by clinicians to assess the walking deficiencies of their patients (gait analysis); by movie animators to quantify the subtle motions of groups of actors; by engineers to evaluate how workers interact with machinery; and by researchers to study the locomotion of animals and better design walking and flying robots.

Capturing Motion

Vicon’s motion capture systems use specialized software and proprietary high-speed, high-resolution cameras to determine the three-dimensional coordinates of moving markers. These markers can be discs, hemispheres, or spheres covered with retro-reflective coatings. Infrared LEDs ringing the cameras make the markers contrast with the background, permitting the system to detect their positions in real time. A typical installation places four or more cameras around the space where the action is to take place. From one to hundreds of retro-reflective markers are attached to the subject at the locations to be tracked, and their positions are computed to subpixel accuracy within each camera. The cameras then transmit their data to the host computer via gigabit Ethernet, giving real-time access to data from all cameras. From these coordinates, triangulation algorithms compute the 3-D positions of every visible marker. Sophisticated template-matching routines then create frame-to-frame marker paths over time and solve occlusion problems.
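The triangulation step can be pictured with a minimal Python sketch. It is not Vicon's algorithm, which the article does not describe; it assumes each camera has already been calibrated so that a marker's image position can be turned into a ray (an origin and a direction) in room coordinates, and it uses only two cameras. The function name `triangulate_two_rays` and the camera positions are hypothetical.

```python
def triangulate_two_rays(o1, d1, o2, d2):
    """Estimate a marker position as the midpoint of the shortest
    segment joining two camera rays (origin o, direction d)."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    w = [p - q for p, q in zip(o1, o2)]          # vector between ray origins
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the marker cannot be located")

    s = (b * e - c * d) / denom                  # parameter along ray 1
    t = (a * e - b * d) / denom                  # parameter along ray 2
    p1 = [o + s * k for o, k in zip(o1, d1)]     # closest point on ray 1
    p2 = [o + t * k for o, k in zip(o2, d2)]     # closest point on ray 2
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Two hypothetical cameras 2 m apart, both sighting a marker at (1, 1, 1).
marker = triangulate_two_rays(
    [0.0, 0.0, 0.0], [1.0, 1.0, 1.0],
    [2.0, 0.0, 0.0], [-1.0, 1.0, 1.0],
)
print(marker)  # [1.0, 1.0, 1.0]
```

With real, noisy data the two rays rarely intersect exactly, which is why the midpoint of their closest approach is used here. Systems with four or more cameras would typically combine all available rays in a least-squares sense instead.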

Vicon’s Nexus software can work in real time, permitting immediate visualization of data, or in offline mode, recording the movement for later analysis. It has been designed to display data as they are collected: 3-D marker data, analog data, and reference video images, as well as the current system status. The operator can view reconstructed data in a 3-D workspace as the motion occurs, or view stored motions for review purposes. With Nexus, the operator configures separate windows to view video, Cartesian graphs, and animated figures in real time. Within this single software platform, slow or fast motions like head vibration, ingress-egress, airbag deployment, and seated operator task analysis can be examined live.

Nexus is designed to be straightforward to integrate into both large and small environments. The modular design of the components gives it a completely scalable architecture, permitting more capabilities to be added as needed. For example, an optical capture system with three cameras has been used to track the limb movements of fetal rats to study the development of their neural connections and reflexes under the influence of various drugs. The rat pups are roughly the size of a small jellybean and the markers used are 0.5 mm in diameter. At the other end of the scale, engineers developing artificial limbs typically use eight cameras when analyzing walking gait, but will use up to 20 cameras for assessing the performance of their prostheses during cutting maneuvers or walking over irregular and inclined surfaces.

Configuration & Analysis

The combination of flexible camera layouts and simple calibration procedures enables the Nexus software to address multiple applications. Since the markers are small and do not require cumbersome wires or batteries, the collection setup is quick and efficient. Consequently, the Nexus system provides the ease of use and accuracy that make it an effective technology for both biomedical and motion analysis.

Nexus requires periodic calibration to precisely determine where the cameras are positioned in space. Fortunately, the software permits this procedure to be simple and fast. A wand having reflective spheres set at known distances is waved throughout the movement space. Nexus then computes the camera positions, orientations, and lens parameters. Other software components supply methods to set up calculations from the marker coordinates, which can include simple angles, velocities, and accelerations. Vicon’s BodyBuilder module provides for more complex, full six-degree-of-freedom calculations such as mechanical energies, angular momentum, joint moments, and joint powers.
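As a rough illustration of the simpler calculations mentioned above, the following Python sketch derives a joint angle from three marker positions and a velocity from successive frames. It is not part of Nexus or BodyBuilder; the function names, the marker layout, and the central-difference scheme are assumptions chosen for clarity.

```python
import math


def joint_angle_deg(a, b, c):
    """Angle in degrees at marker b, formed by the segments b->a and b->c
    (for example hip, knee, and ankle markers giving a knee angle)."""
    u = [p - q for p, q in zip(a, b)]
    v = [p - q for p, q in zip(c, b)]
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("markers coincide; the angle is undefined")
    cos_angle = sum(x * y for x, y in zip(u, v)) / (norm_u * norm_v)
    cos_angle = max(-1.0, min(1.0, cos_angle))   # guard against rounding
    return math.degrees(math.acos(cos_angle))


def central_difference(samples, rate_hz):
    """Per-axis rate of change at each interior frame of a trajectory
    sampled at rate_hz. Applied to positions it yields velocities;
    applied again to those velocities it yields accelerations."""
    dt = 1.0 / rate_hz
    return [
        [(nxt - prv) / (2 * dt) for prv, nxt in zip(samples[i - 1], samples[i + 1])]
        for i in range(1, len(samples) - 1)
    ]


# Hypothetical hip, knee, and ankle marker positions in metres.
print(joint_angle_deg((0.0, 0.0, 0.9), (0.0, 0.0, 0.5), (0.1, 0.0, 0.1)))

# A marker moving along x, captured at 100 frames per second.
path = [[0.00, 0.0, 1.0], [0.01, 0.0, 1.0], [0.02, 0.0, 1.0]]
print(central_difference(path, 100.0))
```

Differentiating measured positions amplifies noise, so in practice marker trajectories are usually smoothed before velocities and accelerations are computed.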

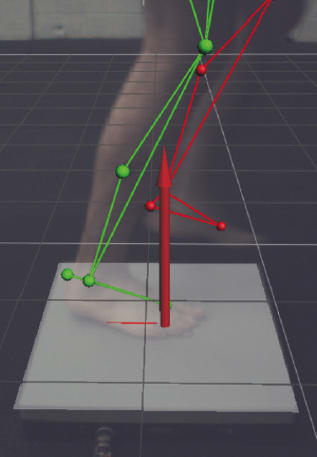

Vicon’s imagers come in resolutions of 0.3, 1.3, 2.0, and 4.0 megapixels to suit various capture environments, with capture speeds up to 1,000 fps. This flexibility permits matching the camera speed and resolution to each application. The Vicon technology provides in-camera 10-bit grayscale processing instead of standard thresholding. With this additional information the cameras can circle-fit markers more precisely, improving overall system accuracy beyond the already accurate measurements for which optical systems are noted. The combination of illumination, cameras, and calibration and grayscale algorithms results in accuracy of 0.01 mm or better and precision exceeding 10 microns. Nexus also integrates digital reference video. When a video camera is connected to the PC, it is automatically recognized and is immediately available for capturing video. In addition, 3-D information can be overlaid on the video image; for example, the force plate ground reaction force vector or the calculated 3-D marker positions. This display is especially useful with patients for viewing non-kinematic variables (e.g., forces and pressures) along with their video.
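To see why grayscale information helps locate a marker more precisely than thresholding, consider the Python sketch below. It does not reproduce Vicon's in-camera circle fitting, whose details the article does not give. Instead it compares two simpler estimators on a made-up strip of 10-bit pixel values: a centroid of thresholded (on/off) pixels and an intensity-weighted centroid. All names and pixel values are illustrative assumptions.

```python
def binary_centroid(image, threshold):
    """Centroid of all pixels above threshold, each counted equally,
    as a plain thresholding camera would see the marker."""
    points = [
        (x, y)
        for y, row in enumerate(image)
        for x, value in enumerate(row)
        if value > threshold
    ]
    if not points:
        raise ValueError("no pixel exceeds the threshold")
    n = len(points)
    return sum(p[0] for p in points) / n, sum(p[1] for p in points) / n


def weighted_centroid(image, threshold=0):
    """Centroid of pixels above threshold, weighted by gray level, so that
    partially lit edge pixels shift the estimate by a fraction of a pixel."""
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value > threshold:
                total += value
                sum_x += x * value
                sum_y += y * value
    if total == 0:
        raise ValueError("no pixel exceeds the threshold")
    return sum_x / total, sum_y / total


# One image row crossing a marker; 10-bit values range from 0 to 1023.
strip = [[0, 200, 1000, 600, 0]]
print(binary_centroid(strip, threshold=100))    # x falls on a whole pixel
print(weighted_centroid(strip, threshold=100))  # x shifts toward the brighter edge
```

The thresholded estimate treats the three lit pixels alike, whereas the weighted estimate moves toward the brighter side of the marker image, which is the kind of subpixel information that thresholding discards.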

Many applications require real-time feedback with minimal latency. Clinicians use real-time feedback with stroke patients to help them regain their walking gait more rapidly. The feedback indicates whether certain body positions and angles have been attained and creates a digital 3-D mirror image for viewing. For these treatments, patients often walk on a treadmill while harnessed to an overhead gantry. Nexus will automatically generate verbal instructions or encouragement depending upon how the clinician sets up the feedback criteria. In addition, the display can be set up so that patients can view their movements as a 3-D animation with the body parts of interest highlighted to encourage appropriate focus. A distinctive benefit is that the objectivity of the feedback permits the clinician to concentrate on the patient and not the quality of the activity. Typically, Nexus can acquire the 3-D marker positions and apply biomechanical model calculations with a latency of 2 ms.

Three-dimensional coordinate data are often not sufficient to provide a complete assessment of the motion. Consequently, motion capture software can synchronously gather data from third-party devices including force plates, pressure sensors, data gloves, eye trackers, electromyography (EMG), and other analog or digital devices. For example, motion capture cameras and force platforms are often used together to study the walking gait of children with cerebral palsy. Using an inverse dynamics process with these data, equations can be developed to compute the forces, moments, and powers in the ankle, knee, and hip joints. Physicians use this information to determine the appropriate action, whether surgical or bracing, to correct the gait malady.
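A heavily simplified Python sketch can suggest how marker and force plate data combine in such a calculation. It is planar and quasi-static: it ignores the mass, inertia, and acceleration of the foot, so it is only the first term of a full inverse dynamics solution, not the method used by any particular software. The function names, coordinates, and force values are hypothetical.

```python
def grf_moment_about_joint(joint_xy, cop_xy, force_xy):
    """Moment (N·m, counterclockwise positive) of the ground reaction
    force about a joint centre, in a 2-D sagittal plane.

    joint_xy: joint centre from marker data, in metres
    cop_xy:   centre of pressure from the force plate, in metres
    force_xy: ground reaction force from the force plate, in newtons
    """
    rx = cop_xy[0] - joint_xy[0]
    ry = cop_xy[1] - joint_xy[1]
    return rx * force_xy[1] - ry * force_xy[0]   # z component of r x F


def quasi_static_joint_moment(joint_xy, cop_xy, force_xy):
    """Net joint moment needed to balance the ground reaction force,
    assuming a massless, non-accelerating foot."""
    return -grf_moment_about_joint(joint_xy, cop_xy, force_xy)


# Ankle centre 8 cm above the floor; centre of pressure 10 cm ahead of it;
# a purely vertical 700 N ground reaction force.
ankle = (0.0, 0.08)
centre_of_pressure = (0.10, 0.0)
ground_reaction = (0.0, 700.0)
print(quasi_static_joint_moment(ankle, centre_of_pressure, ground_reaction))
```

A complete analysis would add the weight and inertial terms of each segment and work upward from the ankle to the knee and hip, carrying each joint's reaction force and moment into the next segment.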

Utilization

One research project aims to develop prosthetic arms that can be controlled directly from the brain. Monkeys have been trained to perform various reaching tasks. Markers are placed on the monkey’s hand and arm and are tracked in real time. Simultaneously, cells in the monkey’s motor cortex are sampled and software determines whether there is a correlation between a specific motion and the neural signal. Cells that correlate are then used to specify the joint positions of a robotic arm. This combination of real-time cortical activity data and 3-D position data has allowed researchers to learn how a human brain may be equipped to operate a prosthetic limb.
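The article does not say how the correlation between motion and neural signal is measured. One common choice, shown in the Python sketch below purely as an assumption, is the Pearson correlation coefficient between a cell's firing rate and a motion variable such as hand speed. The function name and the sample values are invented for illustration and are not research data.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length, at least 2 samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant series has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


# Invented values: hand speed (m/s) from marker data and the firing rate
# (spikes/s) of one cell, sampled over the same time windows.
hand_speed = [0.05, 0.20, 0.45, 0.60, 0.30, 0.10]
firing_rate = [8.0, 15.0, 31.0, 38.0, 22.0, 11.0]

r = pearson_r(hand_speed, firing_rate)
print(f"r = {r:.3f}")
```

A cell whose coefficient is close to 1 or -1 for a given motion would be a candidate for driving the corresponding joint. How high the coefficient must be is a choice left to the researcher.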

This article was written by Douglas Reinke, Vice President – Life Sciences at Vicon, and Gerald Scheirman, Product Manager at Vicon. For more information, contact Mr. Reinke at