I interviewed Tom Doyle, CEO and founder of Aspinity (Pittsburgh, PA), about the company’s AML100 analog machine learning processor, which is designed to bring artificial intelligence to always-on IoT endpoints at a fraction of the power used by digital chips.

Doyle: Our technology provides what we call power-intelligent endpoints. That means we can deploy AI for systems in a very flexible way that doesn’t use a lot of power, and thus supports longer battery life. Or, on the other hand, you might want to add more computing for the same battery.

Our goal is to minimize data movement by using analog inferencing for an earlier determination of the data that is relevant to a particular application. The key for reducing the power load of those endpoints is to minimize the digitization and transmission of irrelevant data.

The traditional technology uses a sensor and an always-on analog-to-digital converter (ADC) that digitizes the analog data for further processing. But for things that only happen occasionally, it really does not make sense to do it that way: you will consume far too much energy for a battery-powered device.

Our analog machine learning chip, the AML100, changes the whole dynamic. You won’t need to digitally process all the data that you get from your environment. You can analyze your raw, unstructured analog data with the AML100 and it will tell you whether the data is relevant. Only then will it wake up the ADC, digitize that data, and send it to a digital processor or to the cloud for further analysis.
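The wake-on-relevance flow described here can be sketched in software. This is only an analogy, not Aspinity's implementation: the function names (`gated_capture`, `peak_detector`, `quantize_8bit`) are hypothetical, and a simple peak threshold stands in for the analog inference the chip performs.

```python
def gated_capture(frames, is_relevant, digitize):
    """Digitize only the frames that the always-on detector flags."""
    digitized = []
    for frame in frames:
        if is_relevant(frame):                 # cheap, always-on check
            digitized.append(digitize(frame))  # the "ADC" wakes only here
    return digitized


def peak_detector(frame, threshold=0.5):
    """Hypothetical stand-in for analog inference: flag frames with a large peak."""
    return max(abs(x) for x in frame) > threshold


def quantize_8bit(frame):
    """Toy ADC: map samples in [-1, 1] to signed 8-bit codes."""
    return [max(-128, min(127, round(x * 127))) for x in frame]


# Four frames of made-up sensor data; only two contain a significant event.
frames = [
    [0.01, -0.02, 0.01],
    [0.9, -0.8, 0.7],
    [0.0, 0.03, -0.01],
    [0.02, 0.6, -0.1],
]
captured = gated_capture(frames, peak_detector, quantize_8bit)
print(f"digitized {len(captured)} of {len(frames)} frames")
```

In this toy run, half of the frames never reach the digitizer, which is the source of the power saving Doyle describes.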

The physical world, the sensed world, is natively analog and it’s the physical sensor data we want to look at and understand. But processing that data has its challenges. For a given signal chain, the output you get for the input you put in is not always consistent. The reason is that you have factors like voltage and temperature offsets that build up over time — that’s been a challenge with analog for a very long time.

Our AML100 chip, however, uses Python-based software to solve the problem of chip variability, and that’s our real innovation. It supports the lowest always-on AI system power. Not only is the chip power very low, but the system power is also very low, because we don’t keep the ADC and other digital components of the system always on, processing data that is mostly irrelevant. By running inferencing in our always-on analog chip, we can typically reduce the system always-on power by 100 times. This idea of insight-driven capabilities at very low power is what we call power intelligence.

Tech Briefs: If I want to put this into my smart home, am I going to be able to figure out how to program it?

Doyle: Typically, it will be delivered as a plug-and-play device, such as a glass break monitor that has our technology embedded in it.

Tech Briefs: Suppose I’m an industrial customer and I want to monitor a motor. What would happen next?

Doyle: Industrial is a little bit different. Although we don’t want you to have to program everything, you should be able to select parameters configured for that machine. You can’t use a one-size-fits-all device like a glass break detector on a machine; there has to be some flexibility to choose and set parameters.

You typically want to look at different types of information. If a bearing is starting to go bad, for example, you’ll get high-frequency noise, typically starting above 5 kilohertz. As it gets worse, the harmonics will spread, and you’ll get down into the lower frequencies.

To analyze that, we use what we call spectral monitoring. There will be a spike at some frequency when a bearing starts to go bad. Because all bearings are different, that frequency could be anywhere in the band. So, we have a spectral monitoring function that monitors the energy within eight different bands across the whole spectrum. We run it always-on and set a threshold within each band. As soon as something changes, we send an alert signal.
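A rough digital sketch of this kind of eight-band spectral monitoring follows. The AML100 does this in analog circuitry; the code below is only an illustration using a naive DFT, and the function names, sample rate, frame length, test tones, and thresholds are all assumptions made for the example.

```python
import cmath
import math

NUM_BANDS = 8  # the interview mentions eight bands


def band_energies(samples, sample_rate, num_bands=NUM_BANDS):
    """Sum spectral energy in equal-width bands between 0 Hz and Nyquist."""
    n = len(samples)
    band_width = (sample_rate / 2) / num_bands
    energies = [0.0] * num_bands
    for k in range(1, n // 2 + 1):  # positive-frequency bins, skipping DC
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(samples))
        band = min(int(k * sample_rate / n / band_width), num_bands - 1)
        energies[band] += abs(coeff) ** 2 / n
    return energies


def bands_over_threshold(energies, thresholds):
    """Return the indices of bands whose energy exceeds its threshold."""
    return [i for i, (e, th) in enumerate(zip(energies, thresholds)) if e > th]


def tone(freq_hz, amplitude, sample_rate, n):
    return [amplitude * math.sin(2 * math.pi * freq_hz * t / sample_rate)
            for t in range(n)]


SAMPLE_RATE = 16_000  # Hz, assumed for the example
FRAME = 256           # samples per frame, assumed

# Synthetic "healthy" vibration plus a synthetic fault tone above 5 kHz.
healthy = tone(500, 1.0, SAMPLE_RATE, FRAME)
faulty = [a + b for a, b in zip(healthy, tone(6_500, 0.5, SAMPLE_RATE, FRAME))]

# Hand-picked per-band thresholds for this synthetic signal only.
thresholds = [80.0] + [1.0] * 7

healthy_alerts = bands_over_threshold(band_energies(healthy, SAMPLE_RATE), thresholds)
faulty_alerts = bands_over_threshold(band_energies(faulty, SAMPLE_RATE), thresholds)
print("healthy:", healthy_alerts, "faulty:", faulty_alerts)
```

With these made-up signals, the healthy frame raises no alert, while the added 6.5 kHz tone trips only the band that contains it.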

And there are also other things you can monitor — a velocity RMS in certain bands or peak-to-peak displacement. You can customize it for a particular motor.

Tech Briefs: Does the customer program it or do you program it?

Doyle: We want the customer to be able to program it — it’s easy to do, since it’s Python-based. “I want to use spectral monitoring, download, and run the machine. I read the steady state values and I’ll set a threshold, maybe 10% higher in each band. I watch it for a minute, then set my values, and walk away, with the instruction to let me know if any of these frequency bands exceeds my threshold.”
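The calibration routine Doyle describes (watch the steady state, then set each threshold about 10% higher) might look like the sketch below. It is not Aspinity's software API: `calibrate_thresholds` and `exceeded_bands` are hypothetical names, and the band readings are invented numbers.

```python
def calibrate_thresholds(baseline_readings, margin=0.10):
    """Average the per-band steady-state readings and add a safety margin."""
    num_bands = len(baseline_readings[0])
    count = len(baseline_readings)
    means = [sum(reading[b] for reading in baseline_readings) / count
             for b in range(num_bands)]
    return [m * (1 + margin) for m in means]


def exceeded_bands(reading, thresholds):
    """Return the indices of bands whose reading is above its threshold."""
    return [i for i, (value, th) in enumerate(zip(reading, thresholds)) if value > th]


# Invented steady-state energy readings for eight bands, taken while
# watching the machine for a short period.
baseline = [
    [10.0, 8.0, 6.0, 4.0, 3.0, 2.0, 1.0, 1.0],
    [10.2, 7.9, 6.1, 4.0, 3.1, 2.0, 1.0, 0.9],
    [9.8, 8.1, 5.9, 4.0, 2.9, 2.0, 1.0, 1.1],
]
thresholds = calibrate_thresholds(baseline)

# A later reading in which band 5 has grown well past its baseline.
later = [10.1, 8.0, 6.0, 4.0, 3.0, 2.9, 1.0, 1.0]
alerts = exceeded_bands(later, thresholds)
print("bands over threshold:", alerts)
```

Averaging several readings before applying the margin is a design choice made here to smooth out momentary fluctuations; a real deployment might use the maximum or a percentile instead.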

Tech Briefs: Could you tell me about the architecture of your chip?

Doyle: The architecture of our AML100 chip is not much different from what you might find in any other MCU or DSP. We have a core, and we have digital and analog I/O. We have an SPI controller so you can communicate from chip to chip or from a chip to an MCU. We have built-in power management and all the peripherals. A digital chip, like an MCU, has an ARM core, or a RISC core, and uses digital logic. However, ours is an analog core: an array of what we call configurable analog blocks (CABs).

The idea is that you use what you need — you use the CABs as building blocks. And even inside the blocks, there are further parameters that you can choose for building a signal chain. It’s a little bit different from the digital world, but you’re still going to build a signal chain from the input to get the information for your decision. This is all configured with software, much like a digital core, so you don’t have to be an analog expert.

We also have a key infrastructure piece we call analog nonvolatile memory, which you can’t directly access, but it’s in every one of the CABs and allows us to do things such as setting a parameter like the center frequency of a filter.

We also have an analog neural network — we have multiply accumulates and they have weights that have to be stored, so we use our analog nonvolatile memory to store them.

To sum it up, we have a proprietary floating gate nonvolatile memory that stores analog values. Our CABs support an assortment of circuit functions and parameters. You can create a bandpass filter, for example, and store those values. You then start to extract features, in this case with an envelope detector and its attack and decay rates, and you use the same memory to store your neural network weights. In that way, you build a complete signal chain.
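To make the envelope detector and its attack and decay rates more concrete, here is a minimal digital sketch. The chip implements this stage in analog circuitry with parameters held in its nonvolatile memory; the function name `envelope` and the rate values are assumptions chosen for illustration.

```python
def envelope(samples, attack=0.5, decay=0.05):
    """Track signal amplitude: rise quickly (attack), fall slowly (decay)."""
    env = 0.0
    out = []
    for x in samples:
        level = abs(x)                       # full-wave rectification
        rate = attack if level > env else decay
        env += rate * (level - env)          # first-order smoothing
        out.append(env)
    return out


# Silence, then a short burst, then silence again.
signal = [0.0] * 5 + [1.0, -1.0] * 10 + [0.0] * 20
env = envelope(signal)

print(f"during silence: {env[4]:.3f}")
print(f"end of burst:   {env[24]:.3f}")
print(f"after decay:    {env[-1]:.3f}")
```

A fast attack lets the envelope follow a sudden event, while a slow decay keeps the feature stable long enough for a downstream stage to act on it.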

But you don’t really have to know all this as a designer. You build your signal chain, test it, and then hit “compile.” When it compiles down into the chip, it stores everything in the nonvolatile memory and makes the desired adjustments — and it’s all done with Python-based software.

Figure 3 is a view of our glass break detection. There are lots of different noises in your home. So, you don’t want to be triggering on things that are not a glass break. You’ll get a constant feed of audio information. Sometimes you’ll have a glass break, and you’ll see it in the label, and we’ll get a detection, and sometimes you don’t, even though it’s a pretty loud noise — how do we decide?

Basically, we’re using different spectral energies, different SNR estimates, different zero crossing rates. These are features in different frequency bands that we push into our neural network to make a decision. “That was a glass break, wake up the MCU; that was not a glass break, everybody, calm down.”
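Two of the features mentioned here, zero crossing rate and an SNR estimate, can be computed as in the sketch below and passed to a single multiply-accumulate "neuron". This is only an illustration: the weights and bias are made up rather than trained, the test signals are synthetic tones, and a real glass break classifier uses many more features across several frequency bands.

```python
import math


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs where the sign changes."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return crossings / (len(frame) - 1)


def snr_db(frame, noise_power):
    """Frame power relative to an assumed noise floor, in decibels."""
    signal_power = sum(x * x for x in frame) / len(frame)
    return 10 * math.log10(signal_power / noise_power)


def neuron(features, weights, bias):
    """One multiply-accumulate stage followed by a sigmoid."""
    z = sum(f * w for f, w in zip(features, weights)) + bias
    return 1 / (1 + math.exp(-z))


def tone(freq_hz, sample_rate=16_000, n=160, phase=0.3):
    return [math.sin(2 * math.pi * freq_hz * t / sample_rate + phase)
            for t in range(n)]


NOISE_POWER = 0.005                   # assumed noise floor
WEIGHTS, BIAS = [6.0, 2.0], -4.0      # hypothetical, not trained


def score(frame):
    features = [zero_crossing_rate(frame), snr_db(frame, NOISE_POWER) / 40]
    return neuron(features, WEIGHTS, BIAS)


low_score = score(tone(200))      # low-frequency hum
high_score = score(tone(6_000))   # high-frequency content
print(f"hum: {low_score:.2f}, high-frequency: {high_score:.2f}")
```

Both tones are equally loud, so the SNR estimate alone cannot separate them; the zero crossing rate is what pushes the high-frequency frame over the decision point in this toy setup.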

When you look at the power to do that: an analog microphone is typically about 100 microwatts, and our AML100 constantly monitoring sound for a glass break is 25 microwatts. So, all told, we’re running at 125 microwatts, compared to typical always-on AI systems that run in the milliwatt range. A battery can last five years with this reduced load.
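The arithmetic behind these figures can be checked with a few lines of code. The microphone and AML100 power numbers come from the interview; the battery (2000 mAh at 3 V) and the 1 mW comparison load are assumptions picked only for illustration, and the estimate ignores self-discharge and the energy spent when the rest of the system wakes up.

```python
MIC_UW = 100      # analog microphone, from the interview
AML100_UW = 25    # AML100 monitoring for glass break, from the interview

HOURS_PER_YEAR = 24 * 365


def battery_life_years(capacity_mah, voltage_v, load_uw):
    """Idealized battery life for a constant load."""
    energy_wh = capacity_mah / 1000 * voltage_v
    hours = energy_wh / (load_uw * 1e-6)
    return hours / HOURS_PER_YEAR


total_uw = MIC_UW + AML100_UW

# Hypothetical battery: 2000 mAh at 3 V (6 Wh).
analog_years = battery_life_years(2000, 3.0, total_uw)
# Hypothetical always-on digital system drawing 1 mW.
digital_years = battery_life_years(2000, 3.0, 1000)

print(f"always-on load: {total_uw} microwatts")
print(f"battery life at {total_uw} uW: {analog_years:.1f} years")
print(f"battery life at 1 mW: {digital_years:.1f} years")
```

Under these assumptions the 125 microwatt load lands in the region of the five-year figure quoted above, while a 1 mW load drains the same battery in under a year.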

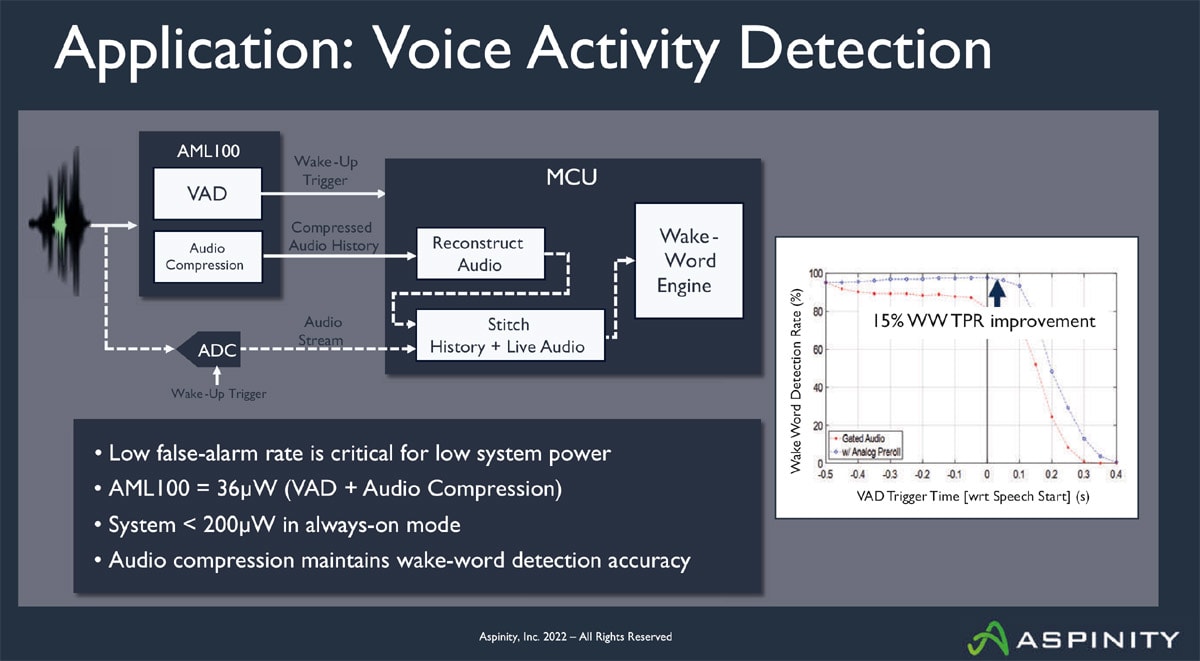

Voice activity detection is another example (see Figure 4). Remember we have parallel paths inside the AML100. In this case we’re doing two independent things at the same time. Much like our glass break algorithm, we’re running a voice algorithm that’s always on and always monitoring for someone speaking. We’ll wake up the system when we detect someone speaking: not a dog bark, not a break in a window, not music, but only when someone’s actually speaking.

We also do audio compression (which is another patent we have) because sometimes when someone says the wake word, they quickly say “I want to add milk to my shopping list” — and by that time a little bit of information may have been lost. We have a novel way to capture that by using our analog audio compression to store a history of the audio — it’s called pre-roll. When there is a wake-up trigger the history can be retrieved, reconstructed, and combined with the live audio to provide a complete message to the wake word engine and cloud.
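The pre-roll idea can be illustrated with a plain ring buffer. The AML100 keeps its history using analog audio compression, which this sketch does not attempt to model; `PreRollBuffer` and `capture_with_preroll` are hypothetical names, and integers stand in for audio samples.

```python
from collections import deque


class PreRollBuffer:
    """Fixed-length history: the oldest samples fall off as new ones arrive."""

    def __init__(self, capacity):
        self._history = deque(maxlen=capacity)

    def push(self, sample):
        self._history.append(sample)

    def snapshot(self):
        return list(self._history)


def capture_with_preroll(stream, trigger_index, preroll_len, live_len):
    """Return buffered history plus live samples once a trigger fires."""
    buffer = PreRollBuffer(preroll_len)
    for i, sample in enumerate(stream):
        if i == trigger_index:
            # Wake-up: prepend the stored history to the live audio.
            return buffer.snapshot() + list(stream[i:i + live_len])
        buffer.push(sample)
    return buffer.snapshot()  # no trigger occurred


stream = list(range(20))  # stand-in for audio samples
message = capture_with_preroll(stream, trigger_index=10, preroll_len=4, live_len=3)
print(message)
```

The four samples before the trigger are recovered along with the live ones, so the downstream wake word engine sees a message with no gap at the start.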

The AML100 doing both of those things at the same time uses about 36 microwatts, which is extremely low power for a device that is always on, waiting for someone to speak. Go back to the example of these devices running in your home 24/7, listening to all the audio. Now we can turn that off for the 80 percent of the time when there is no speech, and we can save the world a lot of energy, because there are 180 million of these devices running right now, consuming milliwatts of power 24/7.

Power Intelligence

The key is what we call power intelligence: a system-level solution that uses analog inferencing to deliver always-on endpoints that use very little power.

This article was written by Ed Brown, editor of Sensor Technology.