With the Internet of Things (IoT) rising quickly in front of us, it would be easy to fall into the trap of thinking that both its trajectory and its applications will be fairly predictable. While hindsight is 20/20, the future can be a little trickier to envision. We can likely all agree that when we saw the first brick-sized wireless telephone, it didn’t take much creative problem solving to conclude that subsequent generations would get smaller, cheaper and generally “better”. But few people could have envisioned the “smartphone,” let alone Apple’s first-generation iPhone. Fewer still looked from that first brick to an age of sensor-driven, cloud-connected apps in the palms of our hands.

Small Data Drives Big Data

Where will all of this big data come from? One obvious answer is our own activities and behavior, led by the mobile devices we carry with us. Traffic reporting apps, such as Google Maps or Waze, make use of our data every day. Our “likes” on everything from a local eatery to geopolitical opinion, especially when cross-referenced with our location history, create a vast behavioral database that everyone from friends to retail coupon pushers can capitalize on. The last, and somewhat unsung, heroes of the IoT revolution will be the sensors. Beyond GPS, the missing piece of the big data pie is a broad but granular understanding of what’s going on in the spaces we occupy. Sensors will bring awareness to the Internet of Things, and lighting is a natural host for that awareness.

Phase I: A Platform is Born

While the concept of connected lighting doesn’t sound particularly innovative, very few of today’s lighting installations can truly be called “connected”. A modern building management system (BMS) may be able to switch banks of lights on or off on a preset schedule, track the overall trend of occupancy-sensor triggers across a series of spaces, and perhaps even estimate the energy usage and savings associated with those events, but that is about where things stop. In fact, before the solid-state (LED) lighting revolution began, there really wasn’t much to control beyond on and off.

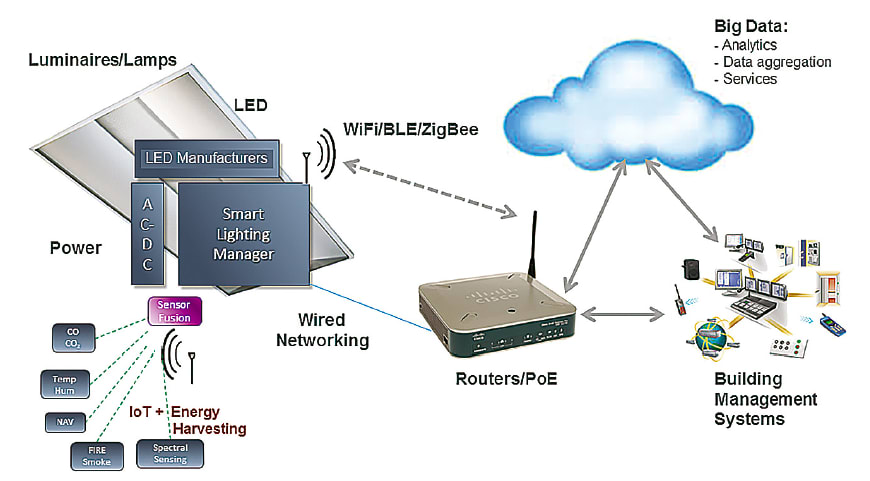

Recent photonically integrated, chip-level solutions (Figure 2) are enhancing both the capabilities and the connectedness of our lighting. They offer a preview of the coming tsunami of sensor-driven awareness, which will deliver not only information on lighting levels and energy usage but also a raft of building-level data covering everything from air quality and space utilization to the vital signs transmitted by wearables on the people in those spaces.

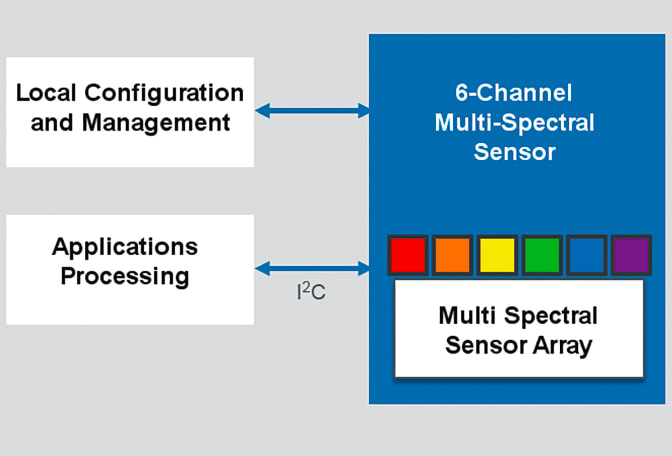

To facilitate sensor extensibility when architecting an IoT sensor hub (Figure 3), designers should consider industry-standard interfaces. In the example shown, I2C has been chosen for core expansion; simple UART-based serial links, such as RS-485, can also be employed. The need for distributed intelligence must also be considered, and it will be driven by the frequency and volume of the sensor data being captured. Whether you look at it as ‘sensors, with intelligence thrown in at no extra charge’, or vice versa, the simplest approach for widely distributed sensing will be a single dedicated controller that includes one or more integrated sensors. Add basic wired or wireless connectivity, and an IoT star is born!
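The hub architecture described above can be sketched in a few lines of Python. This is a minimal, hypothetical model, not ams firmware: the `Sensor` and `SensorHub` names, the simulated `read()` callables, the addresses, rates and the uplink budget are all illustrative assumptions. It captures two ideas from the paragraph: new sensors plug into a shared I2C address space, and the combined frequency and volume of their samples decide whether the hub can forward raw data or must process it locally first.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Dict, List, Union

# Hypothetical descriptor for one plug-in sensor on the hub's I2C bus.
# On real hardware read() would perform an I2C transaction; here it is
# any zero-argument callable so the sketch runs without a bus.
@dataclass
class Sensor:
    address: int                # 7-bit I2C address
    name: str
    sample_bytes: int           # payload size of one reading
    rate_hz: float              # how often the sensor is sampled
    read: Callable[[], float]


@dataclass
class SensorHub:
    uplink_bytes_per_s: float   # assumed capacity of the wired/wireless link
    sensors: Dict[int, Sensor] = field(default_factory=dict)

    def register(self, sensor: Sensor) -> None:
        # 0x00-0x07 and 0x78-0x7F are reserved in 7-bit I2C addressing.
        if not 0x08 <= sensor.address <= 0x77:
            raise ValueError(f"0x{sensor.address:02X} is a reserved I2C address")
        if sensor.address in self.sensors:
            raise ValueError(f"0x{sensor.address:02X} is already in use")
        self.sensors[sensor.address] = sensor

    def raw_data_rate(self) -> float:
        """Bytes per second the hub would send if it forwarded every sample."""
        return sum(s.sample_bytes * s.rate_hz for s in self.sensors.values())

    def needs_local_processing(self) -> bool:
        """True when the raw stream would exceed the uplink budget."""
        return self.raw_data_rate() > self.uplink_bytes_per_s

    def report(self, n: int) -> Dict[str, Union[float, List[float]]]:
        """Take n readings per sensor; forward them raw, or reduce each
        sensor's readings to a mean if the uplink cannot carry them."""
        summarize = self.needs_local_processing()
        out: Dict[str, Union[float, List[float]]] = {}
        for s in self.sensors.values():
            samples = [s.read() for _ in range(n)]
            out[s.name] = mean(samples) if summarize else samples
        return out


# Usage with made-up addresses, rates and readings.
hub = SensorHub(uplink_bytes_per_s=100.0)
hub.register(Sensor(0x39, "ambient_light", 2, 10.0, lambda: 450.0))
hub.register(Sensor(0x40, "humidity", 2, 1.0, lambda: 41.5))
print(hub.raw_data_rate(), hub.report(3))   # low rate: raw samples pass through

hub.register(Sensor(0x48, "acoustic", 2, 1000.0, lambda: 0.2))
print(hub.raw_data_rate(), hub.report(3))   # high rate: hub summarizes locally
```

Averaging is the simplest possible form of local intelligence; depending on the application, a real hub might instead filter, detect events or compress before sending anything upstream.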

The convenience of lighting as the initial sensor platform is undeniable. Lighting systems are already in place. Fixtures sit in convenient physical locations, both for supplying power and for giving useful granularity to the data gathered from the sensed environment. And integrated sensing and control deliver a built-in payback in the form of basic energy savings.

Phase II: Extend the Platform to New Applications

There is an incredible amount of information in light, and a wide range of wavelength bands to choose from. The smart lighting manager used in the initial illustration is equipped with nanophotonic tristimulus XYZ interference filters that respond to the visible spectrum as the human eye does (Figure 4).
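Because XYZ filters of this kind are designed to approximate the CIE 1931 standard observer, calibrated readings can be turned into standard lighting metrics with well-known formulas. The Python sketch below computes (x, y) chromaticity and an approximate correlated color temperature (CCT) using McCamy’s cubic approximation. It assumes the sensor’s raw channel counts have already been converted to calibrated XYZ values (that device-specific calibration step is not shown), and McCamy’s formula is only meaningful for near-white sources close to the blackbody locus.

```python
from typing import Tuple


def chromaticity(X: float, Y: float, Z: float) -> Tuple[float, float]:
    """Project CIE 1931 XYZ tristimulus values onto (x, y) chromaticity."""
    total = X + Y + Z
    if total <= 0:
        raise ValueError("tristimulus sum must be positive")
    return X / total, Y / total


def cct_mccamy(x: float, y: float) -> float:
    """Approximate correlated color temperature in kelvin (McCamy's cubic).

    Assumes a near-white source; far from the blackbody locus the result
    has no physical meaning.
    """
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33


# Example input: XYZ of the D65 white point, with Y normalized to 100.
x, y = chromaticity(95.047, 100.0, 108.883)
cct = cct_mccamy(x, y)
print(f"x={x:.4f}, y={y:.4f}, CCT ~ {cct:.0f} K")
```

For the D65 input the estimate lands at roughly 6,500 K, matching that illuminant’s nominal color temperature. Y itself tracks luminance, so the same reading that drives color tuning can also drive dimming.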

Applications in the visible and near-IR include food quality assessment, chemical product safety (detecting urea or melamine in milk, for example), authentication, signal detection and medical sensing such as SpO2. Mid-IR applications include bio-sensing such as glucose monitoring, fat/cholesterol detection and alcohol detection. Integrating longwave IR (the band used by passive infrared, or PIR, sensors) would require some distinct material design, but it would allow sensing to extend into fire and safety as well as presence detection and people counting.

In speaking with one of the original committee members of the TCP/IP protocol, I learned that while they never conceived that cameras would be connected to the network, they did expect that there would be things they couldn’t envision. A photonics platform approach must similarly account for what cannot yet be accounted for. We might not know what the app will be, but we do know we need to support it.

This article was written by Tom Griffiths, Marketing Manager – Sensor Driven Lighting, ams AG (Raleigh, NC). For more information, contact Mr. Griffiths at