Developing software for small-scale embedded applications is different from developing large-scale software applications. Large-scale applications use commercially available “one-size-fits-all” software development solutions that are difficult to scale downward and usually miss the desired process goals. In many cases, creating a development process tailored to small-scale applications within an existing corporate environment is quicker and less expensive than adapting one of these solutions, and it results in superior developer productivity and product quality.

Software pioneer Grady Booch famously commented that, “Building quality software in a repeatable and predictive fashion is hard.” This statement describes not only the difficulty of the software development process but also the primary goal of any such process: software products should be defect-free, maintainable, and built on verifiable requirements that guarantee successful operation.

Software development processes can be fully described by four orthogonal views: methodology, process artifacts, process procedures, and quality assurance. This article will focus on the methodology view.

Analysis Phase

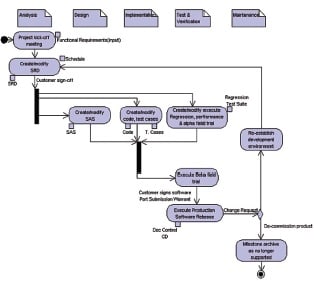

The small-scale application development methodology is best described as a use-case-driven “hybrid spiral” (part waterfall, part iterative). The analysis phase is the waterfall portion of the hybrid. The ability to perform this upfront analysis in detail is a unique advantage of small-scale development.

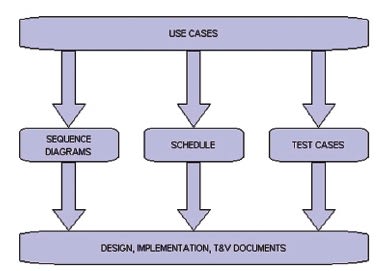

The cornerstone artifact of the analysis phase is a software requirements document (SRD), which specifies the requirements as textual use-case sequences, a top-level state description, and a hardware interface list. This artifact is maintained throughout the product lifecycle. The textual use-case sequences make up most of the SRD and drive the rest of the development: object identification via sequence diagrams, test cases, and the schedule.

Avoid any process of requirements discovery that includes partial design and implementation of the software product; this is very inefficient. Changes in the requirements will occur, prompting updates to the SRD, but an efficient development process means that the designers should make every attempt to unambiguously define the requirements of the customer up front. The process should also accommodate existing processes within the design company, including manufacturing, quality assurance, marketing, and engineering requirements.

As noted earlier, small-scale application development follows a process that is part waterfall and part iterative. An iteration is the software development of one or more use-case scenarios. A schedule, developed as part of the analysis, defines the order of iterations for the implementation and test phases of the process. This order is based on customer needs, risk identification, and the importance of each use case to the end product. Designers should schedule use cases that contain risk items first, treat functionality as the variable, and commit to delivering the product on time.
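The ordering rule can be sketched in a few lines of Python. This is only an illustration: the `UseCase` fields, the sample use-case names, and the tie-break order (customer need before importance) are assumptions, not part of the process described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    has_risk: bool      # contains an identified risk item
    customer_need: int  # 1 = most urgent for the customer (assumed scale)
    importance: int     # 1 = most important to the end product (assumed scale)


def order_iterations(use_cases):
    """Return use cases in delivery order: risk items first, then by
    customer need, then by importance to the end product."""
    return sorted(
        use_cases,
        key=lambda uc: (not uc.has_risk, uc.customer_need, uc.importance),
    )


# Hypothetical backlog for illustration only.
backlog = [
    UseCase("Show firmware version", False, 3, 3),
    UseCase("Log sensor over CAN", True, 2, 1),
    UseCase("Calibrate gauge", True, 1, 2),
    UseCase("Dim backlight", False, 1, 2),
]

schedule = order_iterations(backlog)
for number, uc in enumerate(schedule, start=1):
    print(f"Iteration {number}: {uc.name}")
```

If the delivery date is at risk, functionality is the variable: the entries at the tail of `schedule` are the ones to drop.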

For schedule documentation, spreadsheets work fine: one worksheet describes use-case delivery, while a second details the design, implementation, and verification effort for each object as it is identified. Once the initial schedule is developed, modifications take less than five minutes per week.

Design Phase

The design is documented in a System Architecture Specification (SAS). In addition to the architecture design, this document details the public-member definitions of all objects; the development environment, including tool list and installation instructions; design notes; diagnostics for quality assurance procedures; and performance monitoring. The SAS and the SRD are the only two documents that need to be maintained throughout the product lifecycle. The SAS is the go-to document for a maintenance programmer.

Design documents are notorious for being out of date with the actual implementation. This is avoided by using the SAS for a high-level view of the design, which includes the architecture and a description of each object. Detailed designs of complex individual objects and sequence diagrams for object identification should be documented separately. Designers then can discard these documents after peer reviews and implementation are complete.

The architecture also should include a state diagram with a brief description of the operations visible to the user and the objects associated with each. When objects are assigned to multiple programmers, define the public members of each object's interface so that other team members can use them.
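One way to capture such a state diagram in a reviewable form is a transition table. The sketch below is hypothetical throughout: the states, events, and object names are invented for illustration and do not come from any particular product.

```python
# Hypothetical top-level states, user-visible events, and owning objects.
# Key: (current state, event). Value: (next state, object that handles it).
TRANSITIONS = {
    ("Idle", "power_button"): ("Measuring", "SensorManager"),
    ("Measuring", "menu_button"): ("Setup", "MenuController"),
    ("Setup", "menu_button"): ("Measuring", "SensorManager"),
    ("Measuring", "power_button"): ("Idle", "PowerManager"),
}


def next_state(state, event):
    """Return (new state, responsible object); an event with no
    transition leaves the state unchanged and has no owner."""
    return TRANSITIONS.get((state, event), (state, None))


state = "Idle"
for event in ["power_button", "menu_button", "menu_button", "power_button"]:
    state, owner = next_state(state, event)
    print(f"{event} -> {state} (handled by {owner})")
```

Listing the owning object beside each transition makes it easy to see which public members each programmer has to provide.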

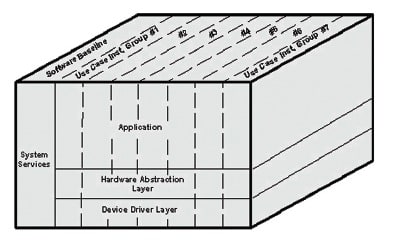

The software baseline (or system services) portion of the application architecture and an object diagram should be the focus of the first SAS iteration. This design provides the software skeleton from which all object modules will derive services and execution. As changes to the SAS occur, each use-case-driven iteration starts with additions and modifications to the SAS document.

Implementation Phase

Implementation encompasses coding, unit testing, and test-case definition activities. As each iteration passes through the implementation phase, code each object only as far as needed to support the current iteration. In this way, the designer can develop the use-case iterations, refactor objects as needed, and keep complexity to a minimum.

When defining test cases, developers should map each test case to the SRD use-case scenarios. Because the group may have to develop several test cases per iteration, it is critical that the test-case definitions be generated from analysis of both the SRD use-case scenarios and the code. This has to be done while the objects are being implemented and all facets of each object's function are fresh in the developers' minds. Additional support or hooks may have to be added to the appropriate modules or to the software baseline to support the execution of the test cases. At the end of the implementation phase, the complete test-case portfolio forms the basis of the regression test used in test and verification.
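A simple traceability check helps keep this mapping honest. The following is a minimal sketch; the `UC-` and `TC-` identifiers and the dictionary layout are assumptions made for illustration.

```python
# Hypothetical SRD use-case identifiers and test-case definitions.
srd_use_cases = ["UC-01", "UC-02", "UC-03"]

# Each test case lists the SRD use cases it exercises.
test_cases = {
    "TC-001": ["UC-01"],
    "TC-002": ["UC-01", "UC-02"],
    "TC-003": ["UC-04"],  # refers to a use case that is not in the SRD
}


def trace(use_cases, cases):
    """Return (use cases with no test case, test cases citing unknown use cases)."""
    known = set(use_cases)
    covered = {uc for refs in cases.values() for uc in refs}
    uncovered = [uc for uc in use_cases if uc not in covered]
    orphans = sorted(tc for tc, refs in cases.items() if not set(refs) <= known)
    return uncovered, orphans


uncovered, orphans = trace(srd_use_cases, test_cases)
print("Use cases without a test case:", uncovered)
print("Test cases citing unknown use cases:", orphans)
```

Both lists should be empty before an iteration is handed over to test and verification.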

Test and Verification (T&V) Phase

While T&V can include the hardware platform, that activity is outside software development itself and is therefore beyond the scope of this article. T&V includes regression testing, performance testing, and alpha and beta field trials.

A regression test is the execution of the test cases generated during the implementation phase. An iteration is not considered complete until its test cases have been added to the regression test suite and the suite has executed successfully. A communication port on the target is used to facilitate the regression testing. A scripted, automated test suite implemented on a port controller (e.g., a PC utility) is preferable. The regression test is critical to product quality throughout the software product's lifecycle.
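A scripted suite of this kind can be very small. In the sketch below, `FakeTargetPort`, its command strings, and its replies are hypothetical stand-ins so the example runs without hardware; a real port controller would replace `transact` with reads and writes on the actual communication port.

```python
class FakeTargetPort:
    """Stand-in for the target's communication port (hypothetical protocol)."""

    _replies = {"PING": "PONG", "GET VERSION": "1.0", "SET LED 1": "OK"}

    def transact(self, command):
        # A real port controller would write to and read from the link here.
        return self._replies.get(command, "ERR")


# Each regression entry: (test-case id, command sent, expected reply).
REGRESSION_SUITE = [
    ("TC-001", "PING", "PONG"),
    ("TC-002", "GET VERSION", "1.0"),
    ("TC-003", "SET LED 1", "OK"),
]


def run_regression(port, suite):
    """Run every test case and return the ids of the ones that failed."""
    failures = []
    for case_id, command, expected in suite:
        actual = port.transact(command)
        if actual != expected:
            failures.append(case_id)
    return failures


failed = run_regression(FakeTargetPort(), REGRESSION_SUITE)
print("PASS" if not failed else f"FAIL: {failed}")
```

Completing an iteration then amounts to appending its test cases to `REGRESSION_SUITE` and rerunning the whole list.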

The performance of the code should be measured prior to each software release to make sure the latest code development has not negatively impacted the application. At a minimum, the performance measurements should be:

- Memory usage. A minimum of 10 percent of code and data memory for each memory type should be available at all times to enable maintenance programming.

- Stack usage. Designers should measure the size of worst-case stack allotment. A minimum of 20 percent of stack allocation should be available for maintenance programming.

- Execution time for each interrupt service routine.

- Overhead usage. Developers should determine the worst-case total interrupt execution time as a percentage of a real-time clock interval.

- Buffer usage. Determine the worst-case “high-water” mark for each buffer used in the system to make sure 20 percent is available for maintenance programming.

- Timer service overruns.
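The headroom rules above reduce to simple arithmetic that can be scripted and run before each release. The measurements below are made-up numbers used only to show the calculation; the resource names and the 1,000-microsecond tick are likewise assumptions.

```python
def free_percent(used, total):
    """Percentage of a resource that is still unused."""
    return 100.0 * (total - used) / total


def interrupt_overhead_percent(worst_case_isr_us, tick_interval_us):
    """Worst-case total ISR time as a percentage of one real-time clock interval."""
    return 100.0 * sum(worst_case_isr_us) / tick_interval_us


# Hypothetical measurements: (name, worst-case used, total, required free percent).
measurements = [
    ("flash (bytes)", 29000, 32768, 10),
    ("RAM (bytes)", 1900, 2048, 10),
    ("stack (bytes)", 200, 256, 20),
    ("UART rx buffer (bytes)", 48, 64, 20),
]

report = []
for name, used, total, required in measurements:
    free = free_percent(used, total)
    report.append((name, free >= required))
    print(f"{name}: {free:.1f}% free, need {required}%")

# Worst-case execution time of each interrupt service routine, in microseconds.
overhead = interrupt_overhead_percent([120, 45, 30], tick_interval_us=1000)
print(f"Worst-case interrupt overhead: {overhead:.1f}% of tick")
```

With these sample numbers the RAM entry fails the 10 percent rule, which is exactly the kind of regression this check is meant to catch before a release.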

An alpha field trial is an engineering field trial that includes all elements of the system; it is not a customer or field-support trial. It usually is performed prior to software completion, but after the high-priority and high-risk use cases are implemented. Its purpose is to uncover issues relatively early in the development cycle.

In its best form, an alpha field trial is implemented by providing company personnel with the product and its user instructions. A weekly meeting is held to discuss any questions they have. This provides not only defect detection, but also a usability test of the product and its user documentation.

A beta field trial is a customer (or a field support department) trial conducted in a customer domain. This is performed after all software development is completed. The trial is extended with additional releases if failures should occur. A beta field trial is complete when the customer (or field support department) signs for acceptance of the product.

Maintenance Phase

Maintenance covers software product support from the initial production release to the end of the product lifecycle. The SRD and SAS artifacts from the analysis and design phases are critical to the cost and quality of maintenance modifications made to the software during this period. A maintenance programmer should be able to rely on these documents to be accurate and up to date at all times. The same software development phases used in the initial development apply to the maintenance activity.

This article was written by Neil Frederickson, senior software engineer, at ISSPRO Inc., Portland, Oregon. For more information, contact Frederickson at