The efficiency of computer chips has increased by using caches — small, local memory banks that store frequently used data and cut down on time- and energy-consuming communication with off-chip memory.

Today's chips generally have three or four different levels of cache, each of which is more capacious, but slower than the last. The sizes of the caches represent a compromise between the needs of different kinds of programs, but it's rare that they are exactly suited to any one program. A system was developed that reallocates cache access on the fly to create new cache hierarchies tailored to the needs of particular programs. The system was tested on a simulation of a chip with 36 cores. Compared to its best-performing predecessors, the system increased processing speed by 20 to 30 percent while reducing energy consumption by 30 to 85 percent.

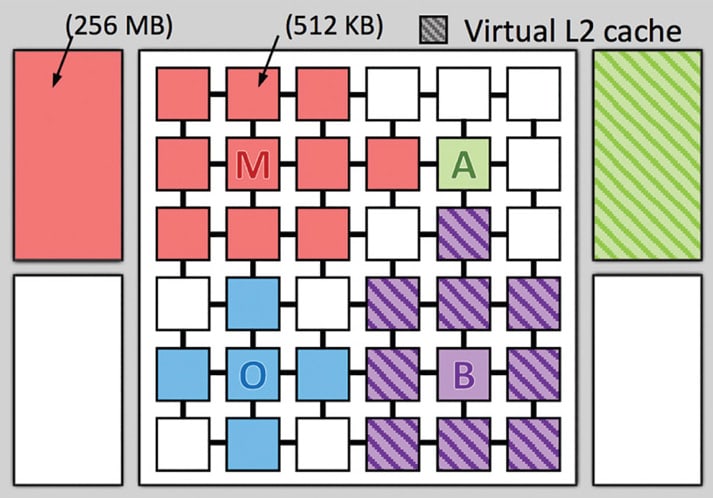

Improvement in computer chips’ processing power has come from the addition of more cores. The chips in most of today's desktop computers have four cores, but several major chipmakers have announced plans to move to six cores, and 16-core processors are not uncommon in high-end servers. Each core in a multicore chip usually has two levels of private cache. All the cores share a third cache, which is broken up into discrete memory banks scattered around the chip. Some new chips also include a DRAM (dynamic random-access memory) cache, which is etched into a second chip mounted on top of the first.

For a given core, accessing the nearest memory bank of the shared cache is more efficient than accessing more distant cores. Unlike today's cache management systems, the new system — called Jenga — distinguishes between the physical locations of the separate memory banks that make up the shared cache. For each core, Jenga knows how long it would take to retrieve information from any on-chip memory bank — a measure known as “latency.”

Jenga builds on an earlier system called Jigsaw, which also allocated cache access on the fly. But Jigsaw did not build cache hierarchies, which makes the allocation problem much more complex. For every task running on every core, Jigsaw had to calculate a latency-space curve, which indicated how much latency the core could expect with caches of a particular size. It then had to aggregate the curves to find a space allocation that minimized latency for the chip as a whole.

Jenga must evaluate the tradeoff between latency and space for two layers of cache simultaneously, which turns the two-dimensional latency-space curve into a three-dimensional surface. That surface turns out to be fairly smooth; it may undulate, but it usually won't have sudden, narrow spikes and dips. That means that sampling points on the surface will give a fairly good sense of what the surface as a whole looks like. A sampling algorithm tailored to the problem of cache allocation was developed that systematically increases the distances between sampled points. Caches with similar capacities — 100 megabytes and 101 megabytes — usually have similar performance, so a geometrically increased sequence captures the full picture.

Once it has deduced the shape of the surface, Jenga finds the path across it that minimizes latency. Then it extracts the component of that path contributed by the first level of cache, which is a 2D curve. At that point, it can reuse Jigsaw's space-allocation machinery. In experiments, this approach yielded an aggregate space allocation that was, on average, within 1 percent of that produced by a full analysis of the 3D surface, which would be prohibitively time-consuming. Adopting the computational shortcut enables Jenga to update its memory allocations every 100 milliseconds to accommodate changes in programs’ memory-access patterns.

For more information, contact Abby Abazorius at