The driverless robotaxis chauffeuring passengers around San Francisco and the advanced driver assistance features on more than half of new vehicles sold this year show just how far autonomous vehicle technology has come. But to fulfill the promise of level 5 autonomy, in which cars drive themselves anywhere under any conditions, experts say we need a new generation of semiconductors.

“Cars have been seen as computers on wheels for a while, but to achieve full autonomy, they need to be more like traveling data centers,” said Valeria Bertacco, the Mary Lou Dorf Collegiate Professor of Computer Science and Engineering. “To make that leap, the auto industry is going to need new materials, architectures, systems, and manufacturing processes for chips that are faster, cheaper, lower-power, and more durable.”

University of Michigan researchers are working with global industry leaders to reimagine AV computing systems. The effort is supported by $10 million from the state of Michigan to the Michigan Semiconductor Talent and Technology for Automotive Research (MSTAR) initiative, which also includes imec, KLA, the Michigan Economic Development Corporation, Washtenaw Community College, and General Motors.

Automated vehicles require immense computational power that grows exponentially with each level of autonomy. “This is a constrained, embedded system,” said Reetuparna Das, Associate Professor of Computer Science and Engineering. “The need for more efficient AI hardware is very well understood. It’s a billion-dollar market.”

Last year, semiconductor industry representatives toured the Mcity Test Facility and looked to the horizon. “It’s great to see people coming together to address these aspects,” Michael Sun, who leads the automotive business development unit at Taiwan-based TSMC, said. “I’ve been working in this area for a long time, and I think there’s a lot of momentum right now.”

The deep learning network models in today’s AVs loosely mimic the structure of a biological brain, but they operate much less efficiently. They work by processing a continuous stream of unfiltered data. U-M researchers are developing an approach that mimics brain behavior, rather than structure. It keys in on contrast, motion, and sudden events, much like our brain and eyes work together to filter and focus our attention.

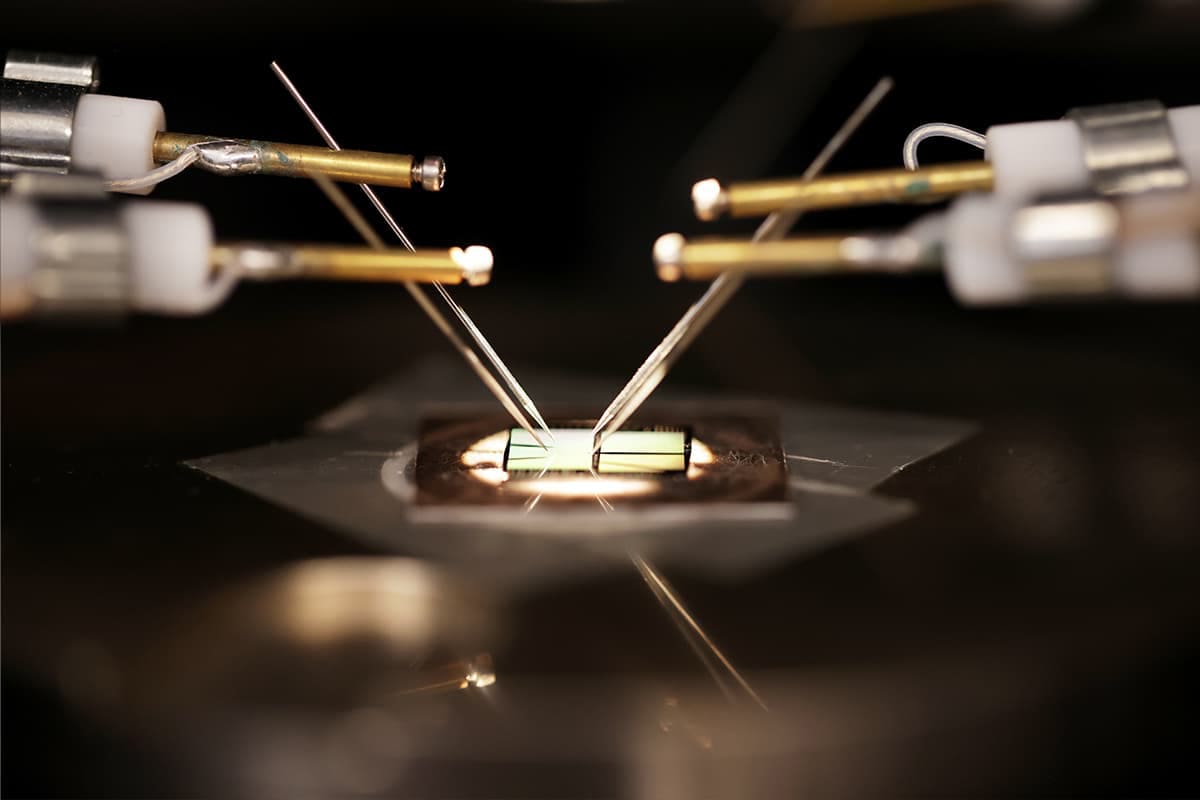

They’re testing a more efficient processor called a neuromorphic chip, based on their tungsten oxide memristor technology, along with a companion “spiking neural network” algorithm. “Neuromorphic sensors don’t capture frames like conventional cameras do. Instead, they detect change in each pixel independently,” said Wei Lu, the James R. Mellor Professor of Engineering in Mechanical Engineering.
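To make the per-pixel idea concrete, here is a minimal sketch of event-style change detection, written as a software simulation over two brightness grids. It is not the U-M team's method: a real neuromorphic sensor does this asynchronously in hardware, and the function name `events_from_frames`, the log-brightness comparison, and the `threshold` value are all assumptions chosen for illustration.

```python
import math

def events_from_frames(prev, curr, threshold=0.2):
    """Emit (row, col, polarity) for each pixel whose brightness changed enough.

    prev, curr: equally sized 2D lists of positive brightness values.
    threshold: hypothetical minimum change in log-brightness that triggers an event.
    Polarity is +1 for a brighter pixel and -1 for a darker one.
    """
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            # Each pixel is compared only with its own previous value.
            delta = math.log(q + 1e-6) - math.log(p + 1e-6)
            if abs(delta) > threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events

# A static 4x4 scene in which one pixel brightens and one darkens.
prev = [[0.5] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
curr[1][2] = 0.9
curr[3][0] = 0.2

events = events_from_frames(prev, curr)
print(events)
```

In this toy scene only two of the 16 pixels produce output, and an unchanged scene produces none, which is where the data savings over frame-by-frame processing would come from.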

While traditional “system on a chip” architecture involves printing all the components on a single piece of silicon, AVs’ computational needs push that approach to its limits in terms of physical size and complexity. The chiplet approach involves smaller, modular components that could be mixed and matched on a circuit board to build more tailored, durable systems. U-M researchers are developing a more robust chiplet communication protocol that can operate for years in a moving vehicle. “Not only does chiplet communication need to be robust, but it also needs to be efficient in terms of energy and bandwidth,” said Mike Flynn, the Fawwaz T. Ulaby Collegiate Professor of Electrical and Computer Engineering. “We’re trying to make it high-speed and low-power too.”