In some situations, an Advanced Air Mobility (AAM) aircraft must land without assistance from GPS. For example, in an urban environment, tall buildings and narrow urban canyons can degrade GPS reception, limiting the aircraft's ability to use GPS to approach a landing area. To address this, NASA Ames has developed a novel Alternative Position, Navigation, and Timing (APNT) solution that uses vision-based navigation to guide AAM aircraft in environments where GPS is not available.

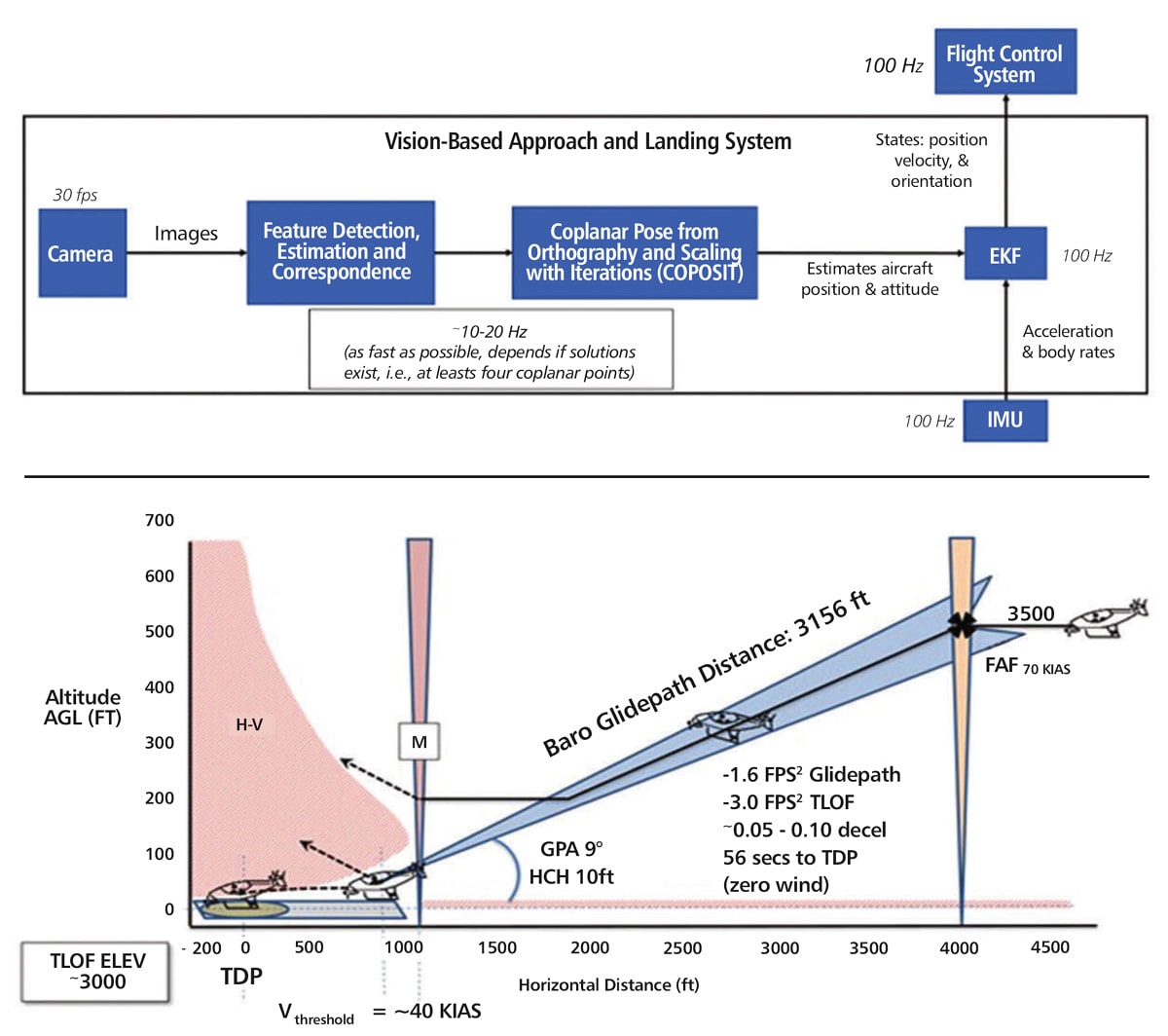

The technology uses an aircraft-mounted camera to visually detect known features in the landing area. A computer vision method called Coplanar Pose from Orthography and Scaling with Iterations (COPOSIT) determines the position and orientation of the aircraft relative to the features detected in the camera images, and an extended Kalman filter predicts and corrects the estimated position, velocity, and orientation of the aircraft.
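COPOSIT, like other pose-from-points methods, rests on the perspective (pinhole) camera model, which maps a landmark's known world coordinates to the pixel where it should appear for a given aircraft pose. The sketch below shows that mapping. It assumes an ideal, distortion-free camera with a 3x3 intrinsic matrix `K` and a pose expressed as a world-to-camera rotation `R` and translation `t`; the function name `project` and this parameterization are illustrative, not taken from the VALS implementation.

```python
import numpy as np

def project(K, R, t, world_pts):
    """Project Nx3 world points to Nx2 pixel coordinates with an ideal pinhole camera.

    K: 3x3 intrinsic matrix; R, t: world-to-camera rotation and translation,
    so a world point X lands at X_cam = R @ X + t in the camera frame.
    """
    world_pts = np.asarray(world_pts, dtype=float)
    cam = world_pts @ R.T + t              # world frame -> camera frame
    if np.any(cam[:, 2] <= 0):
        raise ValueError("a landmark lies behind the camera")
    uvw = cam @ K.T                        # apply focal lengths and principal point
    return uvw[:, :2] / uvw[:, 2:3]        # perspective divide -> (u, v) pixels
```

For example, with focal lengths of 800 px, principal point (320, 240), and the camera 10 m from the pad along its optical axis, a landmark 1 m off-axis in the x direction projects to pixel (400, 240). Pose estimation runs this mapping in reverse: given several such pixel/world pairs, it solves for `R` and `t`.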

The novel Vision-Based Approach and Landing System (VALS) processes a sequence of images captured by the aircraft's video camera as the aircraft descends. Feature detection techniques such as Hough circle detection and Harris corner detection identify the image regions that may contain landmark features. These regions are then compared with a stored list of known landmarks to determine which detected features correspond to which landmarks.
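The detect-then-match step can be sketched as follows, using NumPy only. The Harris part computes the standard corner response det(M) - k·trace(M)² from image gradients and keeps strong local maxima; Hough circle detection is not shown. The matching rule is an assumption, since the source does not say how detections are compared with the stored landmark list: here each known landmark has a predicted pixel location (for example, projected from the filter's predicted pose), and it is paired greedily with the nearest unused detection inside a gating radius. All function names, the window radius, the threshold, and `gate_px` are hypothetical choices for illustration.

```python
import numpy as np

def box_sum(img, r):
    """Sum over a (2r+1)x(2r+1) window at every pixel, via an integral image."""
    p = np.pad(img, r, mode="edge")
    ii = np.pad(p.cumsum(0).cumsum(1), ((1, 0), (1, 0)))
    w = 2 * r + 1
    return ii[w:, w:] - ii[:-w, w:] - ii[w:, :-w] + ii[:-w, :-w]

def harris_response(img, r=1, k=0.04):
    """Harris corner response det(M) - k * trace(M)^2 of the structure tensor M."""
    Iy, Ix = np.gradient(img.astype(float))   # derivatives along rows (y) and cols (x)
    Sxx, Syy, Sxy = box_sum(Ix * Ix, r), box_sum(Iy * Iy, r), box_sum(Ix * Iy, r)
    return Sxx * Syy - Sxy ** 2 - k * (Sxx + Syy) ** 2

def detect_corners(img, rel_thresh=0.1, r=1):
    """Return (u, v) pixels of 3x3 local maxima whose response exceeds rel_thresh * max."""
    R = harris_response(img, r)
    H, W = R.shape
    p = np.pad(R, 1, mode="constant", constant_values=-np.inf)
    neigh = np.stack([p[i:i + H, j:j + W] for i in range(3) for j in range(3)])
    keep = (R >= neigh.max(axis=0)) & (R > rel_thresh * R.max())
    rows, cols = np.nonzero(keep)
    return np.column_stack([cols, rows]).astype(float)

def match_landmarks(detections, predicted, gate_px=5.0):
    """Greedy one-to-one matching; returns sorted (landmark_index, detection_index) pairs."""
    if len(detections) == 0:
        return []
    d = np.linalg.norm(predicted[:, None, :] - detections[None, :, :], axis=2)
    pairs, used = [], set()
    for li in map(int, np.argsort(d.min(axis=1))):    # closest landmarks matched first
        for di in map(int, np.argsort(d[li])):
            if d[li, di] > gate_px:                    # nothing left inside the gate
                break
            if di not in used:
                pairs.append((li, di))
                used.add(di)
                break
    return sorted(pairs)
```

On a synthetic image of a bright square, `detect_corners` returns its four corners, and a landmark whose predicted location is far from every detection stays unmatched rather than being forced onto the wrong feature. Gating this way keeps spurious detections from reaching the pose solver.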

The world coordinates of the best-matched landmarks, paired with their locations in the image, are passed to the COPOSIT module. COPOSIT estimates the camera's position and orientation relative to the landmark points, which yields an estimate of the position and orientation of the aircraft.
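COPOSIT itself iterates from a scaled-orthographic approximation toward the full perspective pose, and its coplanar variant must also resolve a two-fold pose ambiguity, which makes a faithful version longer than a short sketch. As a stand-in, the code below recovers pose from the same inputs (coplanar landmark world coordinates plus their pixel locations) with a different, well-known technique: it estimates the plane-to-image homography by the direct linear transform (DLT) and decomposes it into rotation and translation. This is not COPOSIT. It assumes the landing-area landmarks lie on the world plane Z = 0, a distortion-free camera with known intrinsics `K`, and noise-free inputs for the check; the function names are hypothetical.

```python
import numpy as np

def homography_dlt(world_xy, img_xy):
    """Estimate H (3x3, up to scale) with img ~ H @ [X, Y, 1] from >= 4 coplanar points."""
    rows = []
    for (X, Y), (x, y) in zip(world_xy, img_xy):
        rows.append([X, Y, 1, 0, 0, 0, -x * X, -x * Y, -x])
        rows.append([0, 0, 0, X, Y, 1, -y * X, -y * Y, -y])
    _, _, Vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return Vt[-1].reshape(3, 3)                      # null-space vector = homography

def planar_pose(world_xy, pixels, K):
    """Return world-to-camera (R, t) and camera centre for landmarks on the plane Z = 0."""
    world_xy = np.asarray(world_xy, dtype=float)
    pix_h = np.column_stack([pixels, np.ones(len(pixels))])
    norm = pix_h @ np.linalg.inv(K).T                # normalised image coordinates
    norm = norm[:, :2] / norm[:, 2:3]
    H = homography_dlt(world_xy, norm)               # H is proportional to [r1 r2 t]
    h1, h2, h3 = H[:, 0], H[:, 1], H[:, 2]
    lam = 2.0 / (np.linalg.norm(h1) + np.linalg.norm(h2))
    if lam * h3[2] < 0:                              # landmarks must be in front of camera
        lam = -lam
    r1, r2, t = lam * h1, lam * h2, lam * h3
    R = np.column_stack([r1, r2, np.cross(r1, r2)])
    U, _, Vt = np.linalg.svd(R)                      # snap to the nearest rotation matrix
    R = U @ Vt
    return R, t, -R.T @ t                            # camera centre C = -R^T t
```

Working in normalised image coordinates (pixels multiplied by `K`⁻¹) keeps the DLT system well conditioned. With noisy detections, more landmarks and a reprojection-error check would be used to reject poor matches; taking the camera centre as the aircraft position also ignores the camera's mounting offset.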

The estimated aircraft position and orientation are fed into an extended Kalman filter, which further refines the estimates of aircraft position, velocity, and orientation. As a result, the aircraft's position, velocity, and orientation are determined without the use of GPS data or signals. Future work includes feeding the vision-based navigation data into the aircraft's flight control system to facilitate landing.
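The predict/correct cycle can be sketched with a generic extended Kalman filter that takes a motion model `f`, a measurement model `h`, and their Jacobians. The instantiation is a simplified assumption, not the VALS filter: the state is position and velocity under a constant-velocity model, the measurement is a vision-derived position fix, and orientation is omitted. Because these particular models are linear, their Jacobians are constant and the filter behaves like a standard linear Kalman filter; a model that includes orientation would need Jacobians evaluated at each estimate. The noise matrices `Q` and `R` and all function names are illustrative.

```python
import numpy as np

class EKF:
    """Generic extended Kalman filter; caller supplies models and their Jacobians."""

    def __init__(self, x0, P0, Q, R):
        self.x, self.P = x0.astype(float), P0.astype(float)
        self.Q, self.R = Q, R                     # process / measurement noise covariances

    def predict(self, f, F_jac, dt):
        F = F_jac(self.x, dt)                     # Jacobian at the current estimate
        self.x = f(self.x, dt)                    # propagate the state
        self.P = F @ self.P @ F.T + self.Q        # propagate the uncertainty

    def update(self, z, h, H_jac):
        H = H_jac(self.x)
        y = z - h(self.x)                         # innovation: measured minus predicted
        S = H @ self.P @ H.T + self.R
        K = self.P @ H.T @ np.linalg.inv(S)       # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(len(self.x)) - K @ H) @ self.P

# Hypothetical constant-velocity model; state x = [px, py, pz, vx, vy, vz].
def f_cv(x, dt):
    return np.concatenate([x[:3] + dt * x[3:], x[3:]])

def F_cv(x, dt):
    F = np.eye(6)
    F[:3, 3:] = dt * np.eye(3)
    return F

# Vision fix measures position only.
def h_pos(x):
    return x[:3]

def H_pos(x):
    return np.hstack([np.eye(3), np.zeros((3, 3))])
```

For example, with 10 Hz position fixes carrying about 0.5 m of noise from an aircraft descending at 2 m/s, calling `predict(f_cv, F_cv, 0.1)` and then `update(z, h_pos, H_pos)` for each frame lets the filter recover the descent rate, which the vision fixes do not measure directly, while smoothing the position estimate.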

NASA is actively seeking licensees to commercialize this technology. Please contact NASA’s Licensing Concierge at