While working with deaf students for more than a decade and a half, Bader Alsharif, a Ph.D. candidate in the Florida Atlantic University Department of Electrical Engineering and Computer Science, saw firsthand the communication struggles that his students faced daily.

Determined to bridge the gap between his students and people who don't know sign language, he and his team set out to use his background in AI and computer engineering to build a real-time American Sign Language (ASL) recognition system.

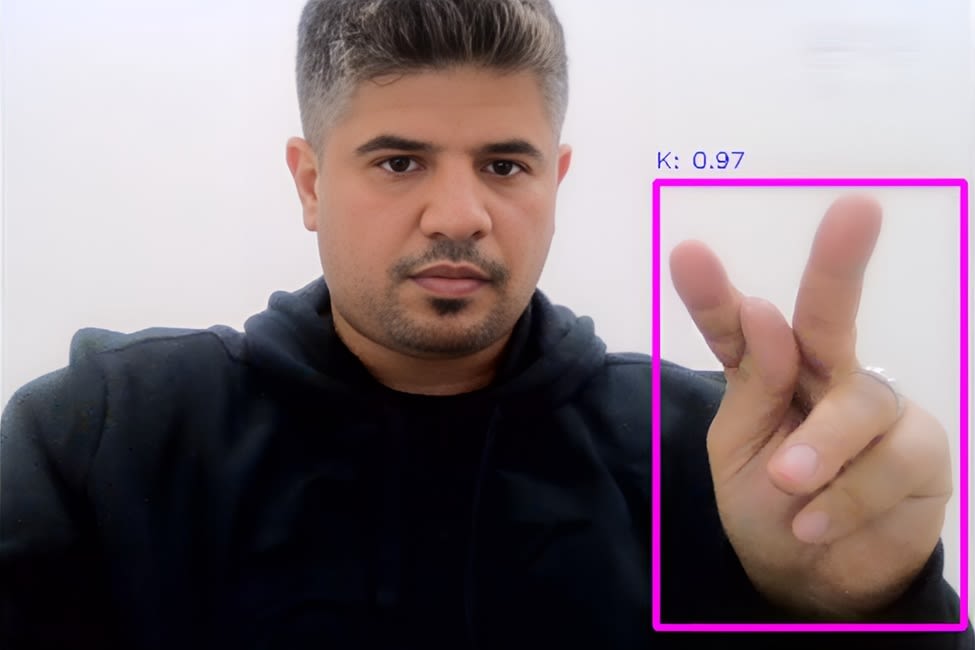

For the study, the group developed a custom dataset of more than 30,000 static images of ASL hand gestures. The team used MediaPipe to annotate each image with 21 key landmarks on the hand, providing detailed spatial information about the hand's structure and position.
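The article does not say how the MediaPipe landmarks were stored for YOLOv8 training. As one hedged possibility, the sketch below assumes the Ultralytics pose-dataset label format (one text line per object: class index, normalized box center and size, then keypoint coordinates) and derives the box from the landmarks themselves. The function name `landmarks_to_yolo_pose_line` and the `padding` margin are hypothetical, not details from the study.

```python
from typing import Sequence, Tuple

# MediaPipe Hands reports 21 landmarks per detected hand.
NUM_LANDMARKS = 21


def landmarks_to_yolo_pose_line(
    class_index: int,
    landmarks: Sequence[Tuple[float, float]],
    padding: float = 0.05,
) -> str:
    """Build one YOLO pose-format label line from normalized (x, y) landmarks.

    Assumption: landmarks are normalized to [0, 1] image coordinates, as
    MediaPipe reports x and y. The box is the tight box around the landmarks,
    widened by a hypothetical `padding` margin and clipped to the image.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")

    xs = [x for x, _ in landmarks]
    ys = [y for _, y in landmarks]

    # Padded, clipped box around the hand.
    x_min = max(0.0, min(xs) - padding)
    x_max = min(1.0, max(xs) + padding)
    y_min = max(0.0, min(ys) - padding)
    y_max = min(1.0, max(ys) + padding)

    # YOLO boxes are (center_x, center_y, width, height), all normalized.
    cx = (x_min + x_max) / 2
    cy = (y_min + y_max) / 2
    w = x_max - x_min
    h = y_max - y_min

    fields = [str(class_index)] + [f"{v:.6f}" for v in (cx, cy, w, h)]
    # Append each keypoint as "x y" (two values per keypoint, no visibility flag).
    for x, y in landmarks:
        fields += [f"{x:.6f}", f"{y:.6f}"]
    return " ".join(fields)
```

With this layout, the dataset YAML would declare `kpt_shape: [21, 2]` so the trainer expects 21 keypoints of two values each.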

The annotations played a critical role in enhancing the precision of YOLOv8, the deep learning model the researchers trained, by allowing it to better detect subtle differences in hand gestures.

“You can use a regular webcam to capture your hands, and then MediaPipe tracks those 21 key points in the hand and then passes this information to the model, YOLOv8,” Alsharif said. “The model analyzes these 21 key points, and then perfectly recognizes the hand gesture used to represent letters or words. So, the system then displays the recognized letters or words on the screen as text.”
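Alsharif describes the output stage only at a high level. One common way to turn noisy per-frame predictions into on-screen text, shown here purely as an illustrative assumption rather than the team's method, is to append a letter only after the detector has reported it for several consecutive frames. The class name `LetterStabilizer` and the `min_frames` threshold are hypothetical.

```python
from typing import Optional


class LetterStabilizer:
    """Turn per-frame letter predictions into a stable text string.

    A letter is appended only after it has been predicted for `min_frames`
    consecutive frames, and it is not appended again until the prediction
    changes (or the hand leaves the frame, reported as None).
    """

    def __init__(self, min_frames: int = 5) -> None:
        self.min_frames = min_frames
        self.text = ""
        self._current: Optional[str] = None
        self._count = 0
        self._emitted = False

    def update(self, letter: Optional[str]) -> str:
        """Feed one frame's prediction (None if no hand); return the text so far."""
        if letter != self._current:
            # Prediction changed: start a new run for the new letter.
            self._current = letter
            self._count = 0
            self._emitted = False
        if letter is not None:
            self._count += 1
            if self._count >= self.min_frames and not self._emitted:
                self.text += letter
                self._emitted = True
        return self.text
```

Requiring a short run of agreeing frames trades a few frames of latency for fewer spurious letters when the prediction flickers between similar hand shapes.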

According to the study's results, leveraging this detailed hand pose information made the model's detection more precise, allowing it to capture the complex structure of ASL gestures accurately. Combining MediaPipe for hand tracking with a YOLOv8 model trained for gesture detection produced a powerful system for recognizing ASL alphabet gestures with high accuracy.

The model performed strongly. It achieved an accuracy of 98 percent, correctly identified gestures at a rate of 98 percent, and reached an overall performance score (F1 score) of 99 percent. It also achieved a mean Average Precision (mAP) of 98 percent and a mAP50-95 score of 93 percent; mAP50-95 is a stricter measure that averages precision across bounding-box overlap thresholds from 50 to 95 percent.
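For readers unfamiliar with these metrics, the sketch below shows their standard definitions: F1 is the harmonic mean of precision and recall, and mAP50-95 averages the mAP computed at ten intersection-over-union (IoU) thresholds from 0.50 to 0.95 in steps of 0.05. The function names are illustrative, and the per-threshold mAP values would come from an evaluation tool; this is not the study's evaluation code.

```python
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds 0.50, 0.55, ..., 0.95 used by mAP50-95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def map50_95(map_at_threshold: Dict[float, float]) -> float:
    """Average the mAP values computed at each of the ten IoU thresholds."""
    return sum(map_at_threshold[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)
```

A predicted box only counts as correct at a given threshold if its IoU with the ground-truth box meets that threshold, so the higher thresholds are harder to satisfy. That is why mAP50-95 typically comes out lower than mAP at 0.50 alone.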

“The biggest challenge was the lack of a clean, balanced dataset,” Alsharif said. “Most existing datasets were either too small, had one sign up, or didn't cover all the lighting and background conditions. We had to spend around one year cleaning the dataset, making sure every ASL letter had enough samples. I think we have 5,000 per sample, which is more than enough. All this was to avoid bias between letters.
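The article does not describe the cleaning procedure itself. As a hypothetical illustration of the goal Alsharif describes (giving every letter enough samples so the model is not biased toward some letters), the sketch below counts images per class and randomly undersamples larger classes down to the size of the smallest one. Undersampling is only one option; collecting more data or augmenting smaller classes are others. The function names are invented for this example.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[str, str]  # (image_path, letter_label)


def class_counts(samples: List[Sample]) -> Dict[str, int]:
    """Count how many samples each letter has."""
    return dict(Counter(label for _, label in samples))


def undersample_to_min(samples: List[Sample], seed: int = 0) -> List[Sample]:
    """Randomly drop samples so every letter has as many as the rarest letter."""
    if not samples:
        return []
    by_label: Dict[str, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)
    target = min(len(group) for group in by_label.values())
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    balanced: List[Sample] = []
    for label in sorted(by_label):
        balanced.extend(rng.sample(by_label[label], target))
    return balanced
```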

“The second obstacle we faced was finding the right AI parameters. Getting high accuracy required a lot of experimentation with model settings, especially for YOLOv8 and MediaPipe, which I combined in this project. So, we needed to optimize everything from image size and learning rate to keypoint tracking, and do the calculations to make the system work accurately in real time,” he added.
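Alsharif mentions tuning settings such as image size and learning rate. A simple way to organize that kind of experimentation is an exhaustive grid search. The sketch below assumes a caller-supplied `evaluate(imgsz, lr0)` function that trains a model and returns a validation score. The parameter names mirror the Ultralytics training arguments `imgsz` and `lr0`, but the `evaluate` hook and any grid values are hypothetical, not the team's settings.

```python
from itertools import product
from typing import Callable, Dict, Sequence, Tuple


def grid_search(
    evaluate: Callable[[int, float], float],
    imgsz_options: Sequence[int],
    lr0_options: Sequence[float],
) -> Tuple[Dict[str, float], float]:
    """Try every (imgsz, lr0) pair and return the best config and its score.

    `evaluate` is a hypothetical hook: in practice it might train a YOLOv8
    model with these settings and return a validation metric such as
    mAP50-95. Higher scores are treated as better; ties keep the first config.
    """
    best_config: Dict[str, float] = {}
    best_score = float("-inf")
    for imgsz, lr0 in product(imgsz_options, lr0_options):
        score = evaluate(imgsz, lr0)
        if score > best_score:
            best_config, best_score = {"imgsz": imgsz, "lr0": lr0}, score
    return best_config, best_score
```

With the Ultralytics package, such a hook might call `YOLO("yolov8n.pt").train(data=..., imgsz=imgsz, lr0=lr0)` and then read a metric from the validation results; check the Ultralytics documentation for the exact fields. Each grid point is a full training run, so practical grids stay small.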

According to Alsharif, the team is now focusing on expanding the system's capabilities from recognizing individual sign language letters to interpreting sign language sentences.

“That’s what we are working on now. We are examining three different types of models: transformers, recurrent neural networks, and temporal convolutional networks,” he said. “So, we are choosing between these three models, which can understand image sequences.”
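Moving from letters to sentences means classifying sequences of frames rather than single images. Whichever of the three model families the team settles on, each typically consumes a fixed-length window of per-frame features. The sketch below assumes each frame is represented by the 21 MediaPipe landmarks flattened to 63 numbers (x, y, z per point) and slices a stream of frames into overlapping windows; the function names, window length, and stride are hypothetical.

```python
from typing import List, Sequence, Tuple

Landmark = Tuple[float, float, float]  # (x, y, z) as reported by MediaPipe
FrameFeatures = List[float]


def flatten_frame(landmarks: Sequence[Landmark]) -> FrameFeatures:
    """Flatten 21 (x, y, z) landmarks into a 63-value feature vector."""
    if len(landmarks) != 21:
        raise ValueError(f"expected 21 landmarks, got {len(landmarks)}")
    return [coord for point in landmarks for coord in point]


def sliding_windows(
    frames: Sequence[FrameFeatures], window: int = 30, stride: int = 10
) -> List[List[FrameFeatures]]:
    """Split a frame sequence into overlapping windows of `window` frames.

    Each window has shape (window, 63) and could be fed to a transformer,
    an RNN, or a temporal convolutional network. Trailing frames that do
    not fill a complete window are dropped.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    return [
        list(frames[start:start + window])
        for start in range(0, len(frames) - window + 1, stride)
    ]
```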

Alsharif also noted that this project is not just about technology — it’s about inclusiveness and empowerment for deaf students and the community in general.

“I hope this inspires more developers and researchers to use AI for social good,” he added. “Finally, I would like to thank Florida Atlantic University for providing this diverse environment where students can exchange knowledge and experience, and for the continuous support they have given me from the beginning. I shared my goals with them, and they guided me on how to achieve them by recommending the right courses.”

This article was written by Andrew Corselli, Digital Content Editor, SAE Media Group. For more information, visit www.fau.edu.