While the average pampered pup at home may lounge on the couch and demand belly rubs, the robotic dogs being developed at Arizona State University are stepping up to take on some of the world's most dangerous tasks.

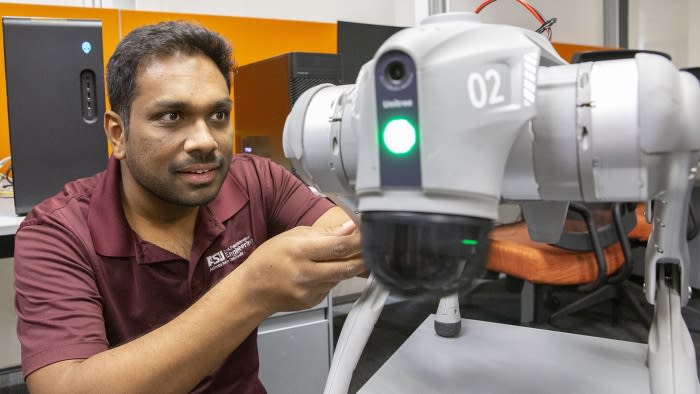

Meet the Unitree Go2. This agile, quadrupedal robot is designed for more than fetching sticks. It's equipped with advanced artificial intelligence, cameras, LiDAR and a voice interface, and it's learning to assist with everything from search-and-rescue missions to guiding visually impaired people through complex environments.

Ransalu Senanayake, an assistant professor of computer science and engineering in the School of Computing and Augmented Intelligence, part of the Ira A. Fulton Schools of Engineering at ASU, is leading a research team out to prove that some heroes have both circuits and tails.

The team is working on multiple projects using the Unitree Go2 robotic dog. One of the lab's most exciting projects comes from Eren Sadıkoğlu, a master's student in robotics and autonomous systems who is developing vision- and language-guided navigation tools to help the robotic dog perform search-and-rescue missions.

"Our goal is to equip students with both the theoretical foundations and practical skills needed to tackle the challenges of tomorrow," said Ross Maciejewski, director of the School of Computing and Augmented Intelligence. "By blending AI with robotics, we are not only expanding the capabilities of technology but also creating future leaders who will bring transformative solutions to critical issues."

Here is an exclusive Tech Briefs interview, edited for length and clarity, with Senanayake.

Tech Briefs: What was the biggest technical challenge you faced while developing the Unitree Go2 robotic dog?

Senanayake: We were trying to run some deep learning algorithms. Since it's new hardware, and different learning models come in different sizes with different latencies, picking the right one for our purpose wasn't straightforward. We had to run a few experiments to figure out what we wanted.
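The trade-off Senanayake describes (model size and latency versus what the task needs) can be illustrated with a small selection rule. This is a minimal sketch under stated assumptions, not the lab's actual procedure: the `Candidate` fields, the `pick_model` helper, the model names and all numbers are hypothetical, and it assumes each model has already been profiled on the robot's onboard hardware.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Candidate:
    """A hypothetical model that has been profiled on the robot's onboard GPU."""
    name: str
    params_millions: float  # model size
    latency_ms: float       # measured per-frame inference latency
    accuracy: float         # task score from an offline evaluation


def pick_model(candidates: Sequence[Candidate], latency_budget_ms: float) -> Optional[Candidate]:
    """Return the most accurate candidate that fits the latency budget, or None."""
    feasible = [c for c in candidates if c.latency_ms <= latency_budget_ms]
    if not feasible:
        return None
    # Prefer higher accuracy; among equally accurate models, prefer the faster one.
    return max(feasible, key=lambda c: (c.accuracy, -c.latency_ms))


if __name__ == "__main__":
    # Illustrative numbers only, not measurements from the ASU lab.
    profiled = [
        Candidate("small-detector", 7.0, 18.0, 0.61),
        Candidate("medium-detector", 25.0, 45.0, 0.68),
        Candidate("large-detector", 90.0, 140.0, 0.73),
    ]
    choice = pick_model(profiled, latency_budget_ms=50.0)
    print(choice.name if choice else "no model fits the budget")
```

With a 50 ms budget the largest model is ruled out, so the rule settles on the medium one; in practice the "few experiments" would be what fills in the latency and accuracy columns.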

Tech Briefs: Can you explain in simple terms how Unitree Go2 works, please?

Senanayake: Unitree Go2 is a quadruped robot. It has its own GPUs, which are required for running all the deep learning models in parallel. You can think of it as just another computer, a powerful one, but with a body. It has links, and it can sense its surroundings. We collect data from the environment through different sensors: cameras, LiDAR for understanding depth, and a microphone. Then we do all the computation onboard, run our AI algorithms, and send signals to the motors telling the robot how to move and what to do.
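The sense, compute and act cycle described above can be sketched as a simple control step. This is a hedged illustration, not Unitree's SDK or the ASU lab's code: `SensorFrame`, `MotorCommand`, `perceive`, `plan`, `control_step` and the `read_sensors`/`send` callbacks are all hypothetical stand-ins, and the "perception" here is a toy rule in place of real deep learning models.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SensorFrame:
    image: List[List[int]]  # stand-in for a camera frame
    depth_m: List[float]    # stand-in for LiDAR ranges, in meters
    audio_command: str      # stand-in for a transcribed voice request


@dataclass
class MotorCommand:
    forward_mps: float  # forward velocity, meters per second
    yaw_rps: float      # turning rate, radians per second


def perceive(frame: SensorFrame) -> dict:
    """Placeholder for onboard models; this toy version ignores the image
    and only reports the nearest LiDAR return plus the spoken goal."""
    return {"nearest_obstacle_m": min(frame.depth_m), "goal": frame.audio_command}


def plan(percept: dict, stop_distance_m: float = 0.5) -> MotorCommand:
    """Stop and turn in place if something is too close; otherwise walk forward."""
    if percept["nearest_obstacle_m"] < stop_distance_m:
        return MotorCommand(forward_mps=0.0, yaw_rps=0.5)
    return MotorCommand(forward_mps=0.4, yaw_rps=0.0)


def control_step(read_sensors: Callable[[], SensorFrame],
                 send: Callable[[MotorCommand], None]) -> MotorCommand:
    """One cycle: read sensors, compute onboard, send a command to the motors."""
    frame = read_sensors()
    cmd = plan(perceive(frame))
    send(cmd)
    return cmd


if __name__ == "__main__":
    fake_frame = SensorFrame(image=[[0]], depth_m=[2.0, 3.5], audio_command="find coffee")
    print(control_step(lambda: fake_frame, print))
```

On a real robot this step would run in a loop at a fixed rate, and `perceive` is where the deep learning models Senanayake mentions would sit.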

Tech Briefs: Can you talk about some of the projects your lab is working on? For example, the post-disaster rescue work and assisting the visually impaired?

Senanayake: We've built a number of different applications on the same robot because, as I said, it's essentially a computer, just a more active one. So, we can use it for multiple purposes. One is the assistive support dog. My undergraduate student, Riana Chatterjee, is working on that. She's trying to help visually impaired people understand their surroundings. For instance, in an exhibition hall, a visually impaired person might need to find a place to get coffee. When they ask, "Can we find a place with coffee?" the robot looks around and tries to detect what's out there. That's one aspect.
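To make the coffee example concrete, here is a deliberately simplified sketch of matching a spoken request against what the robot's detector sees. It is an assumption-laden stand-in, not the lab's method: the real system likely uses learned vision-language models, whereas this uses a hypothetical keyword table (`QUERY_TO_LABELS`), a hypothetical `Detection` record and a hypothetical `find_target` helper.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Detection:
    label: str          # class name from a hypothetical object detector
    confidence: float   # detector score in [0, 1]
    bearing_deg: float  # direction relative to the robot's heading


# Hypothetical mapping from request keywords to object labels that satisfy them.
QUERY_TO_LABELS = {
    "coffee": {"coffee cup", "coffee machine", "cafe sign"},
    "restroom": {"restroom sign"},
}


def find_target(request: str, detections: List[Detection],
                min_conf: float = 0.5) -> Optional[Detection]:
    """Return the most confident detection relevant to the request, or None."""
    words = request.lower().replace("?", " ").split()
    wanted = set()
    for word in words:
        wanted |= QUERY_TO_LABELS.get(word, set())
    matches = [d for d in detections if d.label in wanted and d.confidence >= min_conf]
    return max(matches, key=lambda d: d.confidence, default=None)


if __name__ == "__main__":
    seen = [
        Detection("chair", 0.9, 10.0),
        Detection("coffee machine", 0.8, -30.0),
        Detection("cafe sign", 0.6, 45.0),
    ]
    target = find_target("Can we find a place with coffee?", seen)
    print(target)
```

The returned bearing is the kind of information the robot would then turn into guidance for the person it is assisting.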

The other aspect is how to navigate safely in different environments, so that the robot can assess different threat levels, figure out where the safe areas to walk are, and then signal that to the visually impaired person.

Tech Briefs: Do you have any set plans for other work with Unitree Go2? If not, where do you go from here? What are your next steps?

Senanayake: This basic setup helps us with multiple other research projects; this is just one application. These applications really help us understand which research problems we should be working on. There's a lot of work still to do. One direction is making these robots much more user-friendly for people with visual impairments.

The other line of work is more about search and rescue, especially in indoor environments, such as inside buildings after an earthquake or other disaster, or at sites with chemical spills where it's not safe for humans to go.

So, how can we send these robots in to understand the environment? Another advantage of this kind of robot is that, because they're small, they can reach places that even humans sometimes cannot.