In the world of automation, both industrial and collaborative robots have proven invaluable in improving efficiency, repeatability, and safety. However, many robots in use today still operate in what can be considered a “blind” mode. They follow pre-programmed paths and rely on precise fixturing and basic sensors for feedback. This can be especially limiting in dynamic environments where parts shift or change. When that happens, the robot’s process is interrupted, requiring user intervention for recalibration or mechanical retooling. It’s a problem rooted in the lack of machine vision.

Without integrated vision, robots can only perform tasks in precisely the same way every time. If a part is even slightly out of position or rotated differently, the robot may fail to complete its task, or worse, cause an error in the process. In such cases, human operators are forced to step in, realign parts, adjust fixtures, or completely reprogram the robot’s motion. This results in costly downtime and limits the robot’s flexibility in adapting to new tasks in the workflow.

Industrial vs. Collaborative Robots

Before diving deeper into vision integration, it’s important to distinguish between industrial and collaborative robots, as their use cases and physical characteristics vary significantly.

Industrial robots are the heavy-duty machines you typically see in settings such as automotive assembly lines, electronics manufacturing, metal fabrication, and aerospace production. They are fast, powerful, and fixed in place. Their speed and ability to handle heavy loads also make them potentially dangerous to human operators.

To ensure safety, industrial robots are usually enclosed within guarded zones, surrounded by fences or protected by light curtains that stop the robot if a person enters the working area during operation.

Collaborative robots, or cobots, are quite the opposite. Designed to work alongside humans, cobots are lighter and slower, and they carry much lower payloads. Their reduced speed and force make them safer to operate. When set up correctly, with force and speed limited according to the applicable safety standards and without sharp tools on the end effector, cobots can operate without physical guarding.

Cobots are easier to move, redeploy, and adapt to different tasks in a production environment. But with that flexibility comes a new challenge: when a cobot is moved to a new location or starts handling different parts, it typically has to be reconfigured or re-taught, unless it has vision capabilities.

Machine Vision

Without machine vision, robots rely on precise fixturing, basic sensors, and programming. If anything changes, the entire process must be recalibrated manually. For instance, consider a scenario where the robot is programmed to pick up one type of smartphone, and now the factory needs it to handle a slightly different model with a different shape or geometry. Even this minor change can throw off the system, requiring updated code or retooled hardware.

Machine vision solves this problem by enabling the robot to “see” the part. This allows it to identify not only its location, but also its orientation. When properly calibrated, the vision system converts what it sees (in pixels) into real-world measurements. This gives the robot precise information about where to move in three dimensions, as well as how to orient itself (roll, pitch, yaw) to correctly grasp the part.
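To make the pixel-to-real-world conversion concrete, here is a minimal Python sketch. It assumes the part sits on a flat work surface, so that a single 3x3 homography `H`, obtained beforehand during calibration, maps image pixels to millimetres on that plane. The matrix values and the function name `pixel_to_world` are hypothetical, chosen only for illustration. A planar homography recovers position on the surface and in-plane rotation only; recovering roll and pitch as well requires a full 3D pose estimate.

```python
def pixel_to_world(H, u, v):
    """Map pixel (u, v) to plane coordinates (x, y) in mm using homography H.

    H is a 3x3 nested list assumed to come from a prior camera calibration.
    """
    # Homogeneous multiply: [x', y', w]^T = H @ [u, v, 1]^T
    xh = H[0][0] * u + H[0][1] * v + H[0][2]
    yh = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under H")
    # Divide out the projective scale factor
    return xh / w, yh / w


# Hypothetical calibration result: 0.5 mm per pixel, origin offset by (-100, -50) mm
H = [
    [0.5, 0.0, -100.0],
    [0.0, 0.5, -50.0],
    [0.0, 0.0, 1.0],
]

x_mm, y_mm = pixel_to_world(H, 400, 300)
print(f"part centre at x={x_mm:.1f} mm, y={y_mm:.1f} mm")
```

In practice, `H` would be estimated from at least four point correspondences between the image and the work surface, for example with OpenCV's `cv2.findHomography`.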

With vision, the robot no longer has to rely solely on mechanical precision. Instead, it can adapt dynamically, switching between different parts, layouts, or processes without extensive reprogramming or new fixtures.

The Complexity of Early Vision Integration

Although machine vision has been around for some time, integrating it with robots used to be complicated and tedious, far from the increasingly seamless experience available today. In the past, robots and cameras often came from different manufacturers, each with its own communication protocols, programming languages, and calibration methods. To get these systems to work together, integrators had to perform several tasks, starting with establishing communication between the robot and the vision system, often using protocols such as TCP/IP, EtherNet/IP, Modbus, or proprietary APIs.

Next, they had to program each system separately, in different languages, and coordinate commands between them. They also had to mount cameras manually at fixed positions in the cell, separate from the robot. Finally, integrators had to calibrate the mapping between the camera’s pixel-based view and the robot’s physical workspace.

This created a fragile system. If the robot moved, the calibration would often need to be completely redone. It also meant that even small changes in the environment could disrupt the process and add hours or days to the setup time.

Modern Integration

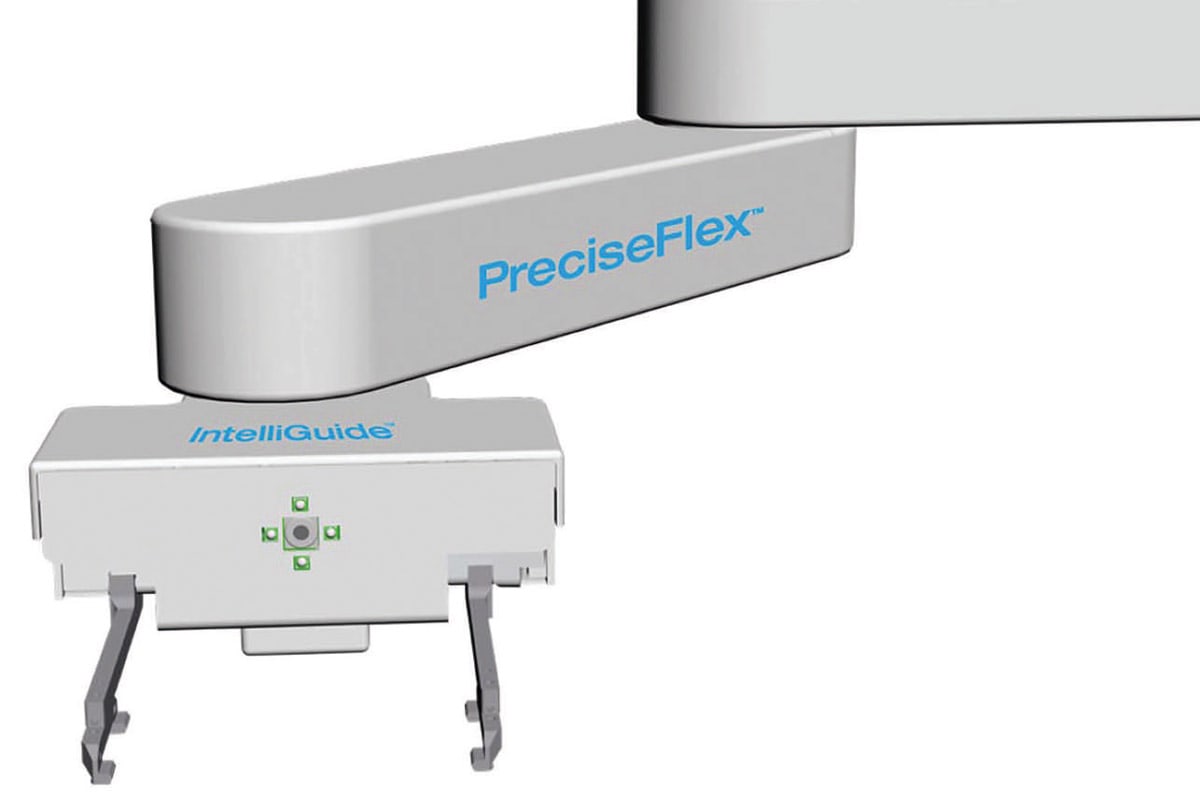

Today, many collaborative robots offer options for integrated cameras mounted directly on the end-effector. These integrated systems include not only front-facing and downward-facing cameras, but also built-in lighting to improve image quality and ensure consistent results in various lighting conditions.

These cameras work together to enable better calibration and 3D perception. By combining multiple views, the robot can accurately perceive its workspace and calculate part position in 3D space.
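As one illustration of how two views yield depth, the sketch below triangulates a point seen by a rectified stereo pair, where depth follows from disparity as Z = f * B / d (focal length in pixels times baseline, divided by the horizontal pixel shift between views). This is an assumed setup for illustration only: the integrated cameras described above are not necessarily arranged as a rectified stereo pair, and the function name and the focal length, baseline, and principal point values are hypothetical.

```python
def triangulate_rectified(u_left, v_left, u_right, f_px, baseline_mm, cx, cy):
    """Return (X, Y, Z) in mm, in the left camera frame, for a rectified stereo pair."""
    disparity = u_left - u_right  # horizontal pixel shift between the two views
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = f_px * baseline_mm / disparity  # depth along the optical axis
    x = (u_left - cx) * z / f_px        # back-project through the pinhole model
    y = (v_left - cy) * z / f_px
    return x, y, z


# Hypothetical camera parameters: 800 px focal length, 60 mm baseline, 640x480 image
X, Y, Z = triangulate_rectified(400, 240, 352, f_px=800, baseline_mm=60, cx=320, cy=240)
print(f"X={X:.1f} mm, Y={Y:.1f} mm, Z={Z:.1f} mm")
```

Because depth is inversely proportional to disparity, a one-pixel disparity error causes a larger depth error for distant parts than for nearby ones.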

In many systems, fiducial markers are used to make calibration easier. They help the robot convert pixel-based measurements into real-world coordinates while compensating for lens distortion.
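Lens distortion compensation can be sketched with a simple radial model (the radial terms of the Brown-Conrady model). A point in normalized image coordinates, that is `((u - cx) / fx, (v - cy) / fy)`, is scaled by a factor 1 + k1·r² + k2·r⁴, where r is its distance from the image centre. The coefficients `k1` and `k2` would be estimated during calibration, for example by observing fiducial markers at known positions; that estimation step is not shown, and the coefficient values below are hypothetical. Undistorting has no closed-form solution, so this sketch inverts the model by fixed-point iteration.

```python
def distort(x, y, k1, k2):
    """Apply radial distortion to normalized, undistorted coordinates (x, y)."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd, yd, k1, k2, iterations=20):
    """Invert distort() by fixed-point iteration, starting from the distorted point."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        # Re-estimate the undistorted point using the radius of the current guess
        x, y = xd / scale, yd / scale
    return x, y


# Hypothetical coefficients for a lens with mild barrel distortion (k1 < 0)
K1, K2 = -0.2, 0.05
xd, yd = distort(0.3, 0.2, K1, K2)
xu, yu = undistort(xd, yd, K1, K2)
print(f"distorted=({xd:.4f}, {yd:.4f}) corrected=({xu:.4f}, {yu:.4f})")
```

For a full camera model that also includes tangential distortion, OpenCV's `cv2.undistortPoints` performs this kind of correction.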

With the cameras mounted directly on the robot and fiducial markers enabling automatic calibration, the system becomes much more portable and adaptable. Moving the robot to a new location no longer requires extensive recalibration: the robot can register its position relative to known markers in the new environment and resume operation with minimal setup.
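Registering the robot’s position relative to known markers amounts to finding the rotation and translation that best align the marker positions the camera measures with the positions where the markers are known to be. The sketch below solves the planar (2D) version in closed form using a least-squares fit. It assumes marker centres have already been detected and converted to millimetres; the function name and marker layout are hypothetical, and a real system would typically estimate a full 3D pose instead.

```python
import math


def estimate_rigid_2d(src, dst):
    """Least-squares rotation theta and translation (tx, ty) with R(theta) @ src + t ~= dst.

    src: marker positions measured in the robot's frame (mm).
    dst: the same markers' known positions in the work cell frame (mm).
    """
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched marker positions")
    # Centroids of both point sets
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Accumulate dot (cosine term) and cross (sine term) products of centred points
    c = s = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        c += px * qx + py * qy
        s += px * qy - py * qx
    theta = math.atan2(s, c)
    # Translation maps the rotated source centroid onto the destination centroid
    ct, st = math.cos(theta), math.sin(theta)
    tx = dx - (ct * sx - st * sy)
    ty = dy - (st * sx + ct * sy)
    return theta, tx, ty


# Hypothetical example: robot relocated by 30 degrees and (10, -5) mm
true_theta, true_t = math.radians(30.0), (10.0, -5.0)
measured = [(0.0, 0.0), (100.0, 0.0), (0.0, 50.0), (100.0, 50.0)]
known = [
    (math.cos(true_theta) * x - math.sin(true_theta) * y + true_t[0],
     math.sin(true_theta) * x + math.cos(true_theta) * y + true_t[1])
    for x, y in measured
]
theta, tx, ty = estimate_rigid_2d(measured, known)
print(f"rotation={math.degrees(theta):.2f} deg, translation=({tx:.2f}, {ty:.2f}) mm")
```

With noisy detections, using more markers than the minimum of two averages out measurement error.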

Another significant advancement is the rise of graphical programming environments. Many modern robotic systems now offer drag-and-drop graphical interfaces that allow users to program robot behaviors without writing complex code.

Instead of writing scripts or using specialized programming languages, users can drag and drop predefined actions, use guided wizards for calibration and setup, and make changes on the fly without deep technical training.

This dramatically lowers the barrier to entry and speeds up deployment.

Industry Applications

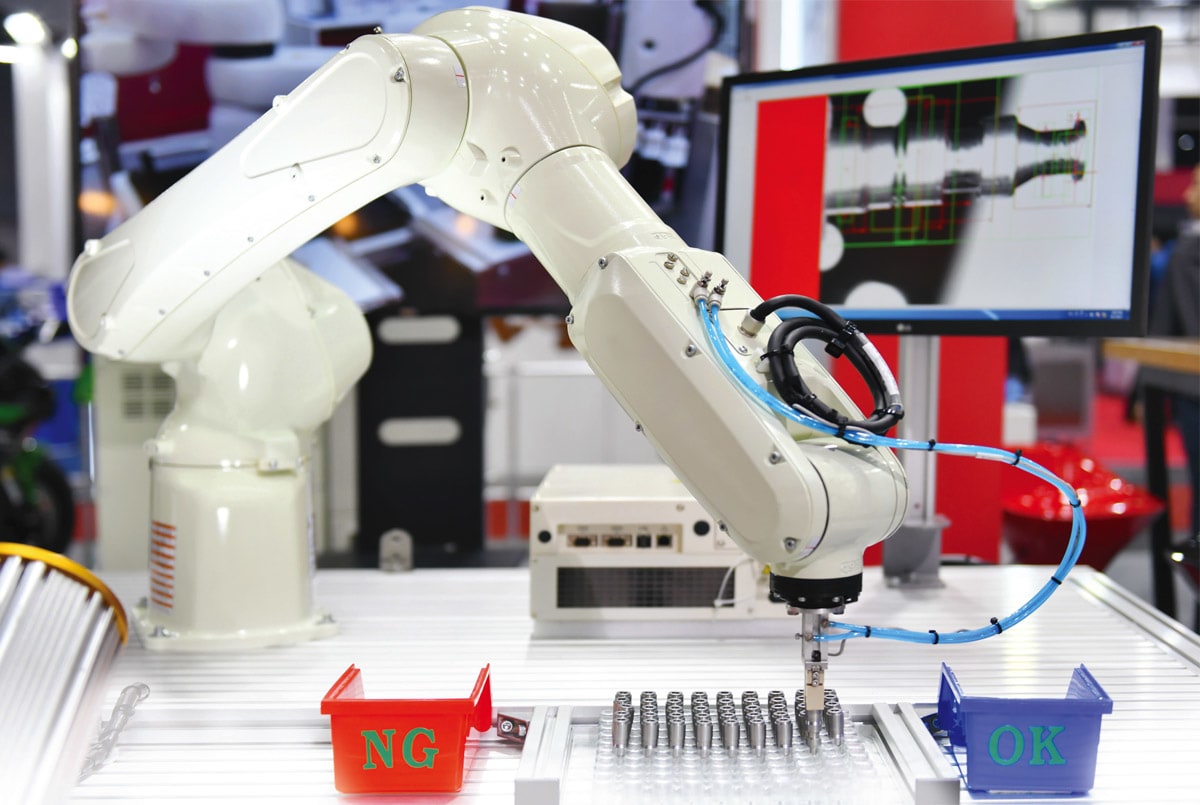

Vision-guided collaborative robots are already being used in a variety of practical applications. One example is random bin picking, in which the robot identifies and retrieves parts that are jumbled together in unpredictable orientations.

They are also used for rotational alignment, ensuring parts are correctly rotated before assembly or placement. Vision-guided cobots are also well suited to barcode reading, part presence detection, product identification, measurement, and inventory tracking.
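For rotational alignment, one classic way to estimate a part’s in-plane orientation from a segmented image is to use second-order central image moments, where the principal-axis angle is θ = ½·atan2(2μ11, μ20 - μ02). The sketch below assumes a binary mask in which nonzero pixels belong to the part; the function name is hypothetical. Moments give the axis only up to 180°, and because image rows increase downward, positive angles correspond to clockwise rotation on screen.

```python
import math


def blob_orientation(mask):
    """Principal-axis angle (radians) of the nonzero pixels in a 2D mask.

    x runs along columns and y along rows (downward), as in image coordinates.
    """
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        raise ValueError("mask contains no part pixels")
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    # Second-order central moments of the part's pixel distribution
    mu20 = sum((x - cx) ** 2 for x, _ in pts)
    mu02 = sum((y - cy) ** 2 for _, y in pts)
    mu11 = sum((x - cx) * (y - cy) for x, y in pts)
    return 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)


# A part lying along the image diagonal (hypothetical 5x5 mask)
diagonal = [[1 if x == y else 0 for x in range(5)] for y in range(5)]
angle = blob_orientation(diagonal)
correction = -angle  # rotation needed to bring the part's axis to 0 degrees
print(f"part axis at {math.degrees(angle):.1f} deg, rotate by {math.degrees(correction):.1f} deg")
```

OpenCV’s `cv2.moments` also provides these central moments (`mu20`, `mu02`, `mu11`), so in practice the angle can be computed from its output.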

These applications highlight the flexibility and intelligence that vision brings to collaborative robotics. Vision enables cobots to do much more than repeat the same motion over and over.

AI Advances

As powerful as current vision-guided robots are, AI will take them even further. One of the most promising developments is self-learning through repetition, in which robots fine-tune parameters, adjust motion trajectories, and recognize errors more intelligently, reducing the need for manual adjustment or reprogramming.

AI also allows robots to handle tasks that are too complex for traditional vision systems. Consider inspecting a package that should contain multiple items, each of which may be placed in any orientation. Traditional vision systems may fail if items overlap or obscure one another. An AI model, by contrast, can be trained on many examples of “good” and “bad” configurations, learning to recognize acceptable variability while still identifying defects.

By combining vision with AI, robots will be better equipped to operate in unstructured environments, identify complex patterns, and make intelligent decisions based on what they see.

Vision integration is revolutionizing collaborative robotics. By enabling robots to see and adapt to their environment, it gives manufacturers the flexibility to automate more processes with less complexity and at lower cost. Combined with low-code programming environments and emerging AI capabilities, vision-guided cobots are becoming not only more powerful but also more autonomous. The future of automation isn’t just about faster robots. It’s about smarter, more flexible systems.

This article was written by Luck Chand, Applications Engineer, Valin Corporation (San Jose, CA).