Dominant element-based gradient descent and dynamic initial learning rate (DOGEDYN) is a method of sequential principal component analysis (PCA) that is well suited for such applications as data compression and extraction of features from sets of data. In comparison with a prior method of gradient-descent based sequential PCA, this method offers a greater rate of learning convergence. Like the prior method, DOGEDYN can be implemented in software. However, the main advantage of DOGEDYN over the prior method lies in the facts that it requires less computation and can be implemented in simpler hardware. It should be possible to implement DOGEDYN in compact, low-power, very-large-scale integrated (VLSI) circuitry that could process data in real time.

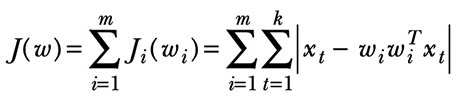

where $m$ is the number of principal components, $k$ is the number of sampling time intervals (the number of measurement vectors), $x_t$ is the measured vector at time $t$, and $w_i$ is the $i$th principal vector (equivalently, the $i$th eigenvector). The term

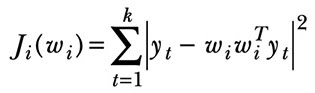

$J_i(w_i)$

in the above equation is further expanded by

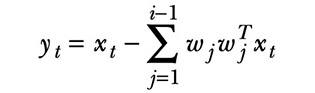

and

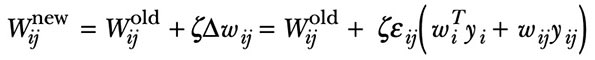

The learning algorithm in DOGEDYN extracts the principal vectors sequentially by means of a gradient descent in which only the dominant element is used at each iteration. With the details of the mathematical derivation omitted for the sake of brevity, each iteration updates a weight matrix according to

where $w_{ij}$ is an element of the weight matrix and ζ is the dynamic initial learning rate, chosen to increase the rate of convergence by compensating for the energy lost through the previous extraction of principal components. The value of the dynamic learning rate is given by

where $E_0$ is the energy at the beginning of learning and $E_{i-1}$ is the energy of the $(i-1)$th extracted principal component.
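The sequential extraction described above can be illustrated with a minimal sketch. It is not the DOGEDYN update itself, since the update and learning-rate equations are not reproduced in this text. As stated assumptions: the sketch substitutes a batch Oja-style update on all weight elements (rather than only the dominant element), it reads the energy compensation as `rate0 * e0 / e_res`, where `e_res` is the energy remaining after the earlier extractions, and it expects zero-mean input. The names `sequential_pca`, `mean_energy`, `base_rate`, and `epochs` are hypothetical.

```python
import math

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def mean_energy(vectors):
    """Average squared norm of the vectors (the 'energy' used in this sketch)."""
    return sum(dot(v, v) for v in vectors) / len(vectors)

def sequential_pca(data, m, base_rate=0.5, epochs=200):
    """Extract up to m principal vectors, one at a time, from zero-mean data."""
    n = len(data[0])
    residual = [list(x) for x in data]
    e0 = mean_energy(residual)            # energy at the beginning of learning
    if e0 == 0.0:
        return []
    rate0 = base_rate / e0                # assumed initial learning rate
    components = []
    for _ in range(m):
        e_res = mean_energy(residual)     # energy left after earlier extractions
        if e_res < 1e-12 * e0:
            break                         # nothing left to extract
        rate = rate0 * e0 / e_res         # assumed energy-compensated rate
        # Fixed, non-symmetric starting vector so that runs are reproducible.
        w = [float(j + 1) for j in range(n)]
        norm = math.sqrt(dot(w, w))
        w = [v / norm for v in w]
        for _ in range(epochs):
            proj = [dot(y, w) for y in residual]              # w . y_t
            cw = [sum(p * y[j] for p, y in zip(proj, residual)) / len(residual)
                  for j in range(n)]                          # C w
            r = dot(w, cw)                                    # w^T C w
            # Oja-style step (assumption): w <- w + rate * (C w - (w^T C w) w)
            w = [wj + rate * (cj - r * wj) for wj, cj in zip(w, cw)]
        norm = math.sqrt(dot(w, w))
        w = [v / norm for v in w]
        components.append(w)
        # Deflation: remove the part of each vector that lies along w.
        deflated = []
        for y in residual:
            p = dot(y, w)
            deflated.append([yj - p * wj for yj, wj in zip(y, w)])
        residual = deflated
    return components

# Small zero-mean example: most energy along (1, 1), a little along (1, -1).
data = [(2, 2), (-2, -2), (1, 1), (-1, -1), (0.5, -0.5), (-0.5, 0.5)]
components = sequential_pca(data, m=2)
```

For this example the two extracted vectors align, up to sign, with the directions (1, 1) and (1, -1). Rescaling the rate by the remaining energy keeps the step size comparable from one component to the next, which is the intuition behind compensating for the energy removed by earlier extractions.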

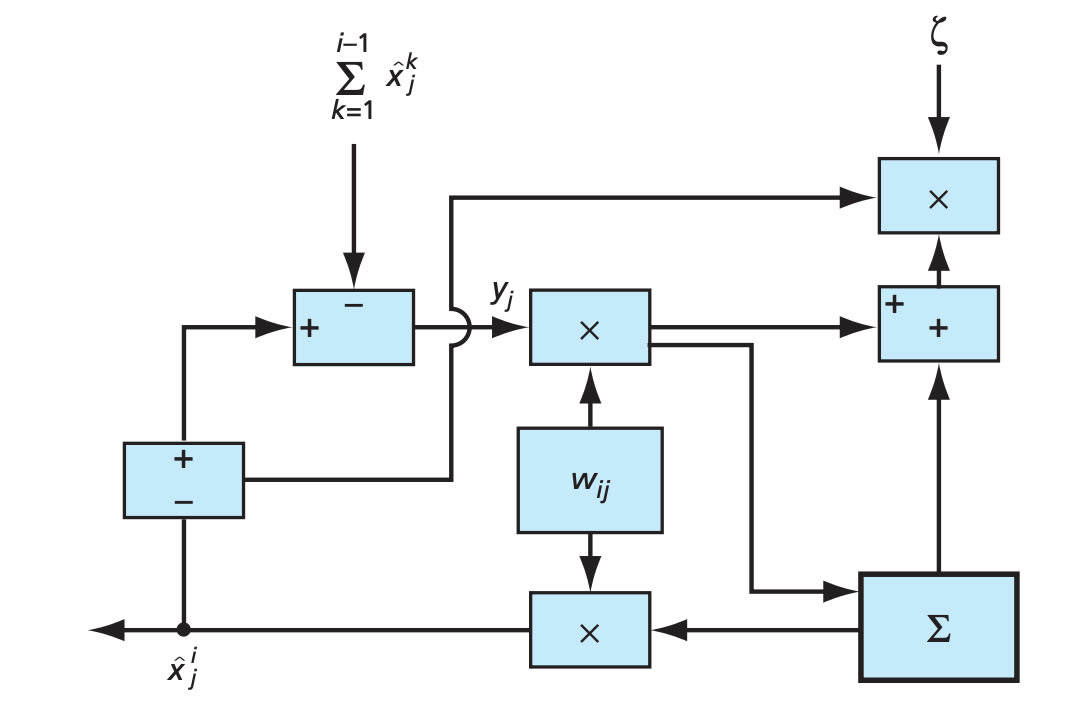

The figure depicts a hardware architecture for implementing DOGEDYN. The sum of the data previously projected on the previous principal components is subtracted from the raw input data, here denoted $x_j$, to obtain $y_j$ (which is equivalent to $y_t$ as defined above, after appropriate changes in subscripts). The Σ box calculates the inner product of vectors $y$ and $w_i$. The output of the Σ box is summed with the previously computed product of $y_j$ and $w_{ij}$, and the result is multiplied by the dynamic learning rate before $w_{ij}$ is updated.

This work was done by Tuan Duong and Vu Duong of Caltech for NASA’s Jet Propulsion Laboratory. For further information, access the Technical Support Package (TSP) free on-line at www.techbriefs.com/tsp under the Information Sciences category. In accordance with Public Law 96-517, the contractor has elected to retain title to this invention. Inquiries concerning rights for its commercial use should be addressed to:

Innovative Technology Assets Management

JPL

Mail Stop 202-233

4800 Oak Grove Drive

Pasadena, CA 91109-8099

(818) 354-2240


Refer to NPO-40034, volume and number of this NASA Tech Briefs issue, and the page number.

This Brief includes a Technical Support Package (TSP). "Method of Real-Time Principal-Component Analysis" (reference NPO-40034) is currently available for download from the TSP library.


Overview

The document presents a technical overview of a novel hardware architecture for real-time adaptive Principal Component Analysis (PCA), developed by Tuan A. Duong at NASA's Jet Propulsion Laboratory. The primary focus is on optimizing PCA for data feature extraction and image compression, addressing the limitations of traditional gradient descent methods in terms of convergence and hardware implementation.

PCA is a statistical technique widely used for feature extraction and data reduction, particularly effective for large and redundant datasets. The conventional approach involves calculating the covariance matrix, obtaining eigenvalues, and identifying corresponding eigenvectors, a process that is computationally intensive and challenging for real-time applications. The proposed method simplifies this process, making it more suitable for hardware implementation, particularly in System-On-A-Chip (SoC) designs.
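For contrast, the conventional route mentioned above (covariance matrix, then eigenvalues and eigenvectors) can be sketched in a few lines. This is only an illustration under stated assumptions: the data are zero-mean, the covariance is normalized by the sample count, and power iteration stands in for a full eigen-solver, so only the leading eigenpair is returned. The function names `covariance` and `leading_eigenpair` are hypothetical.

```python
import math

def covariance(data):
    """Covariance matrix of zero-mean row vectors, normalized by the sample count."""
    k, n = len(data), len(data[0])
    return [[sum(x[a] * x[b] for x in data) / k for b in range(n)]
            for a in range(n)]

def leading_eigenpair(c, iterations=100):
    """Power iteration: largest eigenvalue of c and its unit eigenvector."""
    n = len(c)
    v = [1.0] + [0.0] * (n - 1)           # assumed starting vector
    for _ in range(iterations):
        cv = [sum(c[a][b] * v[b] for b in range(n)) for a in range(n)]
        norm = math.sqrt(sum(e * e for e in cv))
        v = [e / norm for e in cv]
    cv = [sum(c[a][b] * v[b] for b in range(n)) for a in range(n)]
    eigenvalue = sum(p * q for p, q in zip(v, cv))   # Rayleigh quotient
    return eigenvalue, v

# Small zero-mean example: most variance along (1, 1), a little along (1, -1).
data = [(2, 2), (-2, -2), (1, 1), (-1, -1), (0.5, -0.5), (-0.5, 0.5)]
c = covariance(data)
eigenvalue, eigenvector = leading_eigenpair(c)
```

Forming the full covariance matrix costs on the order of the square of the vector dimension in both storage and arithmetic per sample, which is one reason this route is harder to map onto compact real-time hardware than an update that works directly on the incoming vectors.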

The paper details the performance of the new algorithm through two case studies: data feature extraction and image compression. The results demonstrate that the proposed technique can extract principal components from datasets with performance comparable to traditional PCA methods implemented in MATLAB. However, the innovation lies in its simplicity and efficiency, which allows for easier hardware integration.

The document also compares the new approach, referred to as DOGEDYN (Dominant Element Based Gradient Descent with Dynamic Initial Learning Rate), with existing techniques. It shows that DOGEDYN requires significantly less hardware and offers a more straightforward system architecture, enabling reliable learning and real-time processing. The study includes a comparison of component extraction across different techniques, highlighting the advantages of DOGEDYN in terms of learning efficiency and hardware requirements.

In conclusion, the research emphasizes the potential of the DOGEDYN technique for real-time applications, particularly in hyperspectral data classification and compression. The findings suggest that this innovative approach can facilitate the extraction of principal component vectors in a fully parallel manner, making it a valuable contribution to the field of data processing and aerospace technology. The work has implications for various applications beyond aerospace, potentially benefiting industries that rely on efficient data analysis and processing.