Industrial operations often depend on the expertise of a handful of highly skilled operators. These experts accumulate years — sometimes decades — of tacit knowledge about processes that are too variable or complex to be fully automated. Their intuition allows them to balance competing objectives, adjust to unpredictable conditions, and keep production running at quality standards.

This reliance on individual expertise poses a challenge as the industrial sector faces retirements, workforce turnover, and the growing demand for higher levels of automation. The challenge isn’t in capturing operator expertise itself, but in encoding the experts’ nuanced decision-making into adaptable and explainable automation solutions that scale their expertise.

Historically, engineers have relied on a mix of manual expertise and automation to manage these complex industrial processes. Each approach has strengths, but none is sufficient on its own.

Manual control remains the most reliable fallback, but it comes at a cost. Operators must juggle dozens of inputs at once, making constant trade-offs to keep systems stable. Performance varies with individual experience, and scaling expertise across a workforce is difficult.

Consider gob formation in glass manufacturing, the first key step in producing glass bottles. The process requires managing inputs from more than 90 sensors and coordinating 60 control actions in real time. Mastering this system typically takes a human operator more than a decade, and the process has never been automated because of its complexity.

Traditional automation has clear limitations as well. Closed-loop controllers, such as PID systems, perform well in stable, single-variable environments but struggle when conditions shift or objectives conflict. Rule-based automation can encode straightforward heuristics but quickly grows brittle as exceptions multiply. In recent years, experimental AI control systems have begun to offer increased adaptability, but they often operate as “black boxes” that cannot translate their algorithmic decision-making into a form humans can interpret. This makes iterative improvement difficult and leaves engineers uneasy about the lack of explainability.

The result is a persistent gap: Processes either cannot scale because they rely too heavily on human intuition, or they sacrifice efficiency under the rigid constraints of existing automation.

What’s needed is a way to capture the judgment of experienced operators and combine it with modular, explainable forms of automation that can be composed into larger systems.

Multi-Agent Systems

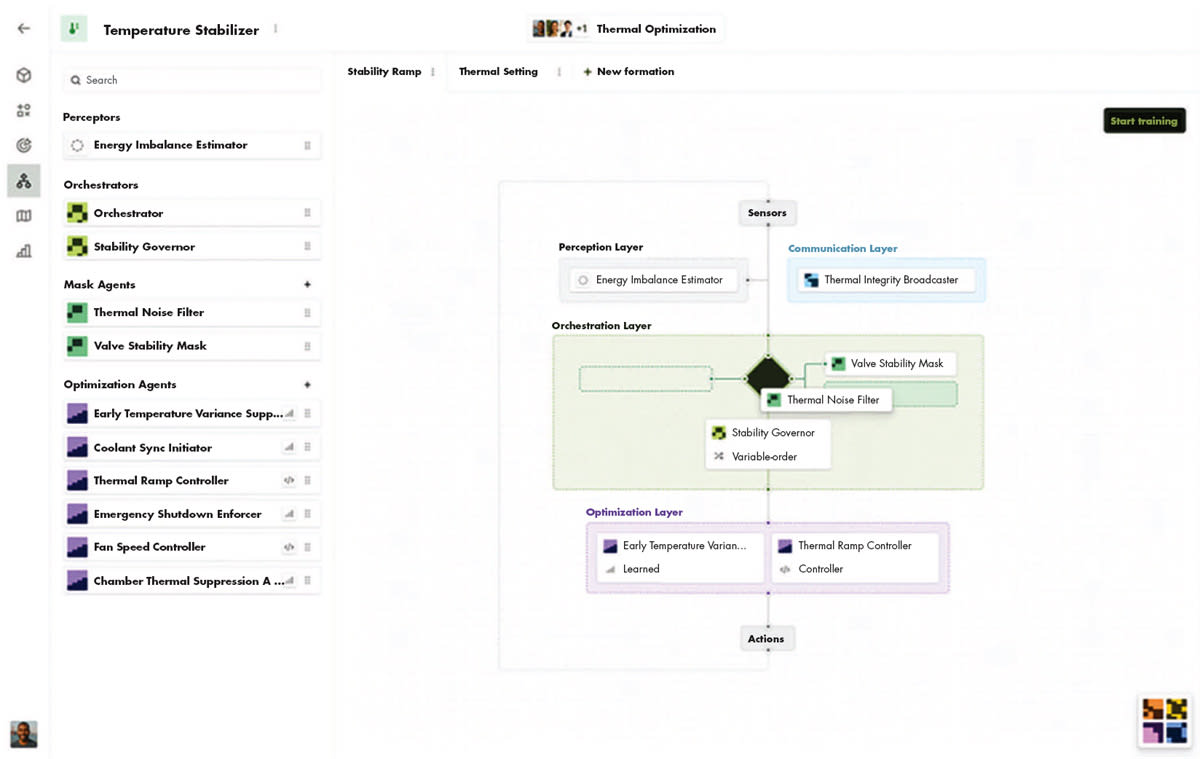

Machine teaching provides that framework. Instead of training an opaque model on raw data, engineers decompose a process into skills that reflect how experts actually work. Some of these skills involve complex perception — noticing and interpreting the tone of a motor, the pattern of a flame, or the rhythm of a line that signals when something is off. Others involve matching control strategies to scenarios: For example, in chemical manufacturing, experienced operators treat the same reactor differently depending on whether it is in startup, steady-state production, or cool-down. Each phase requires a distinct set of strategies, and the ability to switch between them is learned through years of practice.

The best way to capture these skills in an industrial AI system is to create a multi-agent system: a team of agents where each agent specializes in a single expert skill. Going back to the glass manufacturing example, the expert operator described seven distinct control strategies he used depending on the situation. Those strategies became the building blocks of a multi-agent system, each encoded as a modular skill aligned to goals and constraints.

The result is automation that scales expertise without losing transparency, adaptability, or human logic.
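One way to picture a modular skill aligned to goals and constraints is as a small data structure. The sketch below is illustrative only: the `Skill` class, the reactor phase names used as skill names, the gains, and the limits are all assumptions made for this example, not details of the systems described in this article.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

Observation = Dict[str, float]

@dataclass(frozen=True)
class Skill:
    """One expert strategy, packaged with its goal and its hard limits."""
    name: str
    goal: str                                # human-readable objective
    limits: Tuple[float, float]              # allowed (min, max) for the action
    policy: Callable[[Observation], float]   # maps an observation to a raw action

    def act(self, obs: Observation) -> float:
        """Run the policy, then clamp the result to the skill's limits."""
        low, high = self.limits
        return max(low, min(high, self.policy(obs)))

# Hypothetical reactor skills, one per operating phase (gains are made up).
startup = Skill(
    name="startup",
    goal="raise temperature toward setpoint without overshoot",
    limits=(0.0, 0.5),
    policy=lambda obs: 0.05 * (obs["setpoint"] - obs["temperature"]),
)
steady_state = Skill(
    name="steady_state",
    goal="hold temperature at setpoint",
    limits=(0.0, 1.0),
    policy=lambda obs: 0.5 + 0.02 * (obs["setpoint"] - obs["temperature"]),
)

obs = {"temperature": 150.0, "setpoint": 180.0}
print(startup.name, startup.act(obs))            # raw action exceeds the limit, so it is clamped
print(steady_state.name, steady_state.act(obs))  # likewise clamped to this skill's upper limit
```

Keeping the goal and limits next to the policy means each skill can be read, reviewed, and tested on its own, which is the property that makes the overall system explainable.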

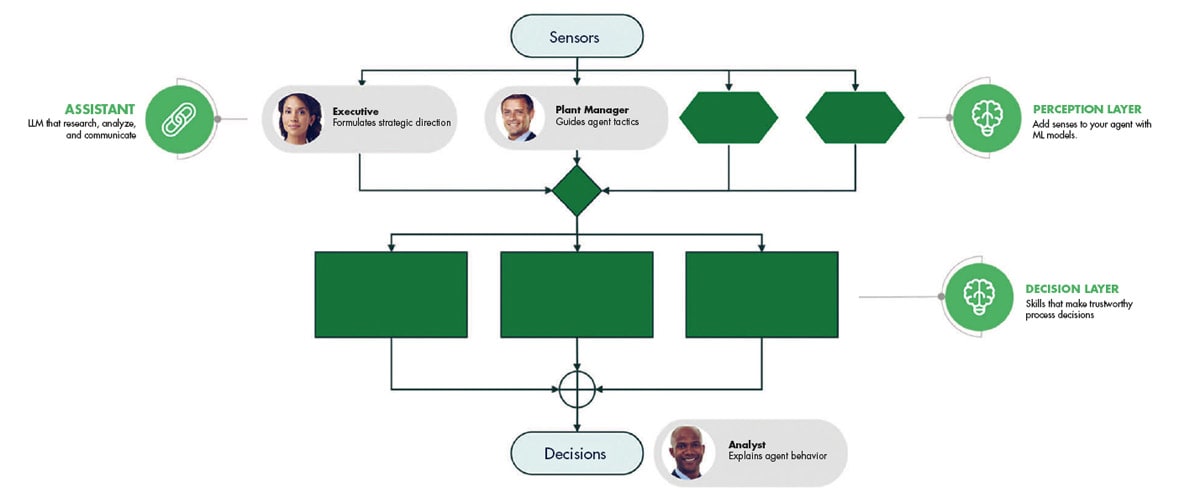

Multi-agent systems built through machine teaching share a common structure. At a high level, they resemble classical control architectures, but with modularity and adaptability designed in from the start.

These systems operate as a feedback loop. At each time interval, sensors gather data from the process. That data is interpreted by a perception layer that uses calculations or machine learning to convert raw signals into actionable information — classifying states, detecting anomalies, or predicting near-future outcomes. From there, the specialized agents within the action layer determine and carry out the next move.
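The loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the sensor names, the pressure threshold, and the proportional gain are hypothetical, and a single function stands in for the whole action layer.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Percept:
    error: float    # distance from setpoint
    anomaly: bool   # True when a reading looks abnormal

def perceive(readings: Dict[str, float]) -> Percept:
    """Perception layer: turn raw signals into actionable information."""
    return Percept(
        error=readings["setpoint"] - readings["temperature"],
        anomaly=readings["pressure"] > 5.0,   # hypothetical alarm threshold
    )

def act(percept: Percept) -> float:
    """Action layer: choose the next heater command in the range 0.0 to 1.0."""
    if percept.anomaly:
        return 0.0                            # back off when something looks wrong
    return max(0.0, min(1.0, 0.1 * percept.error))

def run_loop(stream: List[Dict[str, float]]) -> List[float]:
    """One pass of sense -> perceive -> act per time interval."""
    return [act(perceive(readings)) for readings in stream]

# Three time intervals of made-up sensor data.
stream = [
    {"temperature": 170.0, "setpoint": 180.0, "pressure": 3.0},
    {"temperature": 178.0, "setpoint": 180.0, "pressure": 3.2},
    {"temperature": 179.0, "setpoint": 180.0, "pressure": 6.1},
]
commands = run_loop(stream)
print(commands)
```

In a real system the perception step might be a trained classifier or predictor, and the action step would be a team of agents rather than one function.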

Each agent within the team is specialized to handle a particular decision, and the system selects which one (or which combination) to apply based on the situation — like a football coach choosing whether to go with a running or a passing play and sending the right players out to the field accordingly.

Similarly, a multi-agent system designed to operate a drone might have agents that specialize in takeoff and landing (different phases of the process) and also agents that specialize in making rapid turns or keeping the craft stable in windy conditions. In a well-orchestrated system, the “coach” agent, known as the orchestrator, learns through practice to ensure that the right agent is making the decisions at the right time.
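The selection step can be sketched as follows. Note the substitution: the orchestrator described above learns its selection behavior through practice, whereas this example uses hand-written rules purely to show the structure. The `DroneState` fields, agent names, and wind threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

@dataclass
class DroneState:
    phase: str         # "takeoff", "cruise", or "landing"
    wind_speed: float  # meters per second

Agent = Callable[[DroneState], str]

# Hypothetical specialist agents; each returns a description of its action.
agents: Dict[str, Agent] = {
    "takeoff": lambda s: "increase thrust",
    "cruise": lambda s: "hold heading and altitude",
    "landing": lambda s: "reduce thrust gradually",
    "stabilize": lambda s: "counter gusts with attitude corrections",
}

def orchestrate(state: DroneState) -> str:
    """Pick which specialist should decide at this time step.

    Fixed rules stand in here for a trained orchestrator.
    """
    if state.phase == "cruise" and state.wind_speed > 8.0:  # assumed gust threshold
        return "stabilize"
    return state.phase

def step(state: DroneState) -> Tuple[str, str]:
    """Return the chosen agent's name and the action it proposes."""
    name = orchestrate(state)
    return name, agents[name](state)

print(step(DroneState(phase="takeoff", wind_speed=2.0)))
print(step(DroneState(phase="cruise", wind_speed=12.0)))
```

Because the orchestrator returns the name of the agent it chose, every decision can be traced back to a specific skill, which supports the transparency goal discussed earlier.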

These agents are defined and trained based on the knowledge of expert engineers and operators. Through structured interviews or ongoing involvement in the design process, their strategies and heuristics become the blueprint that guides how skills are defined, orchestrated, and refined over time.

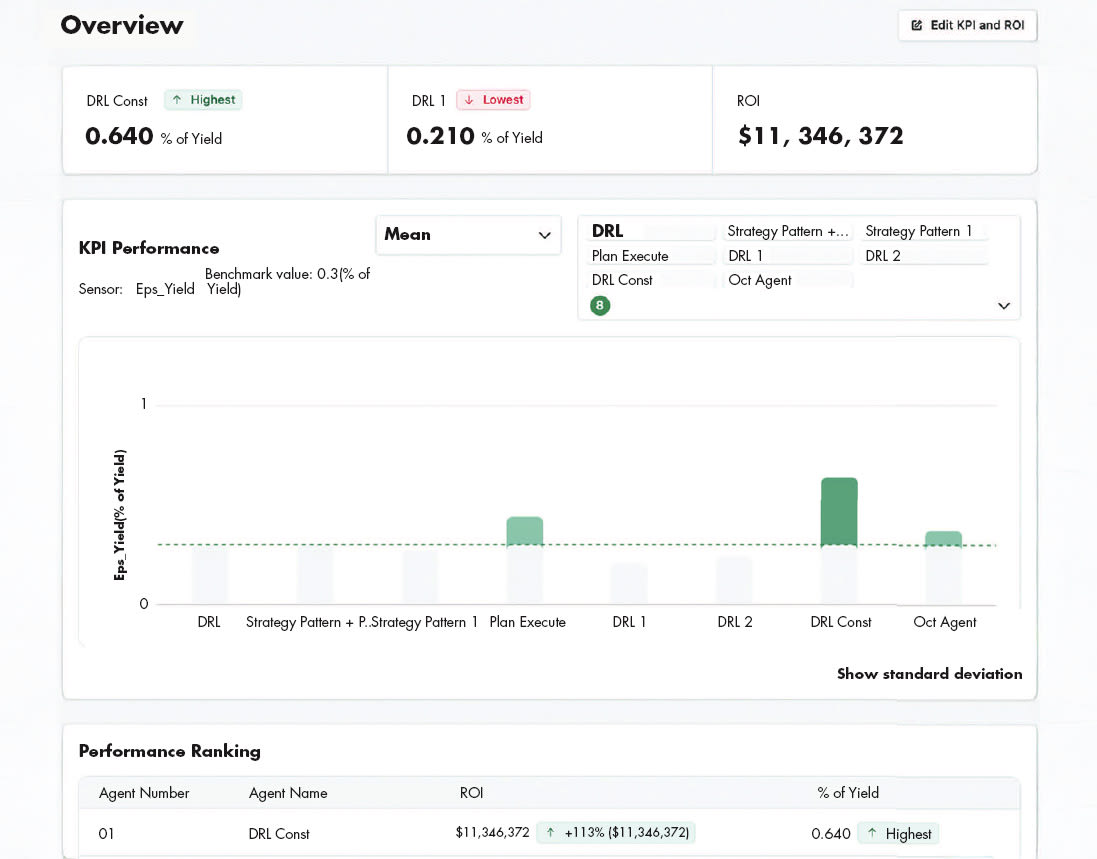

For multi-agent systems to be effective, they need the same kind of practice that a human apprentice would get. In industrial settings, that practice happens first in simulation. Simulation vendors offer physics-based models that can be used to train AI, but often the most effective simulations are instead built from historian data. By using machine learning techniques to transform real data into a simulation that can generate an effectively unlimited number of representative scenarios, engineers can create a safe training ground where agents can attempt millions of decisions without risk to production.
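As a rough illustration of a data-driven simulator, the sketch below fits a one-step linear model to logged data and wraps it in a small simulation class. Everything here is an assumption made for the example: the linear model form, the `HistorianSimulator` class, and the synthetic log that stands in for real historian data. Production simulators would typically use richer models.

```python
import random
from typing import List, Tuple

def fit_one_step_model(x: List[float], u: List[float]) -> Tuple[float, float]:
    """Least-squares fit of x[t+1] = a*x[t] + b*u[t] from logged data."""
    xs, us, ys = x[:-1], u[:-1], x[1:]
    sxx = sum(xi * xi for xi in xs)
    suu = sum(ui * ui for ui in us)
    sxu = sum(xi * ui for xi, ui in zip(xs, us))
    sxy = sum(xi * yi for xi, yi in zip(xs, ys))
    suy = sum(ui * yi for ui, yi in zip(us, ys))
    det = sxx * suu - sxu * sxu          # determinant of the 2x2 normal equations
    a = (sxy * suu - suy * sxu) / det
    b = (suy * sxx - sxy * sxu) / det
    return a, b

class HistorianSimulator:
    """Replays the fitted dynamics, adding noise to vary the scenarios."""

    def __init__(self, a: float, b: float, noise_std: float, seed: int = 0):
        self.a, self.b, self.noise_std = a, b, noise_std
        self.rng = random.Random(seed)
        self.x = 0.0

    def reset(self, x0: float) -> float:
        self.x = x0
        return self.x

    def step(self, u: float) -> float:
        noise = self.rng.gauss(0.0, self.noise_std)
        self.x = self.a * self.x + self.b * u + noise
        return self.x

# Synthetic stand-in for a historian log: state x driven by control input u.
rng = random.Random(42)
u_log = [rng.uniform(0.0, 1.0) for _ in range(200)]
x_log = [20.0]
for ui in u_log[:-1]:
    x_log.append(0.9 * x_log[-1] + 0.5 * ui)

a, b = fit_one_step_model(x_log, u_log)
sim = HistorianSimulator(a, b, noise_std=0.05)
sim.reset(10.0)
episode = [sim.step(0.5) for _ in range(5)]   # one short practice episode
print(round(a, 3), round(b, 3), episode)
```

Resetting the simulator with different starting states and seeds yields as many distinct practice episodes as training requires, without touching the plant.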

Validation by Simulation

The goal in simulation is not only to test performance but to validate that the strategies encoded from operator expertise behave as expected. Each skill is exercised across a range of conditions — steady state, transients, and disruptions — and refined until it consistently meets its goals. Because the simulation environment mirrors the plant’s dynamics, engineers can evaluate how agents cooperate as a system, benchmark them against existing methods, and identify the combinations that yield the most stable and adaptable results.
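A validation harness along these lines might look like the sketch below, which runs a candidate controller and a baseline through steady-state, transient, and disruption scenarios and records the mean tracking error for each. The toy plant model, both controllers, and all numeric values are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Controller = Callable[[float, float], float]   # (setpoint, measurement) -> action

@dataclass
class Scenario:
    name: str
    setpoint: Callable[[int], float]      # setpoint as a function of time step
    disturbance: Callable[[int], float]   # external upset as a function of time step

def plant_step(x: float, u: float, d: float) -> float:
    """Toy first-order process standing in for the simulated plant."""
    return 0.9 * x + 0.5 * u + d

def mean_abs_error(controller: Controller, scenario: Scenario,
                   steps: int = 100, x0: float = 10.0) -> float:
    """Average distance from setpoint over one simulated run."""
    x, total = x0, 0.0
    for t in range(steps):
        sp = scenario.setpoint(t)
        x = plant_step(x, controller(sp, x), scenario.disturbance(t))
        total += abs(sp - x)
    return total / steps

def baseline(sp: float, x: float) -> float:
    """Existing method: proportional control only."""
    return 0.5 * (sp - x)

def candidate(sp: float, x: float) -> float:
    """Skill under test: proportional control plus a feedforward term."""
    return 0.5 * (sp - x) + 0.2 * sp

scenarios: List[Scenario] = [
    Scenario("steady_state", lambda t: 10.0, lambda t: 0.0),
    Scenario("transient", lambda t: 10.0 if t < 50 else 15.0, lambda t: 0.0),
    Scenario("disruption", lambda t: 10.0, lambda t: -1.0 if 40 <= t < 60 else 0.0),
]

results: Dict[str, Dict[str, float]] = {
    s.name: {
        "baseline": mean_abs_error(baseline, s),
        "candidate": mean_abs_error(candidate, s),
    }
    for s in scenarios
}
for name, scores in results.items():
    print(name, scores)
```

Reporting results per scenario, rather than as a single aggregate score, makes it easier to see which conditions a skill still handles poorly and needs refinement for.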

Once the best-performing systems have been validated in simulation, they can be deployed into production. Integration typically involves connecting the trained multi-agent system to the plant’s existing control infrastructure, where the agents operate in real time alongside human operators. Deployment is staged: systems are first tested under controlled conditions, evaluated against benchmarks, and certified by domain experts before being trusted in live operation.

In the gob formation process, this staged approach enabled the system to take on the more than 90 sensors and 60 control actions in real time. The operator who had originally defined the strategies oversaw each step of validation, ensuring the agents’ behavior was transparent and consistent with established practice. In some cases, the system even surfaced alternative strategies not previously considered, expanding the operator’s understanding of the process while maintaining quality.

Eventually the most senior operator determined that the system was capable of closed-loop control of the process, and he certified it as an expert, just as he would have certified a human operator. But while fully autonomous control was an option, the company chose a decision-support deployment model, with the AI agents making suggestions to human operators who were still in the loop, allowing all operators to perform at the level of the most skilled.
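The difference between the two deployment models can be sketched as a single dispatch function. This is a hypothetical illustration: the `Mode` enum, the `dispatch` function, and the operator callback are invented for this example and do not describe the deployed system's interface.

```python
from enum import Enum
from typing import Callable

class Mode(Enum):
    DECISION_SUPPORT = "decision_support"   # agents suggest, a human decides
    CLOSED_LOOP = "closed_loop"             # agents act directly

def dispatch(mode: Mode, recommended: float,
             operator: Callable[[float], float]) -> float:
    """Return the action actually sent to the process.

    In decision-support mode the operator sees the recommendation and
    returns the final action, which may or may not match it.
    """
    if mode is Mode.CLOSED_LOOP:
        return recommended
    return operator(recommended)

def accept(recommended: float) -> float:
    """An operator who accepts the suggestion unchanged."""
    return recommended

def trim(recommended: float) -> float:
    """An operator who caps the suggestion at a level they are comfortable with."""
    return min(recommended, 0.6)

print(dispatch(Mode.DECISION_SUPPORT, 0.7, accept))
print(dispatch(Mode.DECISION_SUPPORT, 0.7, trim))
print(dispatch(Mode.CLOSED_LOOP, 0.7, trim))
```

Because the same recommendation feeds both paths, a plant could start in decision-support mode and change only the mode setting if it later chose closed-loop control.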

Comparable outcomes have been demonstrated across a range of industrial domains:

Aluminum can production: A multi-agent system supported operators by stabilizing production lines and improving efficiency where expert staffing was a persistent challenge.

Production scheduling: A multi-agent system balanced throughput and adaptability, managing fluctuating demand and resource constraints more effectively than traditional optimizers.

Food extrusion: A multi-agent system captured expert heuristics to stabilize quality, reduce variability, and improve product consistency.

Oil drilling: A multi-agent system embedded the strategies of drilling engineers, optimizing a process that previously depended almost entirely on human judgment.

Across these applications, the pattern is consistent: Human expertise is engineered into autonomous systems that scale knowledge, preserve judgment, and extend operator impact across the enterprise.

Future Outlook

The future of industrial autonomy will not be defined by systems that operate as opaque black boxes, but by approaches that deliberately embed the expertise of the people who know the processes best. Machine teaching makes this possible by making the skills and strategies of expert operators the foundation of AI system design.

When expertise is captured as skills, validated in simulation, and deployed as part of a multi-agent system, it no longer resides only in the memory of a few operators. Instead it becomes a durable part of the automation itself. This is how autonomy advances: not by replacing operators, but by preserving their strategies in systems that can scale across teams, facilities, and industries.

This article was written by Sarah Cohen, Head of Learning, AMESA (Walnut Creek, CA). For more information, visit here.